Scan Documents Using Camera on Multiple Platforms: A Flutter Guide for Windows, Android, iOS, and Web

Demand for paperless workflows and efficient document management keeps growing. With computer vision, the camera on a smartphone or computer can turn a hard-copy document into a digital image in seconds. In this article, we build a document scanner app using Flutter and the Dynamsoft Document Normalizer SDK that runs on Windows, Android, iOS, and the web.

This article is Part 3 in an 11-Part Series.

- Part 1 - How to Turn Smartphone into a Wireless Keyboard and Barcode QR Scanner in Flutter

- Part 2 - How to Build Windows Desktop Barcode QR Scanner in Flutter

- Part 3 - Scan Documents Using Camera on Multiple Platforms: A Flutter Guide for Windows, Android, iOS, and Web

- Part 4 - How to Build a Prototype for a Last-Mile Delivery App with Flutter and Dynamsoft Vision SDKs

- Part 5 - How to Create a Cross-platform MRZ Scanner App Using Flutter and Dynamsoft Capture Vision

- Part 6 - How to Build a Barcode Scanner App with Flutter Step by Step

- Part 7 - Building an AR-Enhanced Pharma Lookup App with Flutter, Dynamsoft Barcode SDK and Database

- Part 8 - Implementing Flutter Barcode Scanner with Kotlin and CameraX for Android

- Part 9 - Building Multiple Barcode, QR Code and DataMatrix Scanner with Flutter for Inventory Management

- Part 10 - Implementing Flutter QR Code Scanner with Swift and AVFoundation for iOS

- Part 11 - Building a Cross-Platform Barcode Scanner for Mobile, Desktop, and Web with Flutter

Development Environment

- Flutter 3.10.2

- Dart 3.0.2

Required Flutter Plugins

To implement the document scanner app, we need to install the following packages:

- flutter_document_scan_sdk: A Flutter plugin to detect and rectify documents based on the Dynamsoft Document Normalizer SDK, supporting Android, iOS, Windows, Linux and the Web. The plugin is implemented in platform-specific code, including Java, Swift, C++ and JavaScript.

- flutter_exif_rotation: A Flutter plugin to rotate images by reading the Exif data of the image. In portrait mode on iOS, it fixes the images that are rotated 90 degrees counterclockwise.

- file_selector: A Flutter plugin for selecting image files on Windows.

- image_picker: A Flutter plugin for picking image files on Android and iOS.

- camera_windows: A Flutter Windows camera plugin ported from https://github.com/flutter/packages/tree/main/packages/camera/camera_windows. It provides a callback function for receiving camera frames.

- camera: A Flutter camera plugin for Android, iOS and web.

Getting Flutter Camera Frames on Android, iOS, Web and Windows

The official Flutter camera plugin does not offer the same capabilities on every platform. Its startImageStream() method delivers the latest streaming frames, which can be used for image processing, but the method is only available on Android and iOS. Here is the code snippet:

await _controller!.startImageStream((CameraImage availableImage) async {
  assert(defaultTargetPlatform == TargetPlatform.android ||
      defaultTargetPlatform == TargetPlatform.iOS);
  // image processing
});

For web applications, you can acquire camera frames for image processing by calling the takePicture() method repeatedly on a timer:

Future<void> decodeFrames() async {
  Future.delayed(const Duration(milliseconds: 20), () async {
    XFile file = await _controller!.takePicture();
    // image processing
    decodeFrames();
  });
}
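The loop above reschedules itself indefinitely. As a minimal sketch of the same polling idea with a way to stop it, the following plain Dart class runs without a camera. `FramePoller`, `capture`, and `onFrame` are hypothetical names that stand in for takePicture() and the image processing step; they are not part of the camera plugin.

```dart
import 'dart:async';

/// Repeatedly calls [capture], hands each frame to [onFrame], and waits
/// [interval] between rounds until [stop] is called.
/// Hypothetical helper for illustration only.
class FramePoller<T> {
  FramePoller(this.capture, this.onFrame,
      {this.interval = const Duration(milliseconds: 20)});

  final Future<T> Function() capture; // stands in for takePicture()
  final FutureOr<void> Function(T frame) onFrame; // image processing
  final Duration interval;

  // Incremented by start() and stop(); a loop exits once its own
  // generation number is no longer the current one.
  int _generation = 0;

  Future<void> start() async {
    final generation = ++_generation; // also cancels any earlier loop
    while (generation == _generation) {
      final frame = await capture();
      if (generation != _generation) break; // stopped during capture
      await onFrame(frame);
      await Future<void>.delayed(interval);
    }
  }

  void stop() => _generation++;
}
```

In a widget, stop() would typically be called from dispose() so that no picture is requested after the camera controller has been released.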

On the web, takePicture() returns an XFile backed by a blob object. A blob holds the image data in memory, so reading it is fast. On Windows, by contrast, the method involves disk I/O, which is not ideal for real-time image processing. As an alternative to the official Windows camera plugin, which is still under development, we can use the ported plugin as follows:

_frameAvailableStreamSubscription =
    (CameraPlatform.instance as CameraWindows)
        .onFrameAvailable(cameraId)
        .listen(_onFrameAvailable);

void _onFrameAvailable(FrameAvailabledEvent event) {
  if (mounted) {
    Map<String, dynamic> map = event.toJson();
    final Uint8List? data = map['bytes'] as Uint8List?;
    // image processing
  }
}

Note: all platform-specific image processing methods should be implemented asynchronously so that they do not block the Dart thread.
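Even with asynchronous detection, a camera can deliver frames faster than they can be processed. One common way to handle this is to drop frames that arrive while an earlier one is still in flight. The following is a minimal sketch in plain Dart; `FrameGate` and `process` are hypothetical names and not part of any plugin mentioned in this article.

```dart
import 'dart:async';

/// Runs [process] on a frame only when no earlier frame is still being
/// processed; frames that arrive in the meantime are dropped.
/// Hypothetical helper for illustration only.
class FrameGate<T> {
  FrameGate(this.process);

  final Future<void> Function(T frame) process;
  bool _busy = false;
  int dropped = 0;

  /// Returns true if the frame was processed, false if it was dropped.
  Future<bool> offer(T frame) async {
    if (_busy) {
      dropped++;
      return false;
    }
    _busy = true;
    try {
      await process(frame);
    } finally {
      _busy = false; // release the gate even if processing throws
    }
    return true;
  }
}
```

A frame callback would then call offer() for every incoming frame and let the gate decide whether detection runs.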

How to Implement Platform-Specific Asynchronous Methods in Flutter

The image processing methods are CPU-intensive and time-consuming, so we run them on worker threads.

Android (Java)

On Android, we can use a single-thread executor to run the image processing methods in a worker thread:

final Executor mExecutor = Executors.newSingleThreadExecutor();

final byte[] bytes = call.argument("bytes");
final int width = call.argument("width");
final int height = call.argument("height");
final int stride = call.argument("stride");
final int format = call.argument("format");
final Result r = result;

// Run detection on the executor's worker thread.
mExecutor.execute(new Runnable() {
    @Override
    public void run() {
        ImageData imageData = new ImageData();
        imageData.bytes = bytes;
        imageData.width = width;
        imageData.height = height;
        imageData.stride = stride;
        imageData.format = format;

        List<Map<String, Object>> tmp = new ArrayList<>();
        try {
            DetectedQuadResult[] detectedResults = mNormalizer.detectQuad(imageData);
            // Converting detectedResults into tmp is omitted in this excerpt.
        } catch (DocumentNormalizerException e) {
            e.printStackTrace();
        }

        final List<Map<String, Object>> out = tmp;
        // Reply through mHandler so the result is delivered on the handler's thread.
        mHandler.post(new Runnable() {
            @Override
            public void run() {
                r.success(out);
            }
        });
    }
});

iOS (Swift)

On iOS, we can use DispatchQueue to run the image processing methods in a worker thread:

let arguments: NSDictionary = call.arguments as! NSDictionary
DispatchQueue.global().async {
    let out = NSMutableArray()
    let buffer:FlutterStandardTypedData = arguments.value(forKey: "bytes") as! FlutterStandardTypedData
    let w:Int = arguments.value(forKey: "width") as! Int
    let h:Int = arguments.value(forKey: "height") as! Int
    let stride:Int = arguments.value(forKey: "stride") as! Int
    let format:Int = arguments.value(forKey: "format") as! Int
    let enumImagePixelFormat = EnumImagePixelFormat(rawValue: format)
    let data = iImageData()
    data.bytes = buffer.data
    data.width = w
    data.height = h
    data.stride = stride
    data.format = enumImagePixelFormat!
    let detectedResults = try? self.normalizer!.detectQuadFromBuffer(data)
}

Web (JavaScript)

On the Web, the JavaScript detection method is asynchronous by default. We can directly call the method in Dart:

String pixelFormat = 'rgba';
if (format == ImagePixelFormat.IPF_GRAYSCALED.index) {
  pixelFormat = 'grey';
} else if (format == ImagePixelFormat.IPF_RGB_888.index) {
  pixelFormat = 'rgb';
} else if (format == ImagePixelFormat.IPF_BGR_888.index) {
  pixelFormat = 'bgr';
} else if (format == ImagePixelFormat.IPF_ARGB_8888.index) {
  pixelFormat = 'rgba';
} else if (format == ImagePixelFormat.IPF_ABGR_8888.index) {
  pixelFormat = 'bgra';
}

List<dynamic> results = await handleThenable(_normalizer!.detectQuad(
    parse(jsonEncode({
      'data': bytes,
      'width': width,
      'height': height,
      'pixelFormat': pixelFormat,
    })),
    true));
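The if/else chain can also be expressed as a lookup table, which keeps the mapping in one place. The sketch below is plain Dart and uses a local stand-in enum, `PixelFormat`, because the real ImagePixelFormat comes from the plugin; the enum, the map, and `toJsPixelFormat` are hypothetical names, and the string values simply mirror the mapping shown above.

```dart
/// Local stand-in for the plugin's ImagePixelFormat, limited to a few
/// members. Illustrative only.
enum PixelFormat { grayscaled, rgb888, bgr888, argb8888, abgr8888, nv21 }

/// Pixel format strings, copied from the if/else chain above.
const Map<PixelFormat, String> jsPixelFormats = {
  PixelFormat.grayscaled: 'grey',
  PixelFormat.rgb888: 'rgb',
  PixelFormat.bgr888: 'bgr',
  PixelFormat.argb8888: 'rgba',
  PixelFormat.abgr8888: 'bgra',
};

/// Returns the string for [format], falling back to 'rgba' for any
/// format that has no entry, as the original chain does.
String toJsPixelFormat(PixelFormat format) =>
    jsPixelFormats[format] ?? 'rgba';
```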

Windows (C++)

It takes several steps to implement asynchronous methods in C++:

- Create a vector to store the flutter::MethodResult objects and a worker thread to run the image processing tasks:

class WorkerThread
{
public:
    std::mutex m;
    std::condition_variable cv;
    std::queue<Task> tasks = {};
    volatile bool running;
    std::thread t;
};

vector<std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>>> pendingResults = {};
WorkerThread *worker;

void Init()
{
    worker = new WorkerThread();
    worker->running = true;
    worker->t = thread(&run, this);
}

- Queue the detection task to the worker thread:

void DetectBuffer(std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>>& pendingResult, const unsigned char * buffer, int width, int height, int stride, int format)
{
    pendingResults.push_back(std::move(pendingResult));
    queueTask((unsigned char*)buffer, width, height, stride, format, stride * height);
}

void queueTask(unsigned char* imageBuffer, int width, int height, int stride, int format, int len)
{
    unsigned char *data = (unsigned char *)malloc(len);
    memcpy(data, imageBuffer, len);

    std::unique_lock<std::mutex> lk(worker->m);
    clearTasks();
    std::function<void()> task_function = std::bind(processBuffer, this, data, width, height, stride, format);
    Task task;
    task.func = task_function;
    task.buffer = data;
    worker->tasks.push(task);
    worker->cv.notify_one();
    lk.unlock();
}

- After running document detection, pop the pending result object from the vector and return the detected quadrilaterals:

static void processBuffer(DocumentManager *self, unsigned char * buffer, int width, int height, int stride, int format)
{
    ImageData data;
    data.bytes = buffer;
    data.width = width;
    data.height = height;
    data.stride = stride;
    data.format = self->getPixelFormat(format);
    data.bytesLength = stride * height;
    data.orientation = 0;

    DetectedQuadResultArray *pResults = NULL;
    int ret = DDN_DetectQuadFromBuffer(self->normalizer, &data, "", &pResults);
    if (ret)
    {
        printf("Detection error: %s\n", DC_GetErrorString(ret));
    }

    free(buffer);

    EncodableList results = self->WrapResults(pResults);
    std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>> result = std::move(self->pendingResults.front());
    self->pendingResults.erase(self->pendingResults.begin());
    result->Success(results);
}

Using the Flutter Document Detection Methods

The flutter_document_scan_sdk package offers two methods for document detection: one method handles files while the other deals with buffers.

Future<List<DocumentResult>?> detectFile(String file)

Future<List<DocumentResult>?> detectBuffer(Uint8List bytes, int width, int height, int stride, int format)

Let’s explore how to utilize the API across different platforms.

Android and iOS

Image formats and orientation can differ between Android and iOS. It’s important to address these differences when handling images on these platforms.

Check the camera image format and call the detectBuffer() method:

int format = ImagePixelFormat.IPF_NV21.index;

switch (availableImage.format.group) {
  case ImageFormatGroup.yuv420:
    format = ImagePixelFormat.IPF_NV21.index;
    break;
  case ImageFormatGroup.bgra8888:
    format = ImagePixelFormat.IPF_ARGB_8888.index;
    break;
  default:
    format = ImagePixelFormat.IPF_RGB_888.index;
}

flutterDocumentScanSdkPlugin
    .detectBuffer(
        availableImage.planes[0].bytes,
        availableImage.width,
        availableImage.height,
        availableImage.planes[0].bytesPerRow,
        format)
    .then((results) {});

When scanning documents in portrait mode on Android, the detected document corners need to be rotated 90 degrees to match the preview:

if (MediaQuery.of(context).size.width <
    MediaQuery.of(context).size.height) {
  if (Platform.isAndroid) {
    results = rotate90(results);
  }
}

List<DocumentResult> rotate90(List<DocumentResult>? input) {
  if (input == null) {
    return [];
  }

  List<DocumentResult> output = [];
  for (DocumentResult result in input) {
    double x1 = result.points[0].dx;
    double x2 = result.points[1].dx;
    double x3 = result.points[2].dx;
    double x4 = result.points[3].dx;
    double y1 = result.points[0].dy;
    double y2 = result.points[1].dy;
    double y3 = result.points[2].dy;
    double y4 = result.points[3].dy;

    List<Offset> points = [
      Offset(_previewSize!.height.toInt() - y1, x1),
      Offset(_previewSize!.height.toInt() - y2, x2),
      Offset(_previewSize!.height.toInt() - y3, x3),
      Offset(_previewSize!.height.toInt() - y4, x4)
    ];
    DocumentResult newResult = DocumentResult(result.confidence, points, []);
    output.add(newResult);
  }

  return output;
}
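To see what the coordinate swap in rotate90() does: a point (x, y) in a frame of height H moves to (H - y, x), which is a 90-degree clockwise rotation in image coordinates (y pointing down). The following self-contained check uses Point from dart:math in place of Flutter's Offset; `rotatePoint90` is a hypothetical helper written only for this illustration.

```dart
import 'dart:math';

/// Rotates [p] by 90 degrees clockwise inside a frame of height
/// [frameHeight], in image coordinates with y pointing down:
/// (x, y) -> (frameHeight - y, x).
Point<double> rotatePoint90(Point<double> p, double frameHeight) =>
    Point<double>(frameHeight - p.y, p.x);

// Example for a 640x480 frame:
//   top-left     (0, 0)     -> (480, 0)   top-right of the rotated frame
//   bottom-right (640, 480) -> (0, 640)   bottom-left of the rotated frame
//   (10, 20)                -> (460, 10)
```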

Web

On the web, we can call the detectFile() method:

XFile file = await _controller!.takePicture();
_detectionResults =
    await flutterDocumentScanSdkPlugin.detectFile(file.path);

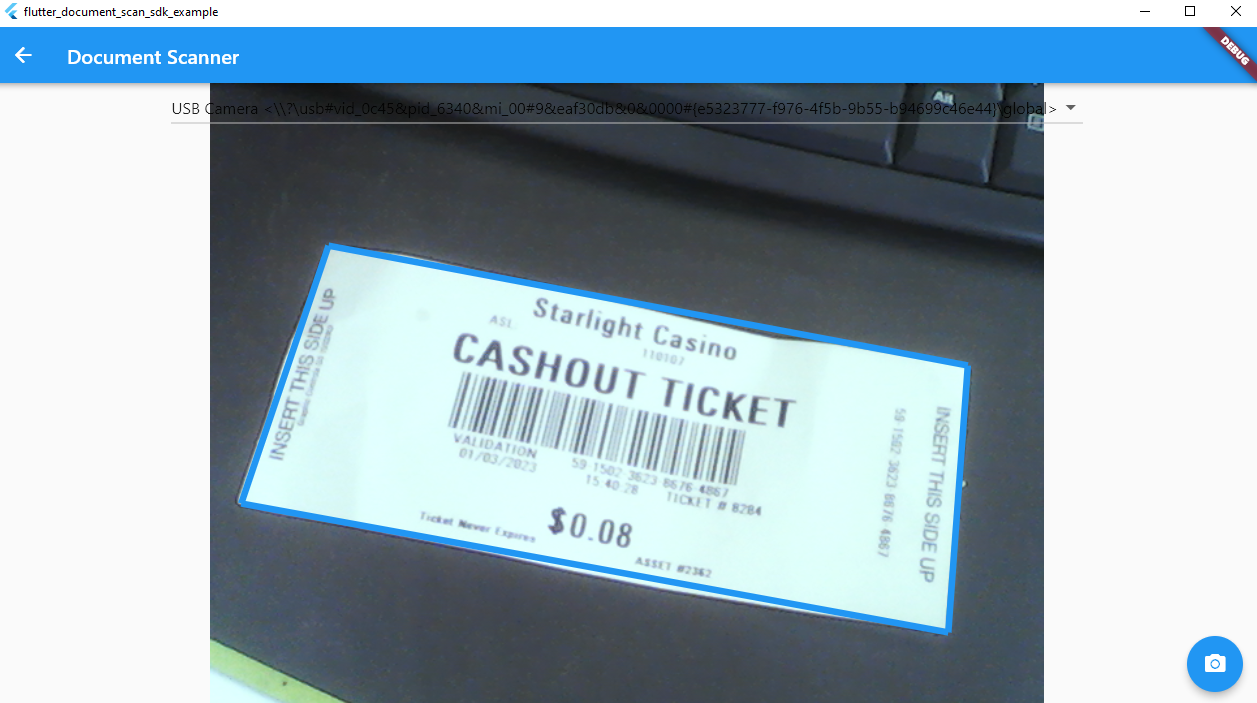

Windows

On Windows, we can call the detectBuffer() method:

int width = _previewSize!.width.toInt();
int height = _previewSize!.height.toInt();

flutterDocumentScanSdkPlugin
    .detectBuffer(data, width, height, width * 4,
        ImagePixelFormat.IPF_ARGB_8888.index)
    .then((results) {});

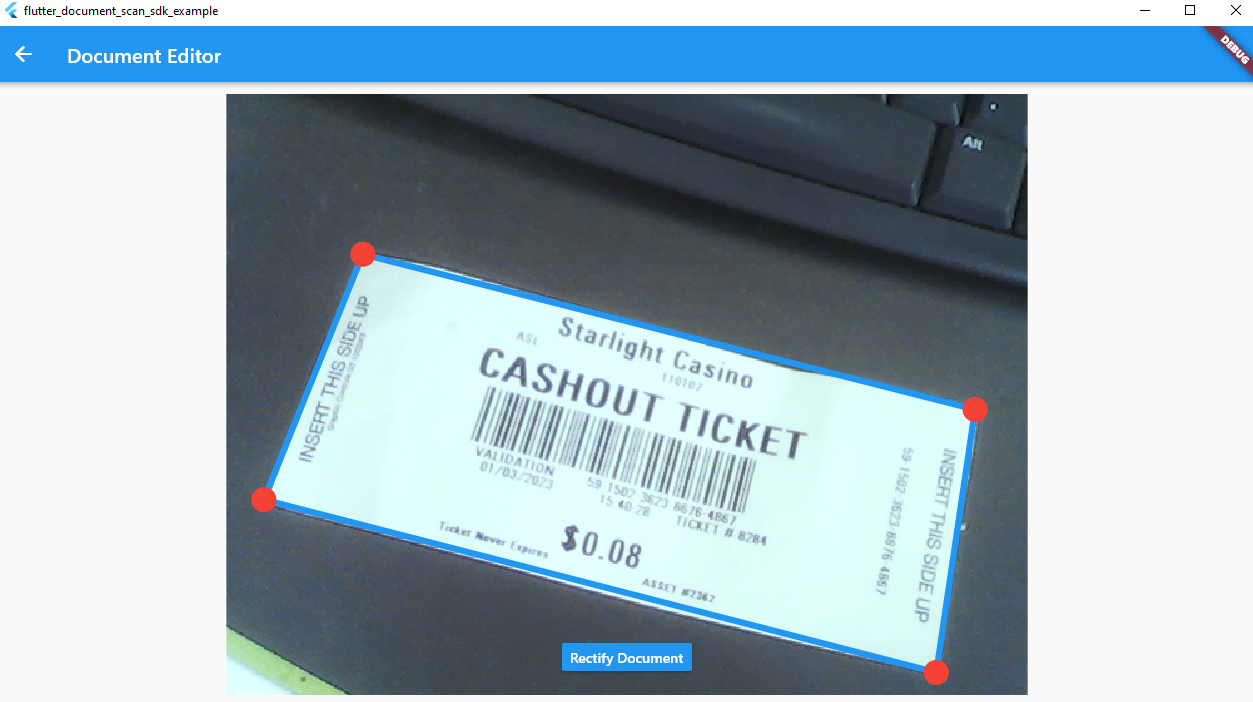
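The stride argument of detectBuffer() is the number of bytes in one image row. For a tightly packed 32-bit format, such as the one used in the Windows call, that is width * 4. The sketch below derives the stride and checks the buffer size before detection; `packedStride` and `hasExpectedLength` are hypothetical helpers, and they assume rows carry no padding.

```dart
import 'dart:typed_data';

/// Bytes per row for an image whose rows are tightly packed (no padding).
int packedStride(int width, int bytesPerPixel) => width * bytesPerPixel;

/// Returns true if [bytes] holds at least [height] rows of [stride] bytes.
bool hasExpectedLength(Uint8List bytes, int height, int stride) =>
    bytes.length >= stride * height;
```

A check like this makes it easier to spot a mismatch between the preview size and the frame actually delivered before the buffer reaches native code.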

How to Create a Flutter Overlay for Editing Document Edges

Edge detection is not always accurate. To crop the document precisely, the app should let the user adjust the detected edges.

The following steps demonstrate how to create a Flutter overlay for editing document edges:

- Create a CustomPainter to draw the image and quadrilaterals.

class ImagePainter extends CustomPainter {
  ImagePainter(this.image, this.results);
  ui.Image? image;
  final List<DocumentResult> results;

  @override
  void paint(Canvas canvas, Size size) {
    final paint = Paint()
      ..color = Colors.blue
      ..strokeWidth = 5
      ..style = PaintingStyle.stroke;

    if (image != null) {
      canvas.drawImage(image!, Offset.zero, paint);
    }

    Paint circlePaint = Paint()
      ..color = Colors.red
      ..style = PaintingStyle.fill;

    for (var result in results) {
      canvas.drawLine(result.points[0], result.points[1], paint);
      canvas.drawLine(result.points[1], result.points[2], paint);
      canvas.drawLine(result.points[2], result.points[3], paint);
      canvas.drawLine(result.points[3], result.points[0], paint);

      if (image != null) {
        canvas.drawCircle(result.points[0], 10, circlePaint);
        canvas.drawCircle(result.points[1], 10, circlePaint);
        canvas.drawCircle(result.points[2], 10, circlePaint);
        canvas.drawCircle(result.points[3], 10, circlePaint);
      }
    }
  }

  @override
  bool shouldRepaint(ImagePainter oldDelegate) => true;
}

- Wrap the CustomPaint widget with a GestureDetector to handle the user’s touch events. When the onPanUpdate event is triggered, we check whether the user’s finger is close to one of the document corners. If so, we update the coordinates and redraw the document edges.

SizedBox(
    width: MediaQuery.of(context).size.width,
    height: MediaQuery.of(context).size.height -
        MediaQuery.of(context).padding.top -
        80,
    child: FittedBox(
        fit: BoxFit.contain,
        child: SizedBox(
            width: image!.width.toDouble(),
            height: image!.height.toDouble(),
            child: GestureDetector(
              onPanUpdate: (details) {
                // Ignore touches outside the image.
                if (details.localPosition.dx < 0 ||
                    details.localPosition.dy < 0 ||
                    details.localPosition.dx > image!.width ||
                    details.localPosition.dy > image!.height) {
                  return;
                }

                for (int i = 0; i < detectionResults!.length; i++) {
                  for (int j = 0; j < detectionResults![i].points.length; j++) {
                    if ((detectionResults![i].points[j] - details.localPosition)
                            .distance <
                        20) {
                      bool isCollided = false;
                      // Compare against each of the other three corners.
                      for (int index = 1; index < 4; index++) {
                        int otherIndex = (j + index) % 4;
                        if ((detectionResults![i].points[otherIndex] -
                                    details.localPosition)
                                .distance <
                            20) {
                          isCollided = true;
                          return;
                        }
                      }

                      setState(() {
                        if (!isCollided) {
                          detectionResults![i].points[j] = details.localPosition;
                        }
                      });
                    }
                  }
                }
              },
              child: CustomPaint(
                painter: ImagePainter(image, detectionResults!),
              ),
            ))))

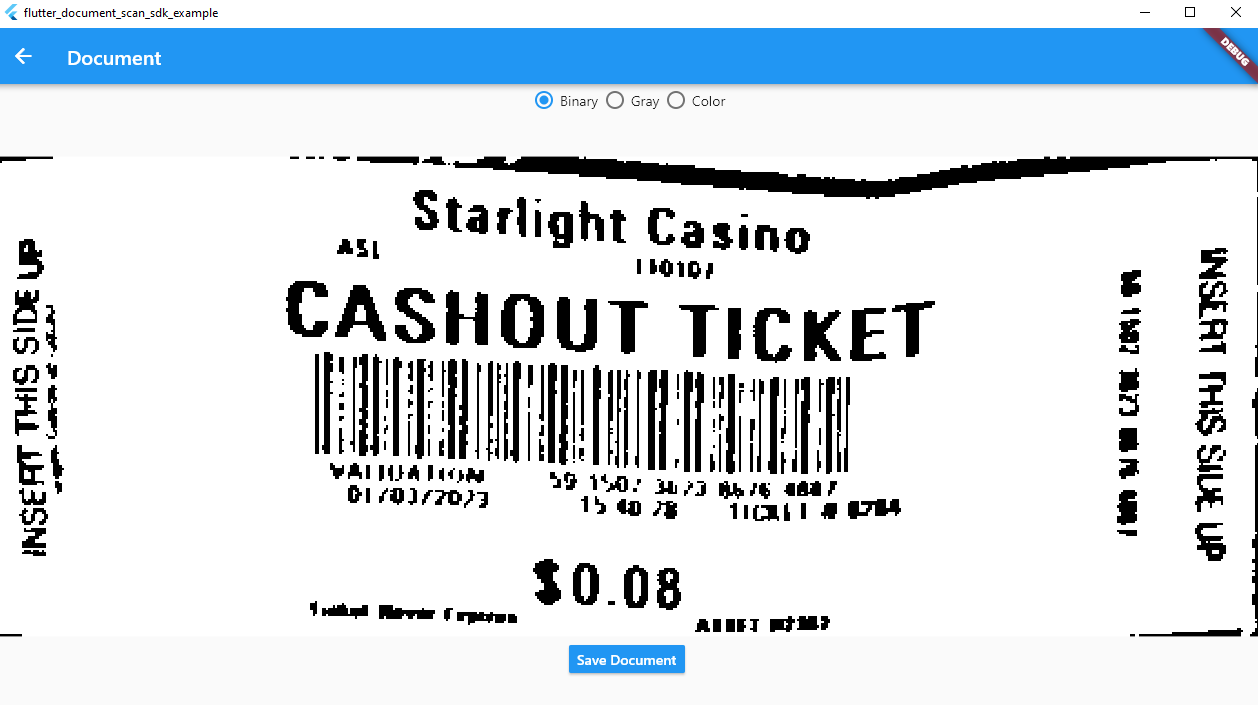
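The gesture handler tests every corner against a 20-pixel radius around the touch position. That hit test can be sketched on its own in plain Dart. `nearestCorner` is a hypothetical helper, not the article's code: it returns the closest corner rather than the first one in range, and it uses Point from dart:math in place of Flutter's Offset.

```dart
import 'dart:math';

/// Returns the index of the corner in [corners] that is closest to
/// [touch], or -1 if no corner lies within [radius].
int nearestCorner(List<Point<double>> corners, Point<double> touch,
    {double radius = 20}) {
  var best = -1;
  var bestDistance = radius;
  for (var i = 0; i < corners.length; i++) {
    final distance = corners[i].distanceTo(touch);
    if (distance < bestDistance) {
      best = i;
      bestDistance = distance;
    }
  }
  return best;
}
```

Picking the single closest corner avoids the case where two corners are both within range and the handler has to decide which one the user meant.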

Rectifying Documents Using the Normalize Methods

The SDK offers two methods for document rectification:

Future<NormalizedImage?> normalizeFile(String file, dynamic points)

Future<NormalizedImage?> normalizeBuffer(Uint8List bytes, int width, int height, int stride, int format, dynamic points)

The points parameter represents the document corners.

Because the detection method may return multiple results, we must choose one of them for rectification.

normalizedImage = await normalizeBuffer(
    widget.sourceImage, detectionResults[0].points);

normalizedImage = await flutterDocumentScanSdkPlugin.normalizeFile(
    file, detectionResults[0].points);
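The snippets above take the first detection result. When several quadrilaterals are returned, one option is to pick the one with the highest confidence instead. The sketch below is plain Dart with a hypothetical stand-in class, `Detection`; the plugin's DocumentResult carries a confidence value and corner points, as seen in rotate90(), but the field types used here are assumptions.

```dart
/// Stand-in for the plugin's DocumentResult. Illustrative only; the
/// [label] field exists just to tell instances apart in examples.
class Detection {
  const Detection(this.confidence, this.label);
  final num confidence;
  final String label;
}

/// Returns the detection with the highest confidence, or null if
/// [results] is null or empty. Ties keep the earlier element.
Detection? bestDetection(List<Detection>? results) {
  if (results == null || results.isEmpty) return null;
  return results.reduce((a, b) => b.confidence > a.confidence ? b : a);
}
```

Whether confidence is the right criterion depends on the scene; for example, an app could instead prefer the largest quadrilateral.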

Run and Test the App

- Flutter Web

flutter run -d chrome

- Flutter Android or iOS

flutter run

- Flutter Windows

flutter run -d windows

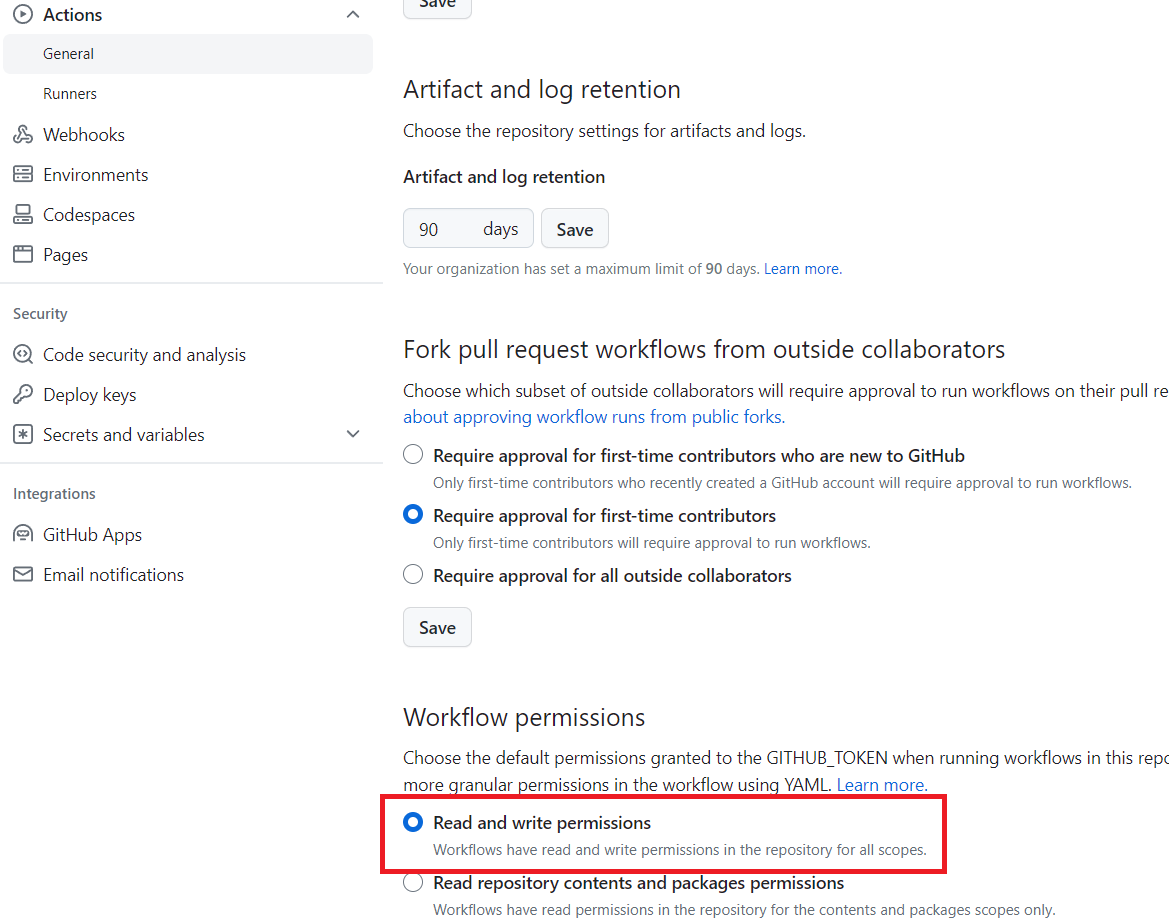

How to Deploy the Flutter App to GitHub Pages

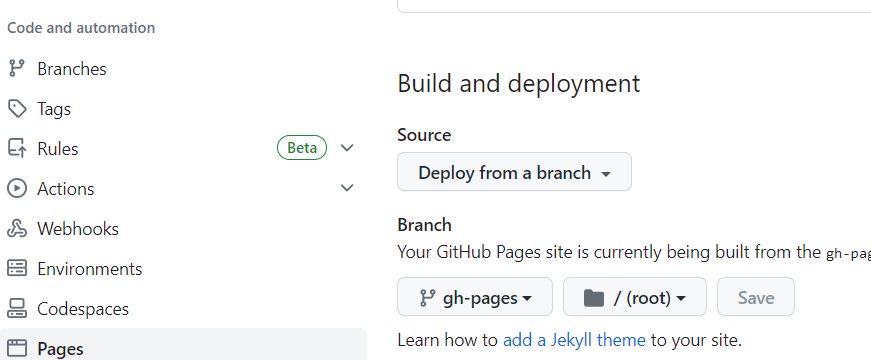

- Grant the write permission to GitHub workflows.

- Create a GitHub workflow file .github/workflows/main.yml. You need to replace the baseHref with your own repository name:

name: Build and Deploy Flutter Web

on:
  push:
    branches:
      - main

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - name: Set up Flutter
        uses: subosito/flutter-action@v1
        with:
          channel: 'stable'
      - name: Get dependencies
        run: flutter pub get
      - uses: bluefireteam/flutter-gh-pages@v7
        with:
          baseHref: /flutter-camera-document-scanner/

- Deploy the gh-pages branch as the GitHub Pages source.

Source Code

https://github.com/yushulx/flutter-barcode-mrz-document-scanner/blob/main/examples/document_scanner