How to Enable Full-Text Search for Scanned Documents in a Web App

Full-text search is the process of examining every word in every stored document to find the documents that meet certain criteria (e.g. text specified by a user).

A full-text search engine can scan the contents of the documents directly if the number of documents is small. However, when the number of documents is potentially large, the documents have to be indexed first so that queries stay efficient. This is what most full-text search engines, such as Lucene, Solr and Elasticsearch, do.
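To illustrate why indexing helps, here is a minimal sketch of an inverted index in plain JavaScript. It is not how FlexSearch or the engines above are implemented; the helper names (`tokenize`, `buildInvertedIndex`, `searchAll`) and the sample pages are made up for illustration. The idea is that each word is mapped to the IDs of the documents containing it once, so a query only has to look up its words instead of rescanning every document.

```javascript
// Split text into lowercase alphanumeric words (a deliberately naive tokenizer).
function tokenize(text) {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean);
}

// Build a Map from each word to the Set of document IDs that contain it.
function buildInvertedIndex(docs) {
  const index = new Map();
  for (const { id, body } of docs) {
    for (const word of tokenize(body)) {
      if (!index.has(word)) index.set(word, new Set());
      index.get(word).add(id);
    }
  }
  return index;
}

// Return the IDs of documents that contain every word of the query.
function searchAll(index, query) {
  const words = tokenize(query);
  if (words.length === 0) return [];
  let hits = null;
  for (const word of words) {
    const ids = index.get(word) || new Set();
    hits = hits === null
      ? new Set(ids)
      : new Set([...hits].filter((id) => ids.has(id)));
  }
  return [...hits];
}

// Hypothetical sample pages, using the same "timestamp-pageIndex" ID shape as later in the article.
const toyIndex = buildInvertedIndex([
  { id: "a-0", body: "TWAIN Linking Images With Applications" },
  { id: "a-1", body: "Remote scan from a web application" },
]);
console.log(searchAll(toyIndex, "remote scan"));
```

A real engine adds things this sketch leaves out, such as partial matching, scoring and compact storage, which is why we use FlexSearch below rather than rolling our own.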

In the previous article, we built a web application to scan documents and run OCR with Dynamic Web TWAIN and Tesseract.js. In this article, we are going to add full-text search to it with FlexSearch.

Install Dependencies

- Install FlexSearch.

npm install flexsearch

- Install localForage to save the index to IndexedDB.

npm install localforage

Create Index

The OCR results of document pages are saved using localForage with keys like the following, where the timestamp serves as the document ID:

timestamp+"-OCR-Data"

The OCR data is an object that holds the OCR results produced by Tesseract, keyed by page index.

{
  "0": {
    "jobId": "Job-3-86bc4",
    "data": {
      "text": "| 8.5\ni ‘ 11\nTWAIN\nLinking Images With Applications\n"
    }
  }
}
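To make the structure concrete, the following sketch turns one such OCR object into a flat list of page records, one per page, with IDs of the form timestamp + "-" + page index. The function name `ocrResultsToPages`, the sample timestamp and the `null` entry for a page without OCR results are assumptions made for illustration.

```javascript
// Hypothetical helper: convert one stored OCR object into { id, body } records.
function ocrResultsToPages(timestamp, resultsDict) {
  const pages = [];
  for (const pageIndex of Object.keys(resultsDict)) {
    const result = resultsDict[pageIndex];
    // Skip entries without usable OCR text (assumed to be possible for pages that were not recognized).
    if (result && result.data && typeof result.data.text === "string") {
      pages.push({ id: timestamp + "-" + pageIndex, body: result.data.text });
    }
  }
  return pages;
}

// Sample data shaped like the object above; the timestamp is made up.
const sampleOcrData = {
  "0": {
    jobId: "Job-3-86bc4",
    data: { text: "| 8.5\ni ‘ 11\nTWAIN\nLinking Images With Applications\n" },
  },
  "1": null,
};
const samplePages = ocrResultsToPages("1650000000000", sampleOcrData);
console.log(samplePages);
```

Each record carries an ID that can later be mapped back to a document and a page, which is what the search results will return.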

We can create an index from the OCR results with the following code:

import { Index } from "flexsearch";
import localForage from "localforage";

const documentIndex = new Index();
const keys = await localForage.keys();
const pages = []; // named "pages" so that the global "document" object is not shadowed
for (const key of keys) {
  if (key.indexOf("OCR-Data") != -1) { // OCR results are stored as timestamp+"-OCR-Data"
    const resultsDict = await localForage.getItem(key);
    const timestamp = key.split("-")[0];
    const pageIndices = Object.keys(resultsDict);
    for (const i of pageIndices) {
      const result = resultsDict[i];
      if (result) {
        const id = timestamp+"-"+i;
        pages.push({id:id,body:result.data.text}); // push document pages to an array first
      }
    }
  }
}

pages.forEach(({ id, body }) => {
  if (documentIndex.contain(id)) {
    documentIndex.remove(id); // remove previously added document pages
  }
  documentIndex.add(id, body); // add document pages to index
});

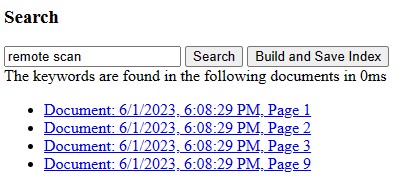

Perform Full-Text Search

Next, we can perform full-text search using the index created.

const query = "remote scan";
const results = documentIndex.search(query);
console.log(results); // output the IDs of document pages found.

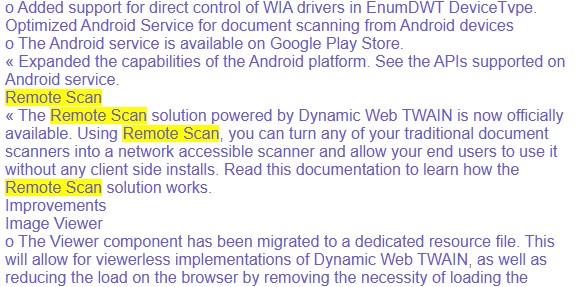
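Since the IDs returned by the search are the strings built during indexing (timestamp + "-" + page index), they need to be split again before the matching pages can be displayed. A minimal sketch, assuming that ID format; `parsePageId` and `groupByDocument` are hypothetical helper names and the sample IDs are made up:

```javascript
// Split an ID like "1650000000000-2" into its document timestamp and page index.
function parsePageId(id) {
  const separator = id.lastIndexOf("-");
  return {
    timestamp: id.slice(0, separator),
    pageIndex: Number(id.slice(separator + 1)),
  };
}

// Group page indices by document so that each document is listed once.
function groupByDocument(ids) {
  const grouped = {};
  for (const id of ids) {
    const { timestamp, pageIndex } = parsePageId(id);
    if (!grouped[timestamp]) grouped[timestamp] = [];
    grouped[timestamp].push(pageIndex);
  }
  return grouped;
}

// Made-up IDs in the same shape as the search results.
const sampleIds = ["1650000000000-0", "1650000000000-2", "1660000000000-1"];
const groupedResults = groupByDocument(sampleIds);
console.log(groupedResults);
```

The timestamp can then be used to load the document's pages from localForage, and the page indices to jump to the pages that matched.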

We can highlight all the matches with a yellow background. FlexSearch does not do this by default, so we have to do it ourselves.

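function highlightQuery(query){
  if (!query) return; // nothing to highlight
  const container = document.getElementsByClassName("text")[0];
  // Escape regex metacharacters so that the query is matched literally
  const escapedQuery = query.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  // Build a case-insensitive, global regex
  const regexForContent = new RegExp(escapedQuery, 'gi');
  // Wrap every match in a span styled by the "hightlighted" CSS class
  container.innerHTML = container.innerHTML.replace(regexForContent, "<span class='hightlighted'>$&</span>");
}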
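The function above highlights the query as one contiguous phrase. Because a query such as "remote scan" can also match pages where the two words are not adjacent, a variant that highlights each word separately may be useful. The following is a sketch of that idea, not part of the demo: it works on plain text rather than on innerHTML, escapes HTML so that OCR text containing "<" or "&" is rendered literally, and uses the hypothetical helper names `escapeRegExp`, `escapeHtml` and `highlightTerms`.

```javascript
// Escape characters that have a special meaning in regular expressions.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Escape characters that have a special meaning in HTML.
function escapeHtml(text) {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Return HTML in which every occurrence of any query word is wrapped in a span.
function highlightTerms(plainText, query) {
  const terms = query.split(/\s+/).filter(Boolean).map(escapeRegExp);
  if (terms.length === 0) return escapeHtml(plainText);
  const regex = new RegExp("(" + terms.join("|") + ")", "gi");
  // Splitting on a capturing group keeps the matches at the odd positions.
  return plainText
    .split(regex)
    .map((part, i) =>
      i % 2 === 1
        ? "<span class='hightlighted'>" + escapeHtml(part) + "</span>"
        : escapeHtml(part)
    )
    .join("");
}

const highlightedSample = highlightTerms("Remote <scan>", "remote scan");
console.log(highlightedSample);
```

The returned string could then be assigned to the innerHTML of the element that displays the OCR text.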

Export and Import of Index

We can export the index to persistent storage for future use.

- Export and save the index to IndexedDB.

let indexStore = localForage.createInstance({ name: "index" });

function SaveIndexToIndexedDB(){
  return new Promise(function(resolve){
    // export() invokes this callback once for each part of the index
    documentIndex.export(async function(key, data){
      // do the saving as async
      await indexStore.setItem(key, data);
      resolve(); // the promise settles as soon as the first part is stored
    });
  });
}

- Import the index from IndexedDB.

async function LoadIndexFromIndexedDB(){
  const keys = await indexStore.keys();
  for (const key of keys) {
    const content = await indexStore.getItem(key);
    documentIndex.import(key, content);
  }
}

Source Code

Check out the demo's source code to try it yourself:

https://github.com/tony-xlh/Full-Text-Search-of-Scanned-Documents