How to Build a Cross-platform Document Scanner App with Flutter

A document scanner app is a software application that uses your device’s camera to capture images of physical documents and convert them into digital formats. These apps typically support scanning documents, photos, receipts, business cards, and more. Document scanner apps are useful across various industries, including education, business, finance and healthcare. You may already be familiar with popular apps like Adobe Scan, CamScanner, and Microsoft Office Lens. The goal of this article is to guide you through building your own cross-platform document scanner app using Flutter and Dynamsoft Capture Vision. With a single codebase, you’ll be able to create an app that runs on Windows, Linux, Android, iOS and Web.

This article is Part 4 in a 4-Part Series.

- Part 1 - How to Build a Document Scanner Web App Using JavaScript and Flutter

- Part 2 - Building a Flutter Document Scanning Plugin for Windows and Linux

- Part 3 - How to Create a Flutter Document Scanning Plugin for Android and iOS

- Part 4 - How to Build a Cross-platform Document Scanner App with Flutter

Comparing Flutter MRZ Scanners, Barcode Scanners, and Document Scanners

In previous projects, we developed both a Flutter MRZ scanner app and a Flutter barcode scanner app. All three apps share similar Flutter plugins and structure, with the main difference being the SDK used for specific tasks:

- flutter_ocr_sdk for MRZ detection

- flutter_barcode_sdk for barcode scanning

- flutter_document_scan_sdk for document edge detection and perspective correction

Most of the UI code is reused across the apps. They all feature a tab bar for navigation between the home and history pages. The camera control logic is consistent as well.

The document scanner app, however, adds a dedicated editing page for adjusting the perspective of scanned documents. Once adjusted, the document is cropped and rectified on a saving page, which also includes enhancement filters such as grayscale, black & white, and color.

Required Flutter Plugins

- flutter_document_scan_sdk: Wraps Dynamsoft Capture Vision SDK for cross-platform document scanning. A license key is required.

- image_picker: Captures or selects images and videos.

- shared_preferences: Stores simple key-value pairs persistently.

- camera: Handles camera preview and image/video capture.

- share_plus: Enables platform share functionality.

- url_launcher: Opens URLs using the default browser or apps.

- flutter_exif_rotation: Corrects image orientation based on EXIF data.

- flutter_lite_camera: Provides camera access on Windows, Linux, and macOS.

Getting Started with the App

- Create a new Flutter project:

flutter create documentscanner

- Add dependencies in pubspec.yaml:

dependencies:
  flutter_document_scan_sdk: ^1.0.2
  image_picker: ^1.0.0
  shared_preferences: ^2.1.1
  camera:
    git:
      url: https://github.com/yushulx/flutter_camera.git
  flutter_lite_camera: ^0.0.1
  share_plus: ^7.0.2
  url_launcher: ^6.1.11
  flutter_exif_rotation: ^0.5.1

- Create a global.dart file for global variables:

import 'package:flutter_document_scan_sdk/flutter_document_scan_sdk.dart';

FlutterDocumentScanSdk docScanner = FlutterDocumentScanSdk();
bool isLicenseValid = false;

Future<int> initDocumentSDK() async {
  int? ret = await docScanner.init('LICENSE-KEY');
  if (ret == 0) isLicenseValid = true;
  return ret ?? -1;
}

- Replace the contents of lib/main.dart with the following code:

import 'package:flutter/material.dart';
import 'tab_page.dart';
import 'dart:async';
import 'global.dart';

Future<void> main() async {
  runApp(const MyApp());
}

class MyApp extends StatelessWidget {
  const MyApp({super.key});

  Future<int> loadData() async {
    return await initDocumentSDK();
  }

  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: 'Dynamsoft Barcode Detection',
      theme: ThemeData(
        scaffoldBackgroundColor: colorMainTheme,
      ),
      home: FutureBuilder<int>(
        future: loadData(),
        builder: (BuildContext context, AsyncSnapshot<int> snapshot) {
          if (!snapshot.hasData) {
            return const CircularProgressIndicator();
          }
          Future.microtask(() {
            Navigator.pushReplacement(context,
                MaterialPageRoute(builder: (context) => const TabPage()));
          });
          return Container();
        },
      ),
    );
  }
}

Building Document Scanner App Features

Refer to the UI design mockup:

In the following sections, we’ll explore the core features: edge detection, perspective correction, edge editing, and saving/exporting the rectified document.

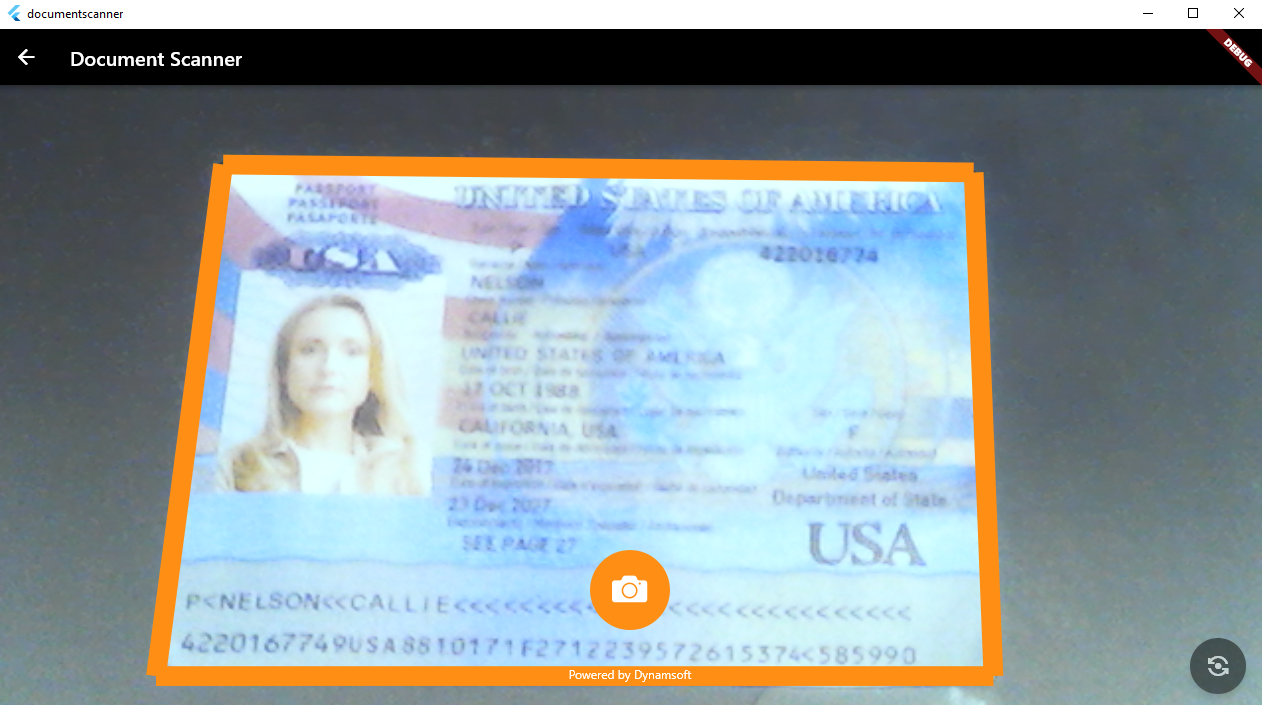

Document Edge Detection & Perspective Correction

The app supports two scanning methods: live detection from the camera stream and static image processing. Use detectBuffer() for video frames. For a selected image file, either pass the file path to detectFile(), or decode the file with decodeImageFromList() and pass the raw pixels to detectBuffer(). Both methods return a Future<List<DocumentResult>?> object.

// Image file
XFile? photo = await picker.pickImage(source: ImageSource.gallery);
if (photo == null) return; // The user cancelled the picker.

// detectFile(): pass the file path directly
var results = await docScanner.detectFile(photo.path);

// detectBuffer(): decode the file to raw RGBA pixels first
Uint8List fileBytes = await photo.readAsBytes();
ui.Image image = await decodeImageFromList(fileBytes);
ByteData? byteData =
    await image.toByteData(format: ui.ImageByteFormat.rawRgba);
if (byteData != null) {
  List<DocumentResult>? results = await docScanner.detectBuffer(
      byteData.buffer.asUint8List(),
      image.width,
      image.height,
      byteData.lengthInBytes ~/ image.height, // row stride in bytes
      ImagePixelFormat.IPF_ARGB_8888.index,
      ImageRotation.rotation0.value);
}

// Video streaming buffer
Future<void> processDocument(List<Uint8List> bytes, int width, int height,
    List<int> strides, int format, List<int> pixelStrides) async {
  int rotation = 0;
  // Await the call so that results holds the detections, not a Future.
  var results = await docScanner
      .detectBuffer(bytes[0], width, height, strides[0], format, rotation);
  ...
}
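The fourth argument passed to detectBuffer() above is the row stride, that is, the number of bytes in one row of pixels. For a tightly packed RGBA buffer this is the width times 4, which is what byteData.lengthInBytes ~/ image.height works out to. The following is a minimal sketch of that arithmetic in plain Dart; rowStride and expectedStride are hypothetical helper names, and the sketch assumes the buffer has no row padding.

```dart
/// Bytes per row of a tightly packed pixel buffer (no row padding assumed).
/// Throws if the buffer length is not a whole number of rows.
int rowStride(int lengthInBytes, int height) {
  if (height <= 0) {
    throw ArgumentError.value(height, 'height', 'must be positive');
  }
  if (lengthInBytes % height != 0) {
    throw ArgumentError(
        'buffer length $lengthInBytes is not a multiple of height $height');
  }
  return lengthInBytes ~/ height;
}

/// Expected stride for a given width and bytes per pixel (4 for RGBA8888).
int expectedStride(int width, {int bytesPerPixel = 4}) => width * bytesPerPixel;
```

Comparing rowStride(byteData.lengthInBytes, image.height) with expectedStride(image.width) before calling detectBuffer() is a cheap way to catch a buffer whose format is not what the call assumes.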

The DocumentResult object contains the following properties:

- List<Offset> points: The coordinates of the quadrilateral.

- int confidence: The confidence of the result.
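Because detection returns a nullable list, callers need to handle the null and empty cases before picking a result. The sketch below shows one possible way to select the most confident detection. Detection is a hypothetical stand-in for DocumentResult that uses Point<double> from dart:math instead of Offset, so the snippet runs without Flutter; bestDetection is also a hypothetical helper name.

```dart
import 'dart:math';

/// Hypothetical stand-in for the plugin's DocumentResult, so the sketch runs
/// without Flutter. The real class stores its corners as List<Offset>.
class Detection {
  final List<Point<double>> points;
  final int confidence;
  const Detection(this.points, this.confidence);
}

/// Returns the detection with the highest confidence, or null when the
/// list is null or empty (for example, when no document was found).
Detection? bestDetection(List<Detection>? results) {
  if (results == null || results.isEmpty) return null;
  return results.reduce((a, b) => b.confidence > a.confidence ? b : a);
}
```

When two detections have the same confidence, this version keeps the one that appears first in the list.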

Once edges are detected, use normalizeFile() or normalizeBuffer() to crop and correct the document’s perspective.

// File
var normalizedImage = await docScanner.normalizeFile(file, points, ColorMode.COLOR);

// Buffer
Future<void> handleDocument(Uint8List bytes, int width, int height, int stride,
    int format, dynamic points) async {
  // Await the call so that normalizedImage holds the result, not a Future.
  var normalizedImage = await docScanner
      .normalizeBuffer(bytes, width, height, stride, format, points,
          ImageRotation.rotation90.value, ColorMode.COLOR);
}

Both methods return a NormalizedImage object that represents the rectified image:

class NormalizedImage {
  /// Image data.
  final Uint8List data;

  /// Image width.
  final int width;

  /// Image height.
  final int height;

  NormalizedImage(this.data, this.width, this.height);
}

To display the image, decode Uint8List data to a ui.Image object with the decodeImageFromPixels() method:

decodeImageFromPixels(normalizedImage.data, normalizedImage.width,
    normalizedImage.height, pixelFormat, (ui.Image img) {
  // Use the decoded image here, for example by storing it in widget state.
});
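decodeImageFromPixels() delivers its result through a callback rather than returning a Future. One common way to make a callback-style API awaitable is a Completer. The following is a minimal sketch of that pattern; DecodedImage and fakeDecodeFromPixels are hypothetical stand-ins (not part of Flutter or the plugin) so that the snippet runs with plain Dart. In an app, the same wrapper shape would call decodeImageFromPixels() and complete with the ui.Image.

```dart
import 'dart:async';
import 'dart:typed_data';

/// Hypothetical stand-in for ui.Image so the sketch runs without Flutter.
class DecodedImage {
  final int width;
  final int height;
  const DecodedImage(this.width, this.height);
}

/// Hypothetical stand-in for decodeImageFromPixels(): it delivers the
/// decoded image through [callback] instead of returning it.
void fakeDecodeFromPixels(Uint8List pixels, int width, int height,
    void Function(DecodedImage image) callback) {
  // Simulate asynchronous completion on a later microtask.
  scheduleMicrotask(() => callback(DecodedImage(width, height)));
}

/// Adapts the callback API to a Future so callers can simply await it.
Future<DecodedImage> decodePixels(Uint8List pixels, int width, int height) {
  final completer = Completer<DecodedImage>();
  fakeDecodeFromPixels(pixels, width, height, completer.complete);
  return completer.future;
}
```

With a wrapper like this, the calling code can be written as final img = await decodePixels(data, width, height); instead of nesting the follow-up logic inside the callback.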

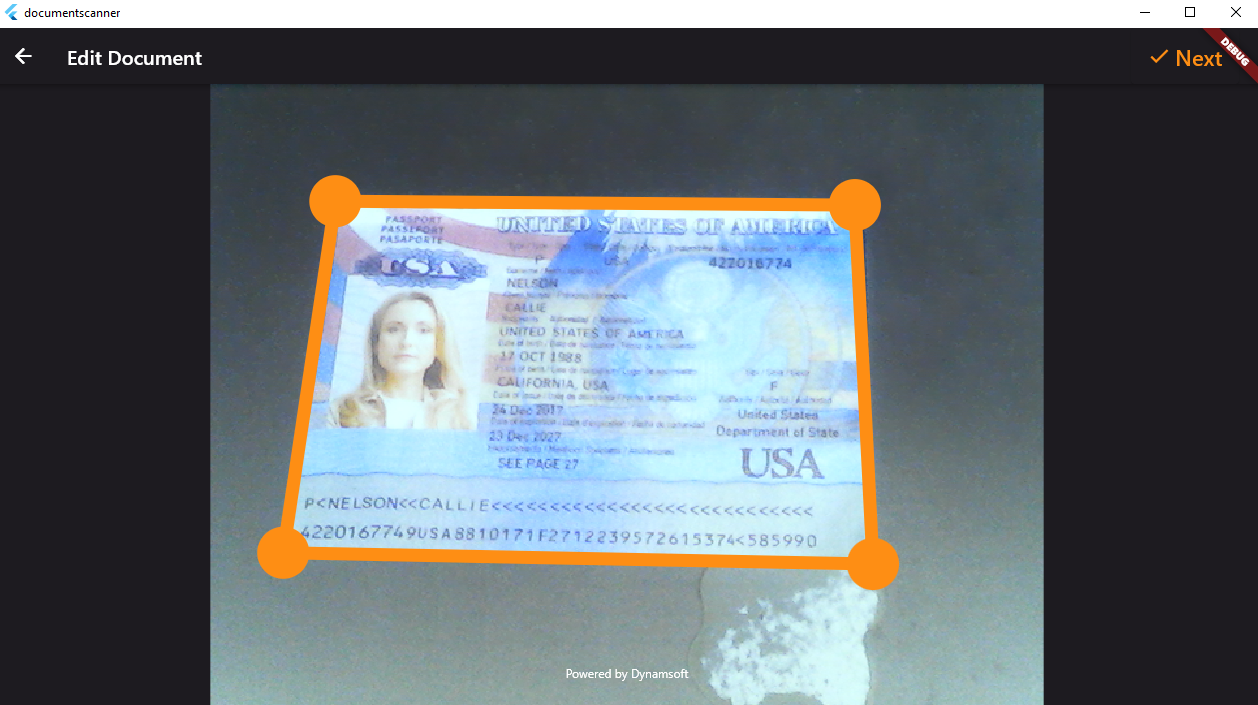

Edge Editing Page

Since auto-detection may not be perfect, an editing interface allows users to manually adjust the document’s quadrilateral. The UI uses CustomPaint, GestureDetector, and Stack to handle rendering and user interaction.

class OverlayPainter extends CustomPainter {
  ui.Image? image;
  List<DocumentResult>? results;

  OverlayPainter(this.image, this.results);

  @override
  void paint(Canvas canvas, Size size) {
    final paint = Paint()
      ..color = colorOrange
      ..strokeWidth = 10
      ..style = PaintingStyle.stroke;

    if (image != null) {
      canvas.drawImage(image!, Offset.zero, paint);
    }

    Paint circlePaint = Paint()
      ..color = colorOrange
      ..strokeWidth = 20
      ..style = PaintingStyle.fill;

    if (results == null) return;

    for (var result in results!) {
      canvas.drawLine(result.points[0], result.points[1], paint);
      canvas.drawLine(result.points[1], result.points[2], paint);
      canvas.drawLine(result.points[2], result.points[3], paint);
      canvas.drawLine(result.points[3], result.points[0], paint);

      if (image != null) {
        double radius = 20;
        canvas.drawCircle(result.points[0], radius, circlePaint);
        canvas.drawCircle(result.points[1], radius, circlePaint);
        canvas.drawCircle(result.points[2], radius, circlePaint);
        canvas.drawCircle(result.points[3], radius, circlePaint);
      }
    }
  }

  @override
  bool shouldRepaint(OverlayPainter oldDelegate) => true;
}

Widget createCustomImage() {
  var image = widget.documentData.image;
  // Assert non-null here so the list can be used inside the gesture callback.
  var detectionResults = widget.documentData.documentResults!;

  return FittedBox(
      fit: BoxFit.contain,
      child: SizedBox(
          width: image!.width.toDouble(),
          height: image.height.toDouble(),
          child: GestureDetector(
            onPanUpdate: (details) {
              // Ignore drags outside the image bounds.
              if (details.localPosition.dx < 0 ||
                  details.localPosition.dy < 0 ||
                  details.localPosition.dx > image.width ||
                  details.localPosition.dy > image.height) {
                return;
              }

              for (int i = 0; i < detectionResults.length; i++) {
                for (int j = 0; j < detectionResults[i].points.length; j++) {
                  // Pick up a corner within 100 pixels of the touch point.
                  if ((detectionResults[i].points[j] - details.localPosition)
                          .distance <
                      100) {
                    bool isCollided = false;
                    // Check each of the other three corners, not only the next one.
                    for (int index = 1; index < 4; index++) {
                      int otherIndex = (j + index) % 4;
                      if ((detectionResults[i].points[otherIndex] -
                                  details.localPosition)
                              .distance <
                          20) {
                        isCollided = true;
                        return;
                      }
                    }

                    setState(() {
                      if (!isCollided) {
                        detectionResults[i].points[j] = details.localPosition;
                      }
                    });
                  }
                }
              }
            },
            child: CustomPaint(
              painter: OverlayPainter(image, detectionResults),
            ),
          )));
}

body: Stack(
  children: <Widget>[
    Positioned.fill(
      child: createCustomImage(),
    ),
    const Positioned(
      left: 122,
      right: 122,
      bottom: 28,
      child: Text('Powered by Dynamsoft',
          textAlign: TextAlign.center,
          style: TextStyle(
            fontSize: 12,
            color: Colors.white,
          )),
    ),
  ],
),
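The drag handling on this page comes down to two checks: find the corner nearest to the touch point, then refuse the move if it would land on top of another corner. The following is a minimal sketch of those two checks in plain Dart. It assumes Point<double> from dart:math as a stand-in for Offset so that it runs without Flutter; nearestCorner and moveCorner are hypothetical helper names, and the default thresholds of 100 and 20 pixels mirror the values used in the gesture handler.

```dart
import 'dart:math';

/// Index of the corner closest to [touch] within [grabRadius], or -1 when
/// no corner is close enough to be picked up.
int nearestCorner(List<Point<double>> corners, Point<double> touch,
    {double grabRadius = 100}) {
  var best = -1;
  var bestDistance = grabRadius;
  for (var i = 0; i < corners.length; i++) {
    final d = corners[i].distanceTo(touch);
    if (d < bestDistance) {
      bestDistance = d;
      best = i;
    }
  }
  return best;
}

/// Moves corner [index] to [touch] unless that would place it within
/// [minGap] of any other corner. Returns true when the corner moved.
bool moveCorner(List<Point<double>> corners, int index, Point<double> touch,
    {double minGap = 20}) {
  for (var step = 1; step < corners.length; step++) {
    final other = corners[(index + step) % corners.length];
    if (other.distanceTo(touch) < minGap) return false;
  }
  corners[index] = touch;
  return true;
}
```

Keeping the geometry in small functions like these makes it testable without a widget tree; the onPanUpdate callback would then only convert details.localPosition and call setState() when moveCorner returns true.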

Document Rectification & Saving Page

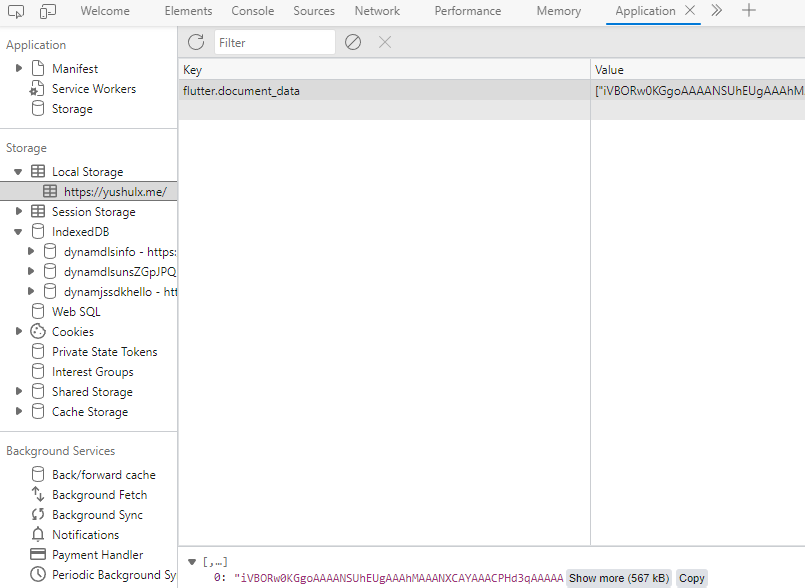

This page lets users view the final rectified image, apply filters (e.g., grayscale, binary, color), and save the output. Users can select the filter via a radio group and save the image as a base64 string using shared_preferences.

Widget createCustomImage(BuildContext context, ui.Image image,
    List<DocumentResult> detectionResults) {
  return FittedBox(
      fit: BoxFit.contain,
      child: SizedBox(
          width: image.width.toDouble(),
          height: image.height.toDouble(),
          child: CustomPaint(
            painter: OverlayPainter(image, detectionResults),
          )));
}

<Widget>[
  Theme(
    data: Theme.of(context).copyWith(
      unselectedWidgetColor: Colors.white, // Color when unselected
    ),
    child: Radio(
      activeColor: colorOrange,
      value: 'binary',
      groupValue: _pixelFormat,
      onChanged: (String? value) async {
        setState(() {
          _pixelFormat = value!;
        });

        await docScanner.setParameters(Template.binary);

        if (widget.documentData.documentResults!.isNotEmpty) {
          await normalizeBuffer(widget.documentData.image!,
              widget.documentData.documentResults![0].points);
        }
      },
    ),
  ),
  const Text('Binary', style: TextStyle(color: Colors.white)),
  Theme(
      data: Theme.of(context).copyWith(
        unselectedWidgetColor: Colors.white, // Color when unselected
      ),
      child: Radio(
        activeColor: colorOrange,
        value: 'grayscale',
        groupValue: _pixelFormat,
        onChanged: (String? value) async {
          setState(() {
            _pixelFormat = value!;
          });

          await docScanner.setParameters(Template.grayscale);

          if (widget.documentData.documentResults!.isNotEmpty) {
            await normalizeBuffer(widget.documentData.image!,
                widget.documentData.documentResults![0].points);
          }
        },
      )),
  const Text('Gray', style: TextStyle(color: Colors.white)),
  Theme(
      data: Theme.of(context).copyWith(
        unselectedWidgetColor: Colors.white, // Color when unselected
      ),
      child: Radio(
        activeColor: colorOrange,
        value: 'color',
        groupValue: _pixelFormat,
        onChanged: (String? value) async {
          setState(() {
            _pixelFormat = value!;
          });

          await docScanner.setParameters(Template.color);

          if (widget.documentData.documentResults!.isNotEmpty) {
            await normalizeBuffer(widget.documentData.image!,
                widget.documentData.documentResults![0].points);
          }
        },
      )),
  const Text('Color', style: TextStyle(color: Colors.white)),
]

ElevatedButton(
  onPressed: () async {
    String imageString =
        await convertImagetoPngBase64(normalizedUiImage!);

    final SharedPreferences prefs =
        await SharedPreferences.getInstance();
    var results = prefs.getStringList('document_data');
    List<String> imageList = <String>[];
    imageList.add(imageString);

    // Wait for the write to finish before closing the page.
    if (results == null) {
      await prefs.setStringList('document_data', imageList);
    } else {
      results.addAll(imageList);
      await prefs.setStringList('document_data', results);
    }

    close();
  },
  style: ButtonStyle(
      backgroundColor: MaterialStateProperty.all(colorMainTheme)),
  child: Text('Save',
      style: TextStyle(color: colorOrange, fontSize: 22)),
)
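The save handler stores each image as a base64 string appended to a list of strings. The encoding and list handling can be sketched in plain Dart with dart:convert, independent of shared_preferences. appendEncoded and decodeAll are hypothetical helper names used only for illustration.

```dart
import 'dart:convert';
import 'dart:typed_data';

/// Returns a new history list with [imageBytes] appended as a base64 string.
/// [existing] may be null, as with a key that has never been written.
List<String> appendEncoded(List<String>? existing, Uint8List imageBytes) {
  return [...?existing, base64Encode(imageBytes)];
}

/// Decodes every stored entry back to raw bytes, for example to render
/// the saved documents on a history page.
List<Uint8List> decodeAll(List<String> stored) =>
    stored.map(base64Decode).toList();
```

Returning a new list instead of mutating the stored one keeps the null and non-null cases on a single code path, so the caller only needs one setStringList() call.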

Known Issues on Flutter Web

⚠️ Web Local Storage Size Limitation

When saving images using shared_preferences on the web, data is stored in the browser’s local storage, which is typically limited to about 5MB per origin. Storing large images, or many base64-encoded images, can exceed that limit and result in failed saves, app crashes, or other unexpected behavior.
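Base64 encoding inflates data by roughly a third (every 3 bytes become 4 characters), so the limit is reached sooner than the raw image sizes suggest. The sketch below estimates the encoded size before saving. base64Length and fitsInBudget are hypothetical helper names, and the default budget of 5 * 1024 * 1024 characters is an assumption: actual quotas vary by browser, and the estimate ignores key names and any overhead added by the storage layer.

```dart
/// Length in characters of the padded base64 encoding of [byteCount] bytes.
int base64Length(int byteCount) => ((byteCount + 2) ~/ 3) * 4;

/// True when adding an image of [newImageBytes] keeps the total stored
/// characters within [budgetChars]. The default budget is an assumption;
/// actual quotas vary by browser.
bool fitsInBudget(int storedChars, int newImageBytes,
    {int budgetChars = 5 * 1024 * 1024}) {
  return storedChars + base64Length(newImageBytes) <= budgetChars;
}
```

Under these assumptions a single 4MB PNG would already exceed the budget once encoded, so a web build may want to downscale images or skip saving when fitsInBudget() returns false.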

Source Code

https://github.com/yushulx/flutter_document_scan_sdk/tree/main/example