How to Create a Flutter Document Scanning Plugin for Android and iOS

Previously, we created a Flutter document scanning plugin for web, Windows, and Linux. In this article, we’ll add support for Android and iOS, enabling you to build a cross-platform document scanning app that corrects the perspective of captured documents on all major platforms.

Demo Video: Flutter Document Scanner for iOS

This article is Part 3 in a 4-Part Series.

- Part 1 - How to Build a Document Scanner Web App Using JavaScript and Flutter

- Part 2 - Building a Flutter Document Scanning Plugin for Windows and Linux

- Part 3 - How to Create a Flutter Document Scanning Plugin for Android and iOS

- Part 4 - How to Build a Cross-platform Document Scanner App with Flutter

Flutter Document Rectification SDK

https://pub.dev/packages/flutter_document_scan_sdk

Adding Android and iOS Support to the Flutter Plugin

To add support for Android and iOS, run the following command in the root directory of your plugin project:

```bash
flutter create --org com.dynamsoft --template=plugin --platforms=android,ios .
```

After generating the platform-specific code, update the pubspec.yaml file to register each platform implementation:

```yaml
flutter:
  plugin:
    platforms:
      android:
        package: com.dynamsoft.flutter_document_scan_sdk
        pluginClass: FlutterDocumentScanSdkPlugin
      ios:
        pluginClass: FlutterDocumentScanSdkPlugin
      linux:
        pluginClass: FlutterDocumentScanSdkPlugin
      windows:
        pluginClass: FlutterDocumentScanSdkPluginCApi
      web:
        pluginClass: FlutterDocumentScanSdkWeb
        fileName: flutter_document_scan_sdk_web.dart
```

Since the Dart APIs remain unchanged, you only need to implement the native platform logic for Android and iOS.

Linking Third-party Libraries for Android and iOS

Configuring the Dynamsoft Capture Vision SDK with Gradle and CocoaPods

For Android, modify android/build.gradle to include the Dynamsoft Maven repository and dependency.

```groovy
rootProject.allprojects {
    repositories {
        maven {
            url "https://download2.dynamsoft.com/maven/aar"
        }
        google()
        mavenCentral()
    }
}

dependencies {
    implementation "com.dynamsoft:capturevisionbundle:3.0.3000"
}
```

For iOS, add the appropriate dependency to ios/flutter_document_scan_sdk.podspec.

```ruby
s.dependency 'DynamsoftCaptureVisionBundle', '3.0.3000'
```

Writing Platform-specific Code in Java and Swift

We write Java code in the FlutterDocumentScanSdkPlugin.java file and Swift code in the SwiftFlutterDocumentScanSdkPlugin.swift file.

- Import the necessary SDK classes for both platforms.

Android

```java
import android.app.Activity;
import android.graphics.Point;

import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import io.flutter.embedding.engine.plugins.activity.ActivityAware;
import io.flutter.embedding.engine.plugins.activity.ActivityPluginBinding;

import com.dynamsoft.cvr.EnumPresetTemplate;
import com.dynamsoft.cvr.CapturedResult;
import com.dynamsoft.cvr.CaptureVisionRouter;
import com.dynamsoft.cvr.SimplifiedCaptureVisionSettings;
import com.dynamsoft.cvr.CaptureVisionRouterException;
import com.dynamsoft.license.LicenseManager;
import com.dynamsoft.core.basic_structures.CapturedResultItem;
import com.dynamsoft.core.basic_structures.ImageData;
import com.dynamsoft.core.basic_structures.Quadrilateral;
import com.dynamsoft.core.basic_structures.EnumImagePixelFormat;
import com.dynamsoft.ddn.NormalizedImageResultItem;
import com.dynamsoft.ddn.DetectedQuadResultItem;
import com.dynamsoft.ddn.NormalizedImagesResult;
import com.dynamsoft.ddn.DetectedQuadsResult;
import com.dynamsoft.ddn.EnumImageColourMode;
```

iOS

```swift
import DynamsoftCaptureVisionBundle
```

- Create an instance of `CaptureVisionRouter` to manage scanning operations.

Android

```java
private CaptureVisionRouter mRouter;

// Lazily create the router the first time it is needed.
private void checkInstance() {
    if (mRouter == null) {
        mRouter = new CaptureVisionRouter(activity);
    }
}
```

iOS

```swift
let cvr = CaptureVisionRouter()
```

- Initialize the SDK with a license key using `LicenseManager`.

Android

```java
LicenseManager.initLicense(license, activity, (isSuccess, error) -> {
    // Report 0 on success and -1 on failure back to Dart.
    if (!isSuccess) {
        result.success(-1);
    } else {
        result.success(0);
    }
});
```

The `initLicense()` method requires an `Activity` as its second parameter. To obtain the `Activity` in a Flutter Android plugin, the `FlutterDocumentScanSdkPlugin` class implements the `ActivityAware` interface: `onAttachedToActivity()` is called when the Activity becomes available, and `onDetachedFromActivity()` is called when it is no longer accessible. The interface also declares the two configuration-change callbacks, so they must be implemented as well.

```java
public class FlutterDocumentScanSdkPlugin implements FlutterPlugin, MethodCallHandler, ActivityAware {
    private Activity activity;

    private void bind(ActivityPluginBinding activityPluginBinding) {
        activity = activityPluginBinding.getActivity();
    }

    @Override
    public void onAttachedToActivity(ActivityPluginBinding activityPluginBinding) {
        bind(activityPluginBinding);
    }

    @Override
    public void onReattachedToActivityForConfigChanges(ActivityPluginBinding activityPluginBinding) {
        bind(activityPluginBinding);
    }

    @Override
    public void onDetachedFromActivityForConfigChanges() {
        activity = null;
    }

    @Override
    public void onDetachedFromActivity() {
        activity = null;
    }

    // onAttachedToEngine(), onDetachedFromEngine() and onMethodCall() omitted.
}
```

iOS

```swift
public class SwiftFlutterDocumentScanSdkPlugin: NSObject, FlutterPlugin, LicenseVerificationListener {
    ...
    LicenseManager.initLicense(license, verificationDelegate: self)
    ...

    public func onLicenseVerified(_ isSuccess: Bool, error: Error?) {
        // Complete the pending Dart call with 0 on success, -1 on failure.
        if isSuccess {
            completionHandlers.first?(0)
        } else {
            completionHandlers.first?(-1)
        }
    }
}
```

- Capture and detect document edges using the `capture()` method, then convert the result into a Dart-compatible structure.

Android

```java
List<Map<String, Object>> createContourList(CapturedResult result) {
    List<Map<String, Object>> out = new ArrayList<>();
    if (result != null && result.getItems().length > 0) {
        CapturedResultItem[] items = result.getItems();
        for (CapturedResultItem item : items) {
            if (item instanceof DetectedQuadResultItem) {
                DetectedQuadResultItem quadItem = (DetectedQuadResultItem) item;
                int confidence = quadItem.getConfidenceAsDocumentBoundary();
                Point[] points = quadItem.getLocation().points;

                // One map per quadrilateral: confidence plus corners x1/y1 ... x4/y4.
                Map<String, Object> map = new HashMap<>();
                map.put("confidence", confidence);
                for (int i = 0; i < 4; i++) {
                    map.put("x" + (i + 1), points[i].x);
                    map.put("y" + (i + 1), points[i].y);
                }
                out.add(map);
            }
        }
    }
    return out;
}

final byte[] bytes = call.argument("bytes");
final int width = call.argument("width");
final int height = call.argument("height");
final int stride = call.argument("stride");
final int format = call.argument("format");
final int rotation = call.argument("rotation");

ImageData imageData = new ImageData();
imageData.bytes = bytes;
imageData.width = width;
imageData.height = height;
imageData.stride = stride;
imageData.format = format;
imageData.orientation = rotation;

CapturedResult results = mRouter.capture(imageData, EnumPresetTemplate.PT_DETECT_DOCUMENT_BOUNDARIES);
List<Map<String, Object>> tmp = createContourList(results);
```

iOS

```swift
public func createContourList(_ result: CapturedResult) -> NSMutableArray {
    let out = NSMutableArray()
    // Only the first result item is converted here; the Android version converts every detected quad.
    if let item = result.items?.first, item.type == .detectedQuad {
        let detectedItem: DetectedQuadResultItem = item as! DetectedQuadResultItem
        let dictionary = NSMutableDictionary()
        let confidence = detectedItem.confidenceAsDocumentBoundary
        let points = detectedItem.location.points as! [CGPoint]
        dictionary.setObject(confidence, forKey: "confidence" as NSCopying)
        dictionary.setObject(Int(points[0].x), forKey: "x1" as NSCopying)
        dictionary.setObject(Int(points[0].y), forKey: "y1" as NSCopying)
        dictionary.setObject(Int(points[1].x), forKey: "x2" as NSCopying)
        dictionary.setObject(Int(points[1].y), forKey: "y2" as NSCopying)
        dictionary.setObject(Int(points[2].x), forKey: "x3" as NSCopying)
        dictionary.setObject(Int(points[2].y), forKey: "y3" as NSCopying)
        dictionary.setObject(Int(points[3].x), forKey: "x4" as NSCopying)
        dictionary.setObject(Int(points[3].y), forKey: "y4" as NSCopying)
        out.add(dictionary)
    }
    return out
}

let arguments: NSDictionary = call.arguments as! NSDictionary
let buffer: FlutterStandardTypedData = arguments.value(forKey: "bytes") as! FlutterStandardTypedData
let width: Int = arguments.value(forKey: "width") as! Int
let height: Int = arguments.value(forKey: "height") as! Int
let stride: Int = arguments.value(forKey: "stride") as! Int
let format: Int = arguments.value(forKey: "format") as! Int
let rotation: Int = arguments.value(forKey: "rotation") as! Int
let enumImagePixelFormat = ImagePixelFormat(rawValue: format)

let imageData = ImageData.init()
imageData.bytes = buffer.data
imageData.width = UInt(width)
imageData.height = UInt(height)
imageData.stride = UInt(stride)
imageData.format = enumImagePixelFormat!
imageData.orientation = rotation

let detectedResults = self.cvr.captureFromBuffer(imageData, templateName: "DetectDocumentBoundaries_Default")
let out = self.createContourList(detectedResults)
```

- Normalize the document using the detected corner coordinates to perform perspective correction.

Android

```java
Map<String, Object> map = new HashMap<>();
final byte[] bytes = call.argument("bytes");
final int width = call.argument("width");
final int height = call.argument("height");
final int stride = call.argument("stride");
final int format = call.argument("format");
final int x1 = call.argument("x1");
final int y1 = call.argument("y1");
final int x2 = call.argument("x2");
final int y2 = call.argument("y2");
final int x3 = call.argument("x3");
final int y3 = call.argument("y3");
final int x4 = call.argument("x4");
final int y4 = call.argument("y4");
final int rotation = call.argument("rotation");
final int mode = call.argument("color");

ImageData buffer = new ImageData();
buffer.bytes = bytes;
buffer.width = width;
buffer.height = height;
buffer.stride = stride;
buffer.format = format;
buffer.orientation = rotation;

try {
    // Use the four corners as the region of interest, measured in pixels.
    Quadrilateral quad = new Quadrilateral();
    quad.points = new Point[4];
    quad.points[0] = new Point(x1, y1);
    quad.points[1] = new Point(x2, y2);
    quad.points[2] = new Point(x3, y3);
    quad.points[3] = new Point(x4, y4);

    SimplifiedCaptureVisionSettings settings = mRouter.getSimplifiedSettings(EnumPresetTemplate.PT_NORMALIZE_DOCUMENT);
    settings.roi = quad;
    settings.roiMeasuredInPercentage = false;
    settings.documentSettings.colourMode = mode;
    mRouter.updateSettings(EnumPresetTemplate.PT_NORMALIZE_DOCUMENT, settings);

    CapturedResult data = mRouter.capture(buffer, EnumPresetTemplate.PT_NORMALIZE_DOCUMENT);
    map = createNormalizedImage(data);
} catch (CaptureVisionRouterException e) {
    e.printStackTrace();
}
result.success(map);
```

iOS

```swift
let arguments: NSDictionary = call.arguments as! NSDictionary
let buffer: FlutterStandardTypedData = arguments.value(forKey: "bytes") as! FlutterStandardTypedData
let width: Int = arguments.value(forKey: "width") as! Int
let height: Int = arguments.value(forKey: "height") as! Int
let stride: Int = arguments.value(forKey: "stride") as! Int
let format: Int = arguments.value(forKey: "format") as! Int
let enumImagePixelFormat = ImagePixelFormat(rawValue: format)
let x1: Int = arguments.value(forKey: "x1") as! Int
let y1: Int = arguments.value(forKey: "y1") as! Int
let x2: Int = arguments.value(forKey: "x2") as! Int
let y2: Int = arguments.value(forKey: "y2") as! Int
let x3: Int = arguments.value(forKey: "x3") as! Int
let y3: Int = arguments.value(forKey: "y3") as! Int
let x4: Int = arguments.value(forKey: "x4") as! Int
let y4: Int = arguments.value(forKey: "y4") as! Int
let rotation: Int = arguments.value(forKey: "rotation") as! Int
let colorMode: Int = arguments.value(forKey: "color") as! Int

let imageData = ImageData()
imageData.bytes = buffer.data
imageData.width = UInt(width)
imageData.height = UInt(height)
imageData.stride = UInt(stride)
imageData.format = enumImagePixelFormat!
imageData.orientation = rotation

let points = [
    CGPoint(x: x1, y: y1),
    CGPoint(x: x2, y: y2),
    CGPoint(x: x3, y: y3),
    CGPoint(x: x4, y: y4),
]
let quad = Quadrilateral.init(pointArray: points)

// Map the integer sent from Dart to a colour mode; unknown values fall back to colour.
var mode = ImageColourMode.colour
switch colorMode {
case 0:
    mode = ImageColourMode.colour
case 1:
    mode = ImageColourMode.grayscale
case 2:
    mode = ImageColourMode.binary
default:
    mode = ImageColourMode.colour
}

if let settings = try? self.cvr.getSimplifiedSettings("NormalizeDocument_Default") {
    settings.documentSettings?.colourMode = mode
    settings.roi = quad
    settings.roiMeasuredInPercentage = false
    try? self.cvr.updateSettings("NormalizeDocument_Default", settings: settings)
}

// Normalize off the main thread, then reply to Flutter on the main thread.
DispatchQueue.global().async {
    let normalizedResults = self.cvr.captureFromBuffer(imageData, templateName: "NormalizeDocument_Default")
    let dictionary = self.createNormalizedImage(normalizedResults)
    DispatchQueue.main.async {
        result(dictionary)
    }
}
```

- Convert the output image (RGB, grayscale, or binary) to RGBA so that Flutter can render it.

Android

```java
Map<String, Object> createNormalizedImage(CapturedResult result) {
    NormalizedImagesResult normalizedImageResult = result.getNormalizedImagesResult();
    Map<String, Object> map = new HashMap<>();
    if (normalizedImageResult.getItems().length > 0) {
        NormalizedImageResultItem item = normalizedImageResult.getItems()[0];
        ImageData imageData = item.getImageData();
        int width = imageData.width;
        int height = imageData.height;
        int stride = imageData.stride;
        int format = imageData.format;
        byte[] data = imageData.bytes;
        int length = data.length;
        int orientation = imageData.orientation;

        map.put("width", width);
        map.put("height", height);
        map.put("stride", stride);
        map.put("format", format);
        map.put("orientation", orientation);
        map.put("length", length);

        byte[] rgba = new byte[width * height * 4];
        if (format == EnumImagePixelFormat.IPF_RGB_888) {
            for (int i = 0; i < height; i++) {
                for (int j = 0; j < width; j++) {
                    int index = i * width + j;
                    // Rows may be padded, so address source rows by stride.
                    int dataIndex = i * stride + j * 3;
                    rgba[index * 4] = data[dataIndex];         // red
                    rgba[index * 4 + 1] = data[dataIndex + 1]; // green
                    rgba[index * 4 + 2] = data[dataIndex + 2]; // blue
                    rgba[index * 4 + 3] = (byte) 255;          // alpha
                }
            }
        } else if (format == EnumImagePixelFormat.IPF_GRAYSCALED
                || format == EnumImagePixelFormat.IPF_BINARY_8_INVERTED
                || format == EnumImagePixelFormat.IPF_BINARY_8) {
            for (int i = 0; i < height; i++) {
                for (int j = 0; j < width; j++) {
                    int index = i * width + j;
                    byte value = data[i * stride + j]; // one byte per pixel
                    rgba[index * 4] = value;
                    rgba[index * 4 + 1] = value;
                    rgba[index * 4 + 2] = value;
                    rgba[index * 4 + 3] = (byte) 255;
                }
            }
        } else if (format == EnumImagePixelFormat.IPF_BINARY) {
            // Unpack 1-bit pixels into one byte per pixel first.
            byte[] grayscale = new byte[width * height];
            binary2grayscale(data, grayscale, width, height, stride, length);
            for (int index = 0; index < width * height; index++) {
                rgba[index * 4] = grayscale[index];
                rgba[index * 4 + 1] = grayscale[index];
                rgba[index * 4 + 2] = grayscale[index];
                rgba[index * 4 + 3] = (byte) 255;
            }
        }
        map.put("data", rgba);
    }
    return map;
}
```

iOS

```swift
public func createNormalizedImage(_ result: CapturedResult) -> NSMutableDictionary {
    let dictionary = NSMutableDictionary()
    if let item = result.items?.first, item.type == .normalizedImage {
        let imageItem: NormalizedImageResultItem = item as! NormalizedImageResultItem
        let imageData = imageItem.imageData
        let width = imageData!.width
        let height = imageData!.height
        let stride = imageData!.stride
        let format = imageData!.format
        let data = imageData!.bytes
        let length = data.count
        let orientation = imageData!.orientation
        dictionary.setObject(width, forKey: "width" as NSCopying)
        dictionary.setObject(height, forKey: "height" as NSCopying)
        dictionary.setObject(stride, forKey: "stride" as NSCopying)
        dictionary.setObject(format.rawValue, forKey: "format" as NSCopying)
        dictionary.setObject(orientation, forKey: "orientation" as NSCopying)
        dictionary.setObject(length, forKey: "length" as NSCopying)

        var rgba: [UInt8] = [UInt8](repeating: 0, count: Int(width * height) * 4)
        if format == ImagePixelFormat.RGB888 {
            for i in 0..<height {
                for j in 0..<width {
                    let index = Int(i * width + j)
                    // Rows may be padded, so address source rows by stride.
                    let dataIndex = Int(i * stride + j * 3)
                    rgba[index * 4] = data[dataIndex]         // red
                    rgba[index * 4 + 1] = data[dataIndex + 1] // green
                    rgba[index * 4 + 2] = data[dataIndex + 2] // blue
                    rgba[index * 4 + 3] = 255                 // alpha
                }
            }
        } else if format == ImagePixelFormat.grayScaled || format == ImagePixelFormat.binaryInverted || format == ImagePixelFormat.binary8 {
            for i in 0..<height {
                for j in 0..<width {
                    let index = Int(i * width + j)
                    let value = data[Int(i * stride + j)] // one byte per pixel
                    rgba[index * 4] = value
                    rgba[index * 4 + 1] = value
                    rgba[index * 4 + 2] = value
                    rgba[index * 4 + 3] = 255
                }
            }
        } else if format == ImagePixelFormat.binary {
            // Unpack 1-bit pixels (most significant bit first), skipping row padding bits.
            var grayscale: [UInt8] = [UInt8](repeating: 0, count: Int(width * height))
            var index = 0
            let skip = stride * 8 - width
            var shift = 0
            var n = 1
            for i in 0..<length {
                let b = data[i]
                var byteCount = 7
                while byteCount >= 0 {
                    let tmp = (b & (1 << byteCount)) >> byteCount
                    if shift < stride * 8 * UInt(n) - skip {
                        grayscale[index] = tmp == 1 ? 255 : 0
                        index += 1
                    }
                    byteCount -= 1
                    shift += 1
                }
                if shift == Int(stride) * 8 * n {
                    n += 1
                }
            }
            for k in 0..<Int(width * height) {
                rgba[k * 4] = grayscale[k]
                rgba[k * 4 + 1] = grayscale[k]
                rgba[k * 4 + 2] = grayscale[k]
                rgba[k * 4 + 3] = 255
            }
        }
        dictionary.setObject(rgba, forKey: "data" as NSCopying)
    }
    return dictionary
}
```
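Both `createContourList()` implementations flatten each detected quadrilateral into a map with a `confidence` entry and corner keys `x1` to `y4`, and the normalize step reads the same keys back from the method-call arguments. The SDK-free Java sketch below isolates that round trip so it can be unit-tested without a device. The class `ContourMapSketch` and its methods are hypothetical, and the sketch assumes corners arrive as `{x, y}` integer pairs in the order the SDK reports them.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, SDK-free helper mirroring the map layout produced by
// createContourList() and consumed by the normalize step.
final class ContourMapSketch {
    private ContourMapSketch() {}

    // Pack one quadrilateral into {"confidence", "x1".."y4"}.
    // corners[i] is an {x, y} pair; the order is kept exactly as given.
    static Map<String, Object> toContourMap(int confidence, int[][] corners) {
        if (corners.length != 4) {
            throw new IllegalArgumentException("A quadrilateral needs exactly 4 corners");
        }
        Map<String, Object> map = new HashMap<>();
        map.put("confidence", confidence);
        for (int i = 0; i < 4; i++) {
            // Keys are 1-based to match the Dart side: x1/y1 ... x4/y4.
            map.put("x" + (i + 1), corners[i][0]);
            map.put("y" + (i + 1), corners[i][1]);
        }
        return map;
    }

    // Unpack "x1".."y4" back into four {x, y} pairs, rejecting missing keys.
    static int[][] fromContourMap(Map<String, Object> map) {
        int[][] corners = new int[4][2];
        for (int i = 0; i < 4; i++) {
            Object x = map.get("x" + (i + 1));
            Object y = map.get("y" + (i + 1));
            if (!(x instanceof Integer) || !(y instanceof Integer)) {
                throw new IllegalArgumentException("Missing or non-integer corner " + (i + 1));
            }
            corners[i][0] = (Integer) x;
            corners[i][1] = (Integer) y;
        }
        return corners;
    }
}
```

Keeping the key names in one place means the detect and normalize handlers cannot drift apart on spelling.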
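The detection and normalization steps both wrap the raw bytes from Dart in an `ImageData` using the caller-supplied `width`, `height`, and `stride`, so a mismatched stride or a truncated buffer only surfaces inside the SDK. A minimal pre-flight check might look like the sketch below. It assumes a byte-aligned pixel format in which every row occupies `stride` bytes (for example, 4 bytes per pixel for RGBA camera frames); `FrameCheckSketch` is hypothetical and not part of the Dynamsoft API.

```java
// Hypothetical pre-flight check before wrapping Dart bytes in an ImageData.
// Assumes a byte-aligned format: each row is `stride` bytes long and the
// first width * bytesPerPixel bytes of each row hold pixel data.
final class FrameCheckSketch {
    private FrameCheckSketch() {}

    static void checkFrame(byte[] bytes, int width, int height, int stride, int bytesPerPixel) {
        if (width <= 0 || height <= 0 || bytesPerPixel <= 0) {
            throw new IllegalArgumentException("width, height and bytesPerPixel must be positive");
        }
        // A row must be at least wide enough to hold its pixels.
        if ((long) width * bytesPerPixel > stride) {
            throw new IllegalArgumentException("stride " + stride + " is smaller than width * bytesPerPixel");
        }
        // The buffer must cover every row; long arithmetic avoids int overflow.
        long required = (long) stride * height;
        int actual = bytes == null ? 0 : bytes.length;
        if (actual < required) {
            throw new IllegalArgumentException("buffer holds " + actual + " bytes but " + required + " are required");
        }
    }
}
```

Calling it right before building the `ImageData` turns a silent mis-read into an error that can be reported to Dart with `result.error(...)`.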
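The Android `createNormalizedImage()` calls a `binary2grayscale()` helper that the listing does not show. One possible Java implementation, mirroring the iOS unpacking loop, is sketched below. It assumes the most significant bit of each byte is the leftmost pixel, a set bit is white, and every row occupies `stride` bytes with any trailing bits as padding. The class name is hypothetical; the parameter order matches the call site.

```java
// Hypothetical implementation of the binary2grayscale() helper called in
// createNormalizedImage(). Assumes 1 bit per pixel, most significant bit
// first, set bit = white (255), and rows padded to `stride` bytes.
final class BinaryUnpackSketch {
    private BinaryUnpackSketch() {}

    static void binary2grayscale(byte[] data, byte[] output, int width, int height, int stride, int length) {
        if ((long) stride * 8 < width) {
            throw new IllegalArgumentException("stride is too small for width");
        }
        if (length > data.length || (long) stride * height > length) {
            throw new IllegalArgumentException("length does not cover stride * height bytes");
        }
        if (output.length < width * height) {
            throw new IllegalArgumentException("output must hold width * height bytes");
        }
        for (int row = 0; row < height; row++) {
            int rowStart = row * stride;
            for (int col = 0; col < width; col++) {
                // & 0xFF undoes sign extension of Java's signed byte.
                int b = data[rowStart + (col >> 3)] & 0xFF;
                int bit = (b >> (7 - (col & 7))) & 1;
                output[row * width + col] = (byte) (bit == 1 ? 255 : 0);
            }
        }
    }
}
```

Indexing each row from `row * stride` skips the padding bits directly, which is equivalent to the shift counter in the Swift loop but easier to verify.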

Testing the Flutter Document Scan Plugin on Android and iOS

You can run your Flutter app on Android and iOS without modifying the Dart interface.

```bash
flutter run
```

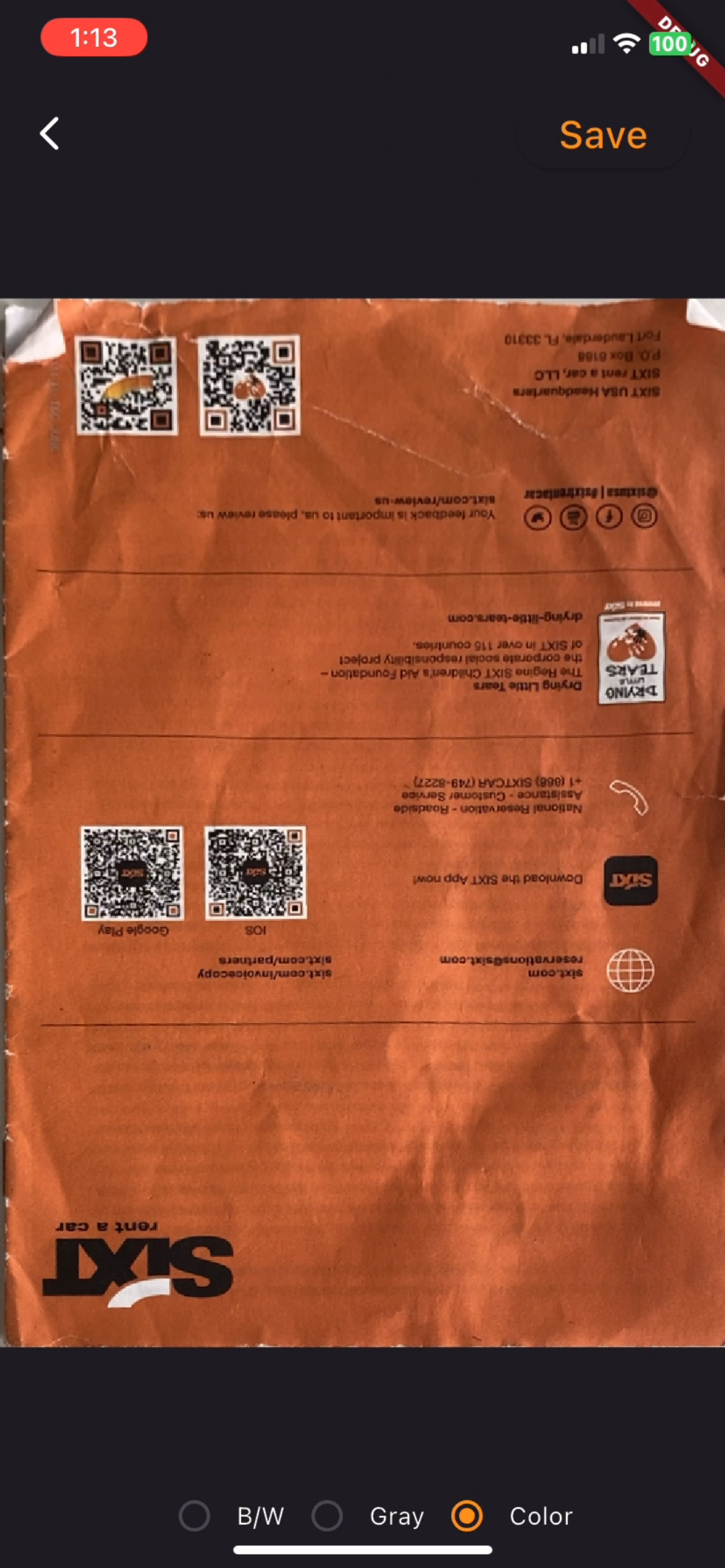

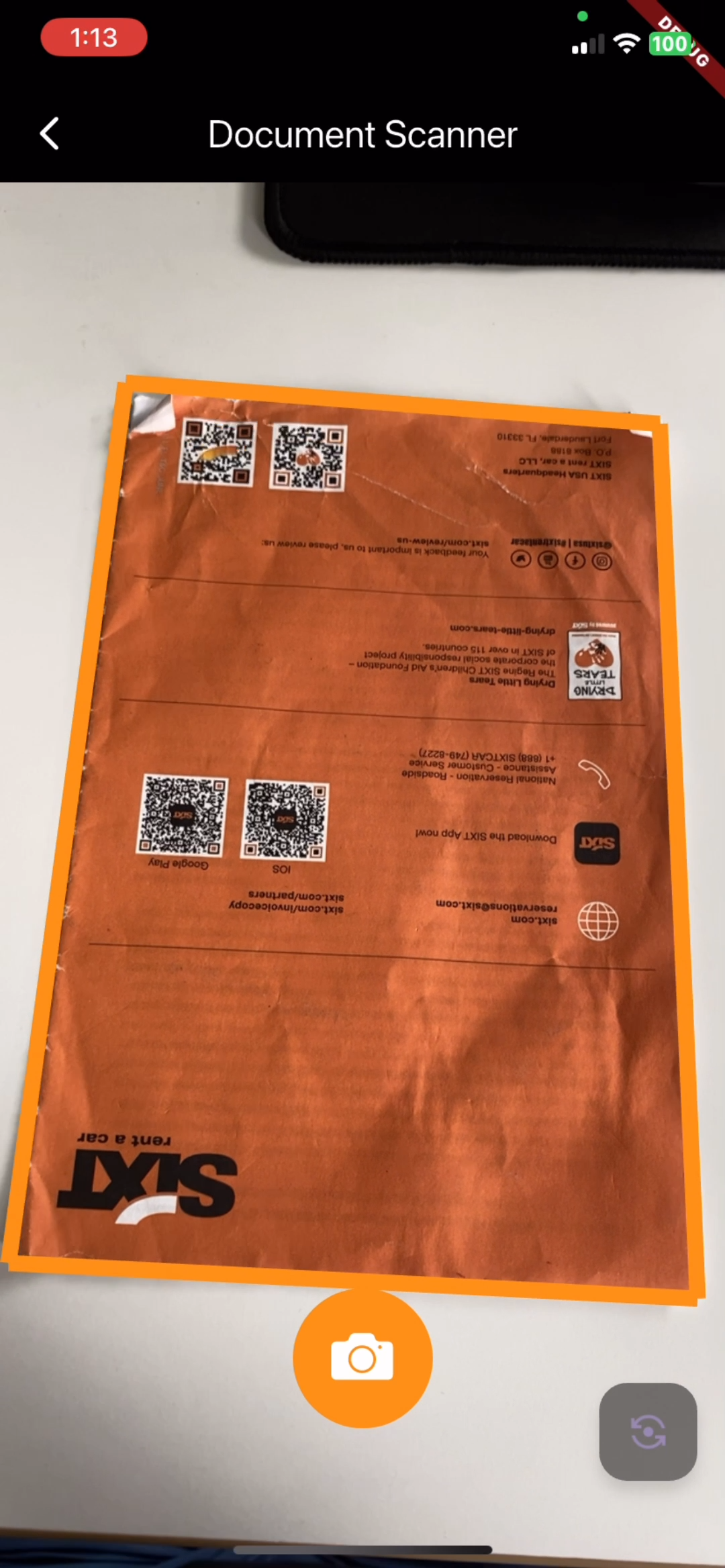

Document Edge Detection

Document Perspective Correction