Building a Flutter Document Scanning Plugin for Windows and Linux

Last week, we completed a Flutter document scanning plugin for the web — a great starting point. This week, we’re extending its capabilities to the desktop by integrating the Dynamsoft Capture Vision C++ SDK. The plugin will now support both Windows and Linux platforms. In this article, you’ll learn the steps to build a Flutter desktop document scanning plugin and explore the key differences in Flutter C++ implementation between Windows and Linux.

This article is Part 2 in a 4-Part Series.

- Part 1 - How to Build a Document Scanner Web App Using JavaScript and Flutter

- Part 2 - Building a Flutter Document Scanning Plugin for Windows and Linux

- Part 3 - How to Create a Flutter Document Scanning Plugin for Android and iOS

- Part 4 - How to Build a Cross-platform Document Scanner App with Flutter

Demo Video: Flutter Document Scanner for Windows

Flutter Document Scan SDK

https://pub.dev/packages/flutter_document_scan_sdk

Adding Windows and Linux Support to the Flutter Plugin

To enable Windows and Linux support in your plugin project, run the following command in the root directory:

flutter create --org com.dynamsoft --template=plugin --platforms=windows,linux .

Once the platform-specific code is generated, update the pubspec.yaml file to register the plugin implementations for each platform.

plugin:
  platforms:
    linux:
      pluginClass: FlutterDocumentScanSdkPlugin
    windows:
      pluginClass: FlutterDocumentScanSdkPluginCApi
    web:
      pluginClass: FlutterDocumentScanSdkWeb
      fileName: flutter_document_scan_sdk_web.dart

You may have noticed that the Flutter plugin project contains two key files: flutter_document_scan_sdk_web.dart and flutter_document_scan_sdk_method_channel.dart. The former is the web implementation, which we completed last week. The latter serves the mobile and desktop platforms and uses a method channel to communicate with native code.

class MethodChannelFlutterDocumentScanSdk
    extends FlutterDocumentScanSdkPlatform {
  @visibleForTesting
  final methodChannel = const MethodChannel('flutter_document_scan_sdk');

  @override
  Future<int?> init(String key) async {
    return await methodChannel.invokeMethod<int>('init', {'key': key});
  }

  @override
  Future<List<DocumentResult>> detectFile(String file) async {
    List? results = await methodChannel.invokeListMethod<dynamic>(
      'detectFile',
      {'file': file},
    );

    return _resultWrapper(results);
  }

  @override
  Future<List<DocumentResult>> detectBuffer(Uint8List bytes, int width,
      int height, int stride, int format, int rotation) async {
    List? results = await methodChannel.invokeListMethod<dynamic>(
      'detectBuffer',
      {
        'bytes': bytes,
        'width': width,
        'height': height,
        'stride': stride,
        'format': format,
        'rotation': rotation
      },
    );

    return _resultWrapper(results);
  }

  List<DocumentResult> _resultWrapper(List<dynamic>? results) {
    List<DocumentResult> output = [];

    if (results != null) {
      for (var result in results) {
        int confidence = result['confidence'];
        List<Offset> offsets = [];
        int x1 = result['x1'];
        int y1 = result['y1'];
        int x2 = result['x2'];
        int y2 = result['y2'];
        int x3 = result['x3'];
        int y3 = result['y3'];
        int x4 = result['x4'];
        int y4 = result['y4'];
        offsets.add(Offset(x1.toDouble(), y1.toDouble()));
        offsets.add(Offset(x2.toDouble(), y2.toDouble()));
        offsets.add(Offset(x3.toDouble(), y3.toDouble()));
        offsets.add(Offset(x4.toDouble(), y4.toDouble()));
        DocumentResult documentResult = DocumentResult(confidence, offsets);
        output.add(documentResult);
      }
    }

    return output;
  }

  @override
  Future<NormalizedImage?> normalizeFile(
      String file, List<Offset> points, ColorMode color) async {
    Offset offset = points[0];
    int x1 = offset.dx.toInt();
    int y1 = offset.dy.toInt();

    offset = points[1];
    int x2 = offset.dx.toInt();
    int y2 = offset.dy.toInt();

    offset = points[2];
    int x3 = offset.dx.toInt();
    int y3 = offset.dy.toInt();

    offset = points[3];
    int x4 = offset.dx.toInt();
    int y4 = offset.dy.toInt();

    Map? result = await methodChannel.invokeMapMethod<String, dynamic>(
      'normalizeFile',
      {
        'file': file,
        'x1': x1,
        'y1': y1,
        'x2': x2,
        'y2': y2,
        'x3': x3,
        'y3': y3,
        'x4': x4,
        'y4': y4,
        'color': color.index,
      },
    );

    if (result != null) {
      var data = result['data'];

      if (data is List) {
        return NormalizedImage(
          Uint8List.fromList(data.cast<int>()),
          result['width'],
          result['height'],
        );
      }

      return NormalizedImage(
        data,
        result['width'],
        result['height'],
      );
    }

    return null;
  }

  @override
  Future<NormalizedImage?> normalizeBuffer(
      Uint8List bytes,
      int width,
      int height,
      int stride,
      int format,
      List<Offset> points,
      int rotation,
      ColorMode color) async {
    Offset offset = points[0];
    int x1 = offset.dx.toInt();
    int y1 = offset.dy.toInt();

    offset = points[1];
    int x2 = offset.dx.toInt();
    int y2 = offset.dy.toInt();

    offset = points[2];
    int x3 = offset.dx.toInt();
    int y3 = offset.dy.toInt();

    offset = points[3];
    int x4 = offset.dx.toInt();
    int y4 = offset.dy.toInt();

    Map? result = await methodChannel.invokeMapMethod<String, dynamic>(
      'normalizeBuffer',
      {
        'bytes': bytes,
        'width': width,
        'height': height,
        'stride': stride,
        'format': format,
        'x1': x1,
        'y1': y1,
        'x2': x2,
        'y2': y2,
        'x3': x3,
        'y3': y3,
        'x4': x4,
        'y4': y4,
        'rotation': rotation,
        'color': color.index,
      },
    );

    if (result != null) {
      var data = result['data'];

      if (data is List) {
        return NormalizedImage(
          Uint8List.fromList(data.cast<int>()),
          result['width'],
          result['height'],
        );
      }

      return NormalizedImage(
        data,
        result['width'],
        result['height'],
      );
    }

    return null;
  }
}

Linking Libraries and Writing C/C++ Code

Linking Shared Libraries in a Flutter Plugin

Flutter uses CMake to build plugins for Windows and Linux. The first step is to set up the CMakeLists.txt file by configuring link_directories, target_link_libraries, and flutter_document_scan_sdk_bundled_libraries.

On Windows, you specify the library search path and link against the required Dynamsoft libraries.

cmake_minimum_required(VERSION 3.14)

set(PROJECT_NAME "flutter_document_scan_sdk")
project(${PROJECT_NAME} LANGUAGES CXX)

set(PLUGIN_NAME "flutter_document_scan_sdk_plugin")

link_directories("${PROJECT_SOURCE_DIR}/lib/")

list(APPEND PLUGIN_SOURCES
  "flutter_document_scan_sdk_plugin.cpp"
  "flutter_document_scan_sdk_plugin.h"
)

add_library(${PLUGIN_NAME} SHARED
  "include/flutter_document_scan_sdk/flutter_document_scan_sdk_plugin_c_api.h"
  "flutter_document_scan_sdk_plugin_c_api.cpp"
  ${PLUGIN_SOURCES}
)

apply_standard_settings(${PLUGIN_NAME})

set_target_properties(${PLUGIN_NAME} PROPERTIES
  CXX_VISIBILITY_PRESET hidden)
target_compile_definitions(${PLUGIN_NAME} PRIVATE FLUTTER_PLUGIN_IMPL)

target_include_directories(${PLUGIN_NAME} INTERFACE
  "${CMAKE_CURRENT_SOURCE_DIR}/include")
target_compile_options(${PLUGIN_NAME} PRIVATE /wd4121 /W3 /WX-)
target_link_libraries(${PLUGIN_NAME} PRIVATE flutter flutter_wrapper_plugin "DynamsoftCorex64" "DynamsoftLicensex64" "DynamsoftCaptureVisionRouterx64" "DynamsoftUtilityx64")

set(flutter_document_scan_sdk_bundled_libraries
  "${PROJECT_SOURCE_DIR}/bin/"
  "${PROJECT_SOURCE_DIR}/../resources/Templates"
  PARENT_SCOPE
)

On Linux, in addition to linking the necessary shared libraries, you must specify the runtime path (rpath) and use the install() command to copy shared libraries to the output lib folder.

cmake_minimum_required(VERSION 3.10)

set(PROJECT_NAME "flutter_document_scan_sdk")
project(${PROJECT_NAME} LANGUAGES CXX)

set(PLUGIN_NAME "flutter_document_scan_sdk_plugin")

link_directories("${CMAKE_CURRENT_SOURCE_DIR}/lib")

add_library(${PLUGIN_NAME} SHARED
  "flutter_document_scan_sdk_plugin.cc"
)

apply_standard_settings(${PLUGIN_NAME})

set_target_properties(${PLUGIN_NAME} PROPERTIES
  CXX_VISIBILITY_PRESET hidden)
target_compile_definitions(${PLUGIN_NAME} PRIVATE FLUTTER_PLUGIN_IMPL)

target_include_directories(${PLUGIN_NAME} INTERFACE
  "${CMAKE_CURRENT_SOURCE_DIR}/include")
target_link_libraries(${PLUGIN_NAME} PRIVATE flutter "DynamsoftCore" "DynamsoftLicense" "DynamsoftCaptureVisionRouter" "DynamsoftUtility")
target_link_libraries(${PLUGIN_NAME} PRIVATE PkgConfig::GTK)

set_target_properties(${PLUGIN_NAME} PROPERTIES
  INSTALL_RPATH "$ORIGIN"
  BUILD_RPATH "$ORIGIN"
)

set(flutter_document_scan_sdk_bundled_libraries
  ""
  PARENT_SCOPE
)

install(DIRECTORY
  "${PROJECT_SOURCE_DIR}/../linux/lib/"
  DESTINATION "${CMAKE_INSTALL_PREFIX}/lib/"
)

install(DIRECTORY
  "${PROJECT_SOURCE_DIR}/../resources/Templates"
  DESTINATION "${CMAKE_INSTALL_PREFIX}/lib/"
)

Implementing Flutter C/C++ Code Logic for Windows and Linux

The native entry points for handling Flutter method calls differ between platforms:

- On Windows, it’s FlutterDocumentScanSdkPlugin::HandleMethodCall

- On Linux, it’s flutter_document_scan_sdk_plugin_handle_method_call

Since the Flutter headers and data types vary between Windows and Linux, the implementation must adapt accordingly.

Windows

#include "flutter_document_scan_sdk_plugin.h"

#include <windows.h>
#include <VersionHelpers.h>

#include <flutter/method_channel.h>
#include <flutter/plugin_registrar_windows.h>
#include <flutter/standard_method_codec.h>

#include <memory>
#include <sstream>

void FlutterDocumentScanSdkPlugin::HandleMethodCall(
    const flutter::MethodCall<flutter::EncodableValue> &method_call,
    std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>> result) {}

Linux

#include "include/flutter_document_scan_sdk/flutter_document_scan_sdk_plugin.h"

#include <flutter_linux/flutter_linux.h>
#include <gtk/gtk.h>
#include <sys/utsname.h>

#include <cstring>

static void flutter_document_scan_sdk_plugin_handle_method_call(
    FlutterDocumentScanSdkPlugin *self,
    FlMethodCall *method_call) {}

To handle method calls, you’ll need to:

- Parse the method name and arguments

- Perform the requested action (e.g., initialize the license, detect documents, normalize images)

- Return the result using platform-specific APIs

For example, the license argument is a std::string on Windows, but a const char* on Linux.

Windows

const auto *arguments = std::get_if<EncodableMap>(method_call.arguments());

if (method_call.method_name().compare("init") == 0) {
  std::string license;
  int ret = 0;

  if (arguments) {
    auto license_it = arguments->find(EncodableValue("key"));
    if (license_it != arguments->end()) {
      license = std::get<std::string>(license_it->second);
    }
    ret = manager->SetLicense(license.c_str());
  }

  result->Success(EncodableValue(ret));
}
...

Linux

if (strcmp(method, "init") == 0) {
  if (fl_value_get_type(args) != FL_VALUE_TYPE_MAP) {
    return;
  }

  FlValue *value = fl_value_lookup_string(args, "key");
  if (value == nullptr) {
    return;
  }

  const char *license = fl_value_get_string(value);
  int ret = self->manager->SetLicense(license);
  g_autoptr(FlValue) result = fl_value_new_int(ret);
  response = FL_METHOD_RESPONSE(fl_method_success_response_new(result));
}
...
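The Windows snippet above reads the key with std::get<std::string>, which throws std::bad_variant_access if the Dart side ever passes a non-string value. A lookup that degrades gracefully can be sketched as follows. This is a self-contained illustration that deliberately avoids the Flutter headers: Value and ArgMap are hypothetical stand-ins for flutter::EncodableValue and flutter::EncodableMap (which are likewise built on std::variant), and FindString is an illustrative helper, not part of any Flutter API.

```cpp
#include <map>
#include <optional>
#include <string>
#include <variant>

// Hypothetical stand-ins for flutter::EncodableValue / flutter::EncodableMap.
using Value = std::variant<std::monostate, int, std::string>;
using ArgMap = std::map<std::string, Value>;

// Returns the string stored under `key`, or std::nullopt when the key is
// missing or holds a non-string value. Unlike std::get, this never throws.
std::optional<std::string> FindString(const ArgMap &args,
                                      const std::string &key) {
  auto it = args.find(key);
  if (it == args.end()) {
    return std::nullopt;
  }
  if (const auto *text = std::get_if<std::string>(&it->second)) {
    return *text;
  }
  return std::nullopt;
}
```

With a helper of this shape, the "init" branch could report an error to Dart when the key is absent or has the wrong type, instead of letting an exception escape the handler.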

Next, you’ll define a document_manager.h file to implement the actual logic using the Dynamsoft Capture Vision SDK. Since this SDK provides a consistent C++ API across platforms, most of the logic can be shared. However, returning data to Flutter still requires platform-specific wrapping — using EncodableMap for Windows and FlValue for Linux.
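One way to keep the shared logic free of Flutter types is to have it return a plain struct and leave the conversion to a thin wrapper on each platform. The sketch below is an assumption about how such a split could look, not the plugin's actual code: DetectedQuad and ToFields are hypothetical names. The generated keys match the ones the Dart _resultWrapper reads (confidence, x1 through y4).

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical platform-neutral result type (not part of the Dynamsoft SDK).
struct DetectedQuad {
  int confidence = 0;
  std::array<std::pair<int, int>, 4> corners{};  // (x, y) for each corner
};

// Flattens a quad into ordered key/value pairs:
// confidence, x1, y1, x2, y2, x3, y3, x4, y4.
// A Windows wrapper could copy these into an EncodableMap and a Linux
// wrapper into an FlValue map.
std::vector<std::pair<std::string, int>> ToFields(const DetectedQuad &quad) {
  std::vector<std::pair<std::string, int>> fields;
  fields.reserve(9);
  fields.emplace_back("confidence", quad.confidence);
  for (std::size_t i = 0; i < quad.corners.size(); ++i) {
    const std::string n = std::to_string(i + 1);
    fields.emplace_back("x" + n, quad.corners[i].first);
    fields.emplace_back("y" + n, quad.corners[i].second);
  }
  return fields;
}
```

The trade-off is one extra copy per result in exchange for a single place where the key names are defined.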

Import the Dynamsoft header files:

#include "DynamsoftCaptureVisionRouter.h"
#include "DynamsoftUtility.h"

using namespace std;
using namespace dynamsoft::ddn;
using namespace dynamsoft::license;
using namespace dynamsoft::cvr;
using namespace dynamsoft::utility;
using namespace dynamsoft::basic_structures;

Initialize and destroy the SDK components, and set the license key:

class DocumentManager {
public:
  ~DocumentManager() {
    if (cvr != NULL) {
      delete cvr;
      cvr = NULL;
    }
    if (listener) {
      delete listener;
      listener = NULL;
    }
    if (fileFetcher) {
      delete fileFetcher;
      fileFetcher = NULL;
    }
    if (capturedReceiver) {
      delete capturedReceiver;
      capturedReceiver = NULL;
    }
    if (processor) {
      delete processor;
      processor = NULL;
    }
  }

  int SetLicense(const char *license) {
    char errorMsgBuffer[512];
    int ret = CLicenseManager::InitLicense(license, errorMsgBuffer, 512);
    printf("InitLicense: %s\n", errorMsgBuffer);
    if (ret)
      return ret;

    cvr = new CCaptureVisionRouter;

    fileFetcher = new CFileFetcher();
    ret = cvr->SetInput(fileFetcher);
    if (ret) {
      printf("SetInput error: %d\n", ret);
    }

    capturedReceiver = new MyCapturedResultReceiver;
    ret = cvr->AddResultReceiver(capturedReceiver);
    if (ret) {
      printf("AddResultReceiver error: %d\n", ret);
    }

    listener = new MyImageSourceStateListener(cvr, capturedReceiver);
    ret = cvr->AddImageSourceStateListener(listener);
    if (ret) {
      printf("AddImageSourceStateListener error: %d\n", ret);
    }

    processor = new CImageProcessor();
    return ret;
  }

private:
  // Default to nullptr so the destructor is safe even if SetLicense()
  // was never called or returned early.
  MyCapturedResultReceiver *capturedReceiver = nullptr;
  MyImageSourceStateListener *listener = nullptr;
  CFileFetcher *fileFetcher = nullptr;
  CCaptureVisionRouter *cvr = nullptr;
  CImageProcessor *processor = nullptr;
};

Detect document edges with StartCapturing() and retrieve the results from a custom MyCapturedResultReceiver. The results are then converted from SDK structures into Flutter-compatible data types when the image source reports that it is exhausted.

On Windows, results are wrapped into EncodableMap and returned via MethodResult::Success().

class MyCapturedResultReceiver : public CCapturedResultReceiver {
public:
  vector<CCapturedResult *> results;
  mutex results_mutex;

public:
  void OnCapturedResultReceived(CCapturedResult *pResult) override {
    pResult->Retain();
    std::lock_guard<std::mutex> lock(results_mutex);
    results.push_back(pResult);
  }
};

class MyImageSourceStateListener : public CImageSourceStateListener {
private:
  CCaptureVisionRouter *m_router;
  MyCapturedResultReceiver *m_receiver;

public:
  vector<std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>>> pendingResults = {};

  MyImageSourceStateListener(CCaptureVisionRouter *router, MyCapturedResultReceiver *receiver) {
    m_router = router;
    m_receiver = receiver;
  }

  void OnImageSourceStateReceived(ImageSourceState state) {
    if (state == ISS_EXHAUSTED) {
      m_router->StopCapturing();

      std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>> sender = std::move(pendingResults.front());
      pendingResults.erase(pendingResults.begin());

      EncodableValue out;
      for (auto *result : m_receiver->results) {
        CProcessedDocumentResult *pResults = result->GetProcessedDocumentResult();
        if (pResults) {
          int contourCount = pResults->GetDetectedQuadResultItemsCount();
          if (contourCount > 0) {
            out = CreateContourList(result);
          }
          pResults->Release();
        }
        result->Release();
      }

      m_receiver->results.clear();
      sender->Success(out);
    }
  }

  EncodableValue CreateContourList(CCapturedResult *capturedResult) {
    EncodableList contours;
    CProcessedDocumentResult *pResults = capturedResult->GetProcessedDocumentResult();
    int count = pResults->GetDetectedQuadResultItemsCount();

    for (int i = 0; i < count; i++) {
      EncodableMap out;
      const CDetectedQuadResultItem *quadResult = pResults->GetDetectedQuadResultItem(i);
      int confidence = quadResult->GetConfidenceAsDocumentBoundary();

      // Keep the quadrilateral in a local variable so `points` does not
      // refer to a temporary (this mirrors the Linux version).
      CQuadrilateral location = quadResult->GetLocation();
      CPoint *points = location.points;
      int x1 = points[0][0];
      int y1 = points[0][1];
      int x2 = points[1][0];
      int y2 = points[1][1];
      int x3 = points[2][0];
      int y3 = points[2][1];
      int x4 = points[3][0];
      int y4 = points[3][1];

      out[EncodableValue("confidence")] = EncodableValue(confidence);
      out[EncodableValue("x1")] = EncodableValue(x1);
      out[EncodableValue("y1")] = EncodableValue(y1);
      out[EncodableValue("x2")] = EncodableValue(x2);
      out[EncodableValue("y2")] = EncodableValue(y2);
      out[EncodableValue("x3")] = EncodableValue(x3);
      out[EncodableValue("y3")] = EncodableValue(y3);
      out[EncodableValue("x4")] = EncodableValue(x4);
      out[EncodableValue("y4")] = EncodableValue(y4);
      contours.push_back(out);
    }

    return contours;
  }
};

void start(const char *templateName) {
  if (!cvr)
    return;

  char errorMsg[512] = {0};
  int errorCode = cvr->StartCapturing(templateName, false, errorMsg, 512);
  if (errorCode != 0) {
    printf("StartCapturing: %s\n", errorMsg);
  }
}

void DetectBuffer(std::unique_ptr<flutter::MethodResult<flutter::EncodableValue>> &pendingResult, const unsigned char *buffer, int width, int height, int stride, int format, int rotation) {
  if (!cvr) {
    EncodableList out;
    pendingResult->Success(out);
    return;
  }

  listener->pendingResults.push_back(std::move(pendingResult));

  CImageData *imageData = new CImageData(stride * height, buffer, width, height, stride, getPixelFormat(format), rotation);
  fileFetcher->SetFile(imageData);
  delete imageData;

  start(CPresetTemplate::PT_DETECT_DOCUMENT_BOUNDARIES);
}

On Linux, results are built as FlValue maps and returned using fl_method_success_response_new().

class MyCapturedResultReceiver : public CCapturedResultReceiver {
public:
  std::vector<CCapturedResult *> results;
  std::mutex results_mutex;

  void OnCapturedResultReceived(CCapturedResult *pResult) override {
    pResult->Retain();
    std::lock_guard<std::mutex> lock(results_mutex);
    results.push_back(pResult);
  }
};

class MyImageSourceStateListener : public CImageSourceStateListener {
private:
  CCaptureVisionRouter *m_router;
  MyCapturedResultReceiver *m_receiver;
  FlMethodCall *m_method_call;

public:
  MyImageSourceStateListener(CCaptureVisionRouter *router, MyCapturedResultReceiver *receiver)
      : m_router(router), m_receiver(receiver), m_method_call(nullptr) {}

  ~MyImageSourceStateListener() {
    if (m_method_call) {
      g_object_unref(m_method_call);
    }
  }

  void OnImageSourceStateReceived(ImageSourceState state) override {
    if (state == ISS_EXHAUSTED) {
      m_router->StopCapturing();

      FlValue *out = fl_value_new_list();
      for (auto *result : m_receiver->results) {
        CProcessedDocumentResult *pResults = result->GetProcessedDocumentResult();
        if (pResults) {
          int contourCount = pResults->GetDetectedQuadResultItemsCount();
          if (contourCount > 0) {
            out = CreateContourList(result);
          }
          pResults->Release();
        }
        result->Release();
      }

      m_receiver->results.clear();

      g_autoptr(FlMethodResponse) response = FL_METHOD_RESPONSE(fl_method_success_response_new(out));
      if (m_method_call) {
        fl_method_call_respond(m_method_call, response, nullptr);
        g_object_unref(m_method_call); // Release the method call
        m_method_call = nullptr;
      }
    }
  }

  void SetMethodCall(FlMethodCall *method_call) {
    if (m_method_call) {
      g_object_unref(m_method_call);
    }
    m_method_call = method_call;
    if (m_method_call) {
      g_object_ref(m_method_call); // Retain the method call
    }
  }

  FlValue *CreateContourList(const CCapturedResult *capturedResult) {
    FlValue *contours = fl_value_new_list();
    CProcessedDocumentResult *pResults = capturedResult->GetProcessedDocumentResult();
    int count = pResults->GetDetectedQuadResultItemsCount();

    for (int i = 0; i < count; i++) {
      FlValue *out = fl_value_new_map();
      const CDetectedQuadResultItem *quadResult = pResults->GetDetectedQuadResultItem(i);
      int confidence = quadResult->GetConfidenceAsDocumentBoundary();

      CQuadrilateral location = quadResult->GetLocation();
      CPoint *points = location.points;
      int x1 = points[0][0];
      int y1 = points[0][1];
      int x2 = points[1][0];
      int y2 = points[1][1];
      int x3 = points[2][0];
      int y3 = points[2][1];
      int x4 = points[3][0];
      int y4 = points[3][1];

      fl_value_set_string_take(out, "confidence", fl_value_new_int(confidence));
      fl_value_set_string_take(out, "x1", fl_value_new_int(x1));
      fl_value_set_string_take(out, "y1", fl_value_new_int(y1));
      fl_value_set_string_take(out, "x2", fl_value_new_int(x2));
      fl_value_set_string_take(out, "y2", fl_value_new_int(y2));
      fl_value_set_string_take(out, "x3", fl_value_new_int(x3));
      fl_value_set_string_take(out, "y3", fl_value_new_int(y3));
      fl_value_set_string_take(out, "x4", fl_value_new_int(x4));
      fl_value_set_string_take(out, "y4", fl_value_new_int(y4));
      fl_value_append_take(contours, out);
    }

    return contours;
  }
};

void start(const char *templateName) {
  if (!cvr)
    return;

  char errorMsg[512] = {0};
  int errorCode = cvr->StartCapturing(templateName, false, errorMsg, 512);
  if (errorCode != 0) {
    printf("StartCapturing: %s\n", errorMsg);
  }
}

void DetectBuffer(FlMethodCall *method_call, const unsigned char *buffer, int width, int height, int stride, int format, int rotation) {
  if (!cvr) {
    FlValue *out = fl_value_new_list();
    g_autoptr(FlMethodResponse) response = FL_METHOD_RESPONSE(fl_method_success_response_new(out));
    fl_method_call_respond(method_call, response, nullptr);
    return;
  }

  CImageData *imageData = new CImageData(stride * height, buffer, width, height, stride, getPixelFormat(format), rotation);
  fileFetcher->SetFile(imageData);
  delete imageData;

  listener->SetMethodCall(method_call);
  start(CPresetTemplate::PT_DETECT_DOCUMENT_BOUNDARIES);
}

Call Capture() with a template that includes ROI (region of interest) coordinates. These coordinates are passed as a quadrilateral structure.

SimplifiedCaptureVisionSettings settings = {};
cvr->GetSimplifiedSettings(CPresetTemplate::PT_NORMALIZE_DOCUMENT, &settings);

CQuadrilateral quad;
quad.points[0][0] = x1;
quad.points[0][1] = y1;
quad.points[1][0] = x2;
quad.points[1][1] = y2;
quad.points[2][0] = x3;
quad.points[2][1] = y3;
quad.points[3][0] = x4;
quad.points[3][1] = y4;

settings.roi = quad;
settings.roiMeasuredInPercentage = 0;
settings.documentSettings.colourMode = (ImageColourMode)color;

char errorMsgBuffer[512];
int ret = cvr->UpdateSettings(CPresetTemplate::PT_NORMALIZE_DOCUMENT, &settings, errorMsgBuffer, 512);
if (ret) {
  printf("Error: %s\n", errorMsgBuffer);
}

CImageData *imageData = new CImageData(stride * height, buffer, width, height, stride, getPixelFormat(format), rotation);
CCapturedResult *capturedResult = cvr->Capture(imageData, CPresetTemplate::PT_NORMALIZE_DOCUMENT);
delete imageData;

Create a Flutter C++ map object to pass the normalized image data to Flutter:

Windows:

EncodableMap map;
map[EncodableValue("width")] = EncodableValue(width);
map[EncodableValue("height")] = EncodableValue(height);
map[EncodableValue("stride")] = EncodableValue(stride);
map[EncodableValue("format")] = EncodableValue(format);
map[EncodableValue("orientation")] = EncodableValue(orientation);
map[EncodableValue("length")] = EncodableValue(length);

std::vector<uint8_t> rawBytes(rgba, rgba + width * height * 4);
map[EncodableValue("data")] = rawBytes;

Linux:

FlValue *result = fl_value_new_map();
fl_value_set_string_take(result, "width", fl_value_new_int(width));
fl_value_set_string_take(result, "height", fl_value_new_int(height));
fl_value_set_string_take(result, "stride", fl_value_new_int(stride));
fl_value_set_string_take(result, "format", fl_value_new_int(format));
fl_value_set_string_take(result, "orientation", fl_value_new_int(orientation));
fl_value_set_string_take(result, "length", fl_value_new_int(length));
fl_value_set_string_take(result, "data", fl_value_new_uint8_list(rgba, width * height * 4));

Before passing image data back to Flutter:

- Convert binary images to grayscale, if necessary:

// Usage
unsigned char *grayscale = new unsigned char[width * height];
binary2grayscale(data, grayscale, width, height, stride, length);

// Definition: expands each source bit (most significant bit first) into
// one 0/255 output byte, skipping the padding bits at the end of each row.
void binary2grayscale(unsigned char* data, unsigned char* output, int width, int height, int stride, int length) {
  int index = 0;
  int skip = stride * 8 - width;
  int shift = 0;
  int n = 1;

  for (int i = 0; i < length; ++i) {
    unsigned char b = data[i];
    int byteCount = 7;
    while (byteCount >= 0) {
      int tmp = (b & (1 << byteCount)) >> byteCount;

      if (shift < stride * 8 * n - skip) {
        if (tmp == 1)
          output[index] = 255;
        else
          output[index] = 0;
        index += 1;
      }

      byteCount -= 1;
      shift += 1;
    }

    if (shift == stride * 8 * n) {
      n += 1;
    }
  }
}

- Convert pixel formats to rgba8888 or bgra8888, since these are the only formats supported by Flutter’s decodeImageFromPixels() method:

unsigned char *rgba = new unsigned char[width * height * 4];
memset(rgba, 0, width * height * 4);

if (format == IPF_RGB_888) {
  int dataIndex = 0;
  for (int i = 0; i < height; i++) {
    for (int j = 0; j < width; j++) {
      int index = i * width + j;
      rgba[index * 4] = data[dataIndex + 2];     // red
      rgba[index * 4 + 1] = data[dataIndex + 1]; // green
      rgba[index * 4 + 2] = data[dataIndex];     // blue
      rgba[index * 4 + 3] = 255;                 // alpha
      dataIndex += 3;
    }
  }
} else if (format == IPF_GRAYSCALED) {
  int dataIndex = 0;
  for (int i = 0; i < height; i++) {
    for (int j = 0; j < width; j++) {
      int index = i * width + j;
      rgba[index * 4] = data[dataIndex];
      rgba[index * 4 + 1] = data[dataIndex];
      rgba[index * 4 + 2] = data[dataIndex];
      rgba[index * 4 + 3] = 255;
      dataIndex += 1;
    }
  }
} else if (format == IPF_BINARY) {
  unsigned char *grayscale = new unsigned char[width * height];
  binary2grayscale(data, grayscale, width, height, stride, length);

  int dataIndex = 0;
  for (int i = 0; i < height; i++) {
    for (int j = 0; j < width; j++) {
      int index = i * width + j;
      rgba[index * 4] = grayscale[dataIndex];
      rgba[index * 4 + 1] = grayscale[dataIndex];
      rgba[index * 4 + 2] = grayscale[dataIndex];
      rgba[index * 4 + 3] = 255;
      dataIndex += 1;
    }
  }

  // The buffer was allocated with new[], so release it with delete[].
  delete[] grayscale;
}
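The RGB and grayscale loops above advance through the source buffer without consulting stride, so they assume every row is tightly packed. Whether the SDK pads rows is worth checking against real buffers; if it does, a stride-aware variant is safer. The following is a minimal, self-contained sketch under stated assumptions, not the plugin's code: BinaryToGray and BgrToRgba are hypothetical helper names, 1-bit pixels are assumed to be stored most significant bit first, and 24-bit pixels are assumed to arrive in B, G, R byte order (as the index swapping in the original loop implies). It uses std::vector instead of raw new and delete so no manual cleanup is needed.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Expands 1-bit-per-pixel rows (MSB first, `stride` bytes per row) into one
// 0/255 grayscale byte per pixel, ignoring padding bits at the row end.
std::vector<std::uint8_t> BinaryToGray(const std::uint8_t *data, int width,
                                       int height, int stride) {
  std::vector<std::uint8_t> gray(static_cast<std::size_t>(width) * height);
  for (int y = 0; y < height; ++y) {
    const std::uint8_t *row = data + static_cast<std::size_t>(y) * stride;
    for (int x = 0; x < width; ++x) {
      const int bit = (row[x / 8] >> (7 - x % 8)) & 1;
      gray[static_cast<std::size_t>(y) * width + x] = bit ? 255 : 0;
    }
  }
  return gray;
}

// Converts 24-bit B,G,R rows (`stride` bytes per row) into tightly packed
// RGBA8888 with an opaque alpha channel.
std::vector<std::uint8_t> BgrToRgba(const std::uint8_t *data, int width,
                                    int height, int stride) {
  std::vector<std::uint8_t> rgba(static_cast<std::size_t>(width) * height * 4);
  for (int y = 0; y < height; ++y) {
    const std::uint8_t *row = data + static_cast<std::size_t>(y) * stride;
    for (int x = 0; x < width; ++x) {
      std::uint8_t *out = &rgba[(static_cast<std::size_t>(y) * width + x) * 4];
      out[0] = row[x * 3 + 2];  // red
      out[1] = row[x * 3 + 1];  // green
      out[2] = row[x * 3];      // blue
      out[3] = 255;             // alpha
    }
  }
  return rgba;
}
```

The resulting vector's data() and size() can be handed to either platform wrapper in place of the raw rgba pointer.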


Testing the Flutter Document Scan SDK Plugin on Windows and Linux

Since the web example code is shared with the desktop platforms, no changes are needed. You can directly run the existing example on Windows or Linux:

flutter run -d windows
# or
flutter run -d linux

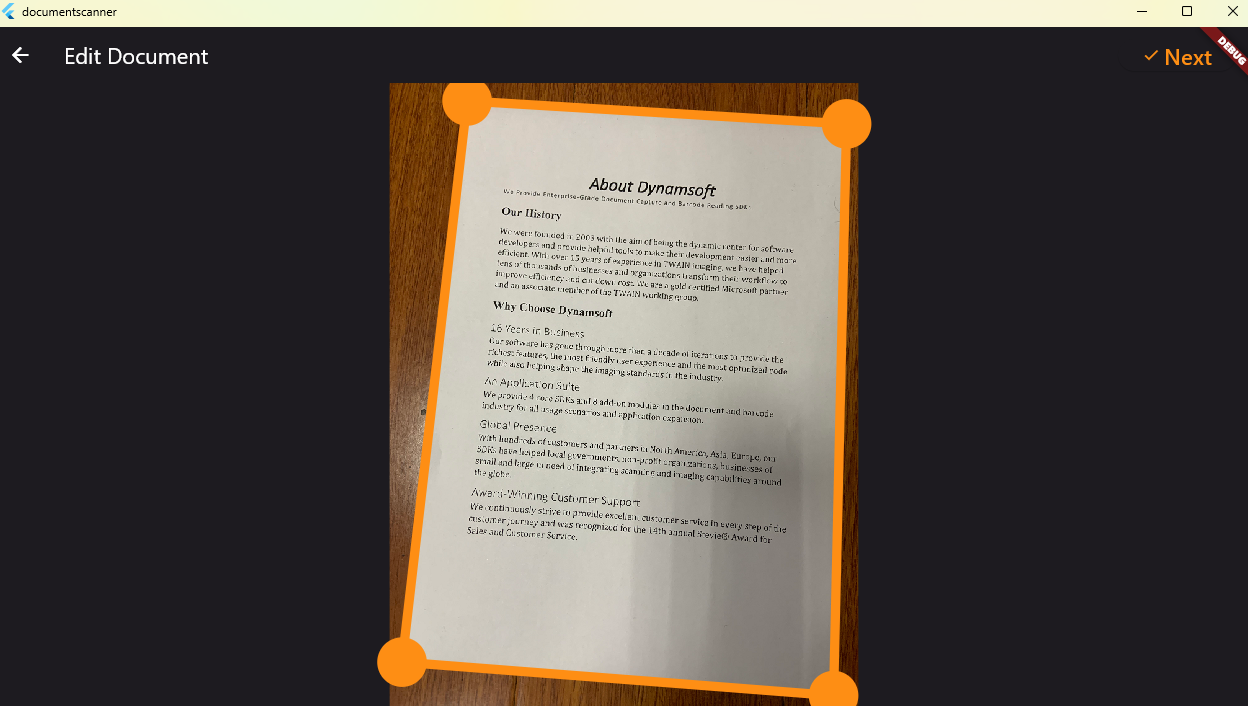

Document Edge Detection

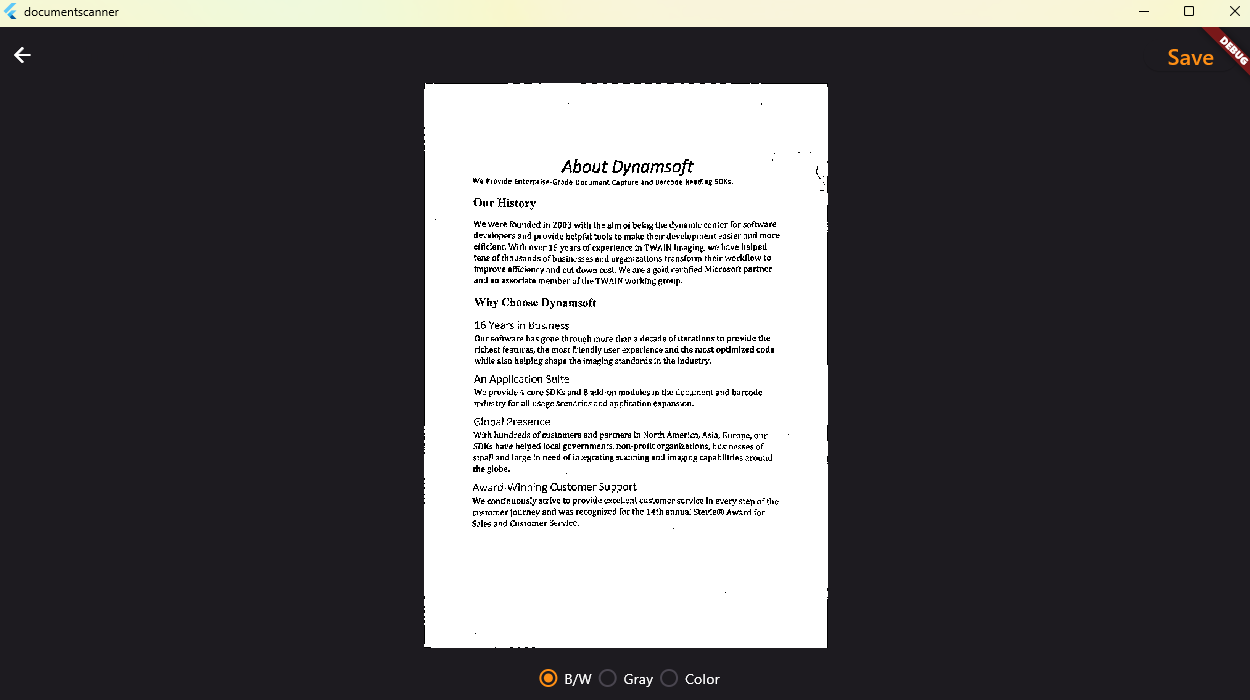

Document Normalization