How to Build a Capacitor Plugin for Camera Preview

Capacitor is an open-source native runtime created by the Ionic team for building Web Native apps. We can use it to create cross-platform iOS, Android, and Progressive Web Apps with JavaScript, HTML, and CSS.

Using Capacitor plugins, we can use JavaScript to interface directly with native APIs. In this article, we are going to build a camera preview Capacitor plugin using Dynamsoft Camera Enhancer.

Build a Camera Preview Capacitor Plugin

Let’s do this in steps.

New Plugin Project

In a new terminal, run the following:

npm init @capacitor/plugin

We will be prompted to input relevant project info.

√ What should be the npm package of your plugin?

... capacitor-plugin-dynamsoft-camera-preview

√ What directory should be used for your plugin?

... capacitor-plugin-dynamsoft-camera-preview

√ What should be the Package ID for your plugin?

Package IDs are unique identifiers used in apps and plugins. For plugins,

they're used as a Java namespace. They must be in reverse domain name

notation, generally representing a domain name that you or your company owns.

... com.dynamsoft.capacitor.dce

√ What should be the class name for your plugin?

... CameraPreview

√ What is the repository URL for your plugin?

... https://github.com/tony-xlh/capacitor-plugin-camera-preview

Create an Example Project

In order to test the plugin, we can create an example project.

Under the root of the plugin, create an example folder and start a webpack project.

git clone https://github.com/wbkd/webpack-starter

mv webpack-starter example # rename webpack-starter to example

Install the Capacitor plugin to the example project:

cd example

npm install ..

Then, we can run npm start to test the example project.

Next, we are going to implement the Web, Android and iOS parts of the plugin.

Web Implementation

Add Dynamsoft Camera Enhancer as a Dependency

npm install dynamsoft-camera-enhancer@3.1.0

Write Definitions

Define interfaces in src/definitions.ts. The CameraPreviewPlugin provides methods to initialize Dynamsoft Camera Enhancer and control the camera using it.

export interface CameraPreviewPlugin {

initialize(): Promise<void>;

getResolution(): Promise<{resolution: string}>;

setResolution(options: {resolution: number}): Promise<void>;

getAllCameras(): Promise<{cameras: string[]}>;

getSelectedCamera(): Promise<{selectedCamera: string}>;

selectCamera(options: {cameraID: string; }): Promise<void>;

setScanRegion(options: {region:ScanRegion}): Promise<void>;

setZoom(options: {factor: number}): Promise<void>;

setFocus(options: {x: number, y: number}): Promise<void>;

/**

* Web Only

*/

setDefaultUIElementURL(url:string): Promise<void>;

startCamera(): Promise<void>;

stopCamera(): Promise<void>;

pauseCamera(): Promise<void>;

resumeCamera(): Promise<void>;

/**

* take a snapshot as base64.

*/

takeSnapshot(options:{quality?:number}): Promise<{base64:string}>;

/**

* take a snapshot as DCEFrame. Web Only

*/

takeSnapshot2(): Promise<{frame:DCEFrame}>;

takePhoto(): Promise<{base64:string}>;

toggleTorch(options: {on: boolean}): Promise<void>;

requestCameraPermission(): Promise<void>;

isOpen():Promise<{isOpen:boolean}>;

addListener(

eventName: 'onPlayed',

listenerFunc: onPlayedListener,

): Promise<PluginListenerHandle> & PluginListenerHandle;

}

/**

* measuredByPercentage: 0 in pixel, 1 in percent

*/

export interface ScanRegion{

left: number;

top: number;

right: number;

bottom: number;

measuredByPercentage: number;

}

export enum EnumResolution {

RESOLUTION_AUTO = 0,

RESOLUTION_480P = 1,

RESOLUTION_720P = 2,

RESOLUTION_1080P = 3,

RESOLUTION_2K = 4,

RESOLUTION_4K = 5

}
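Note that getResolution returns the resolution as a string, while setResolution takes an EnumResolution value. The implementations later in this article build that string as the width, an x, and the height. A caller that needs numeric dimensions therefore has to parse it. Below is a minimal sketch under that assumption; parseResolution and Dimensions are hypothetical names and not part of the plugin.

```typescript
// Hypothetical helper (not part of the plugin): parse a "1280x720" style string.
interface Dimensions {
  width: number;
  height: number;
}

function parseResolution(resolution: string): Dimensions | undefined {
  // Expect exactly two non-negative integers separated by a lowercase "x".
  const match = /^(\d+)x(\d+)$/.exec(resolution.trim());
  if (!match) {
    return undefined; // unknown format: let the caller decide what to do
  }
  return { width: Number(match[1]), height: Number(match[2]) };
}
```

Returning undefined instead of throwing keeps the helper safe to call from an event listener.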

Implement the Interfaces

-

Initialization.

The initialize method creates an instance of Dynamsoft Camera Enhancer, listens for the played event (triggered when the camera is started), and sets up its UI elements. The setDefaultUIElementURL method belongs to the same class.

CameraEnhancer.defaultUIElementURL = "https://cdn.jsdelivr.net/npm/dynamsoft-camera-enhancer@3.1.0/dist/dce.ui.html";

export class CameraPreviewWeb extends WebPlugin implements CameraPreviewPlugin {
  private camera: CameraEnhancer | undefined;

  async initialize(): Promise<void> {
    this.camera = await CameraEnhancer.createInstance();
    // Forward the "played" event to plugin listeners as onPlayed.
    this.camera.on("played", (playCallBackInfo: PlayCallbackInfo) => {
      this.notifyListeners("onPlayed", {resolution: playCallBackInfo.width + "x" + playCallBackInfo.height});
    });
    await this.camera.setUIElement(CameraEnhancer.defaultUIElementURL);
    // Remove the default controls that the plugin does not use.
    this.camera.getUIElement().getElementsByClassName("dce-btn-close")[0].remove();
    this.camera.getUIElement().getElementsByClassName("dce-sel-camera")[0].remove();
    this.camera.getUIElement().getElementsByClassName("dce-sel-resolution")[0].remove();
    this.camera.getUIElement().getElementsByClassName("dce-msg-poweredby")[0].remove();
  }

  async setDefaultUIElementURL(url: string): Promise<void> {
    CameraEnhancer.defaultUIElementURL = url;
  }
}

-

Getting and setting the resolution.

async getResolution(): Promise<{ resolution: string; }> {
  if (this.camera) {
    let rsl = this.camera.getResolution();
    let resolution: string = rsl[0] + "x" + rsl[1];
    return {resolution: resolution};
  } else {
    throw new Error('DCE not initialized');
  }
}

async setResolution(options: { resolution: number; }): Promise<void> {
  if (this.camera) {
    let res = options.resolution;
    let width = 1280; // default resolution
    let height = 720;
    if (res == EnumResolution.RESOLUTION_480P) {
      width = 640;
      height = 480;
    } else if (res == EnumResolution.RESOLUTION_720P) {
      width = 1280;
      height = 720;
    } else if (res == EnumResolution.RESOLUTION_1080P) {
      width = 1920;
      height = 1080;
    } else if (res == EnumResolution.RESOLUTION_2K) {
      width = 2560;
      height = 1440;
    } else if (res == EnumResolution.RESOLUTION_4K) {
      width = 3840;
      height = 2160;
    }
    await this.camera.setResolution(width, height);
    return;
  } else {
    throw new Error('DCE not initialized');
  }
}

-

Getting all cameras, getting the selected camera and selecting a camera.

async getAllCameras(): Promise<{ cameras: string[]; }> {
  if (this.camera) {
    let cameras = await this.camera.getAllCameras();
    let labels: string[] = [];
    cameras.forEach(camera => {
      labels.push(camera.label);
    });
    return {cameras: labels};
  } else {
    throw new Error('DCE not initialized');
  }
}

async getSelectedCamera(): Promise<{ selectedCamera: string; }> {
  if (this.camera) {
    let cameraInfo = this.camera.getSelectedCamera();
    return {selectedCamera: cameraInfo.label};
  } else {
    throw new Error('DCE not initialized');
  }
}

async selectCamera(options: { cameraID: string; }): Promise<void> {
  if (this.camera) {
    let cameras = await this.camera.getAllCameras();
    // Cameras are identified by their label on the web.
    for (let index = 0; index < cameras.length; index++) {
      const camera = cameras[index];
      if (camera.label === options.cameraID) {
        await this.camera.selectCamera(camera);
        return;
      }
    }
  } else {
    throw new Error('DCE not initialized');
  }
}

-

Setting a scan region. The camera frame will be cropped according to the region.

async setScanRegion(options: { region: ScanRegion; }): Promise<void> {
  if (this.camera) {
    this.camera.setScanRegion({
      regionLeft: options.region.left,
      regionTop: options.region.top,
      regionRight: options.region.right,
      regionBottom: options.region.bottom,
      regionMeasuredByPercentage: options.region.measuredByPercentage
    });
  } else {
    throw new Error('DCE not initialized');
  }
}

-

Setting zoom and focus.

async setZoom(options: { factor: number; }): Promise<void> {
  if (this.camera) {
    await this.camera.setZoom(options.factor);
    return;
  } else {
    throw new Error('DCE not initialized');
  }
}

async setFocus(): Promise<void> {
  throw new Error('Method not implemented.'); // not supported on Web
}

-

Using the torch.

async toggleTorch(options: { on: boolean; }): Promise<void> {
  if (this.camera) {
    try {
      if (options["on"]) {
        await this.camera.turnOnTorch();
      } else {
        await this.camera.turnOffTorch();
      }
    } catch (e) {
      throw new Error("Torch unsupported");
    }
  }
}

-

Opening/Closing/Resuming/Pausing the camera.

async startCamera(): Promise<void> {
  if (this.camera) {
    await this.camera.open(true);
  } else {
    throw new Error('DCE not initialized');
  }
}

async stopCamera(): Promise<void> {
  if (this.camera) {
    this.camera.close(true);
  } else {
    throw new Error('DCE not initialized');
  }
}

async pauseCamera(): Promise<void> {
  if (this.camera) {
    this.camera.pause();
  } else {
    throw new Error('DCE not initialized');
  }
}

async resumeCamera(): Promise<void> {
  if (this.camera) {
    this.camera.resume();
  } else {
    throw new Error('DCE not initialized');
  }
}

-

Taking a snapshot.

The takeSnapshot method takes a snapshot of the camera preview as base64.

The takeSnapshot2 method takes a snapshot of the camera preview as a DCEFrame, which is only supported on Web.

async takeSnapshot(options: {quality?: number}): Promise<{ base64: string; }> {
  if (this.camera) {
    let desiredQuality = 85;
    if (options.quality) {
      desiredQuality = options.quality;
    }
    // canvas.toDataURL expects a quality between 0 and 1, so scale the 0-100 value.
    let dataURL = this.camera.getFrame().toCanvas().toDataURL('image/jpeg', desiredQuality / 100);
    let base64 = dataURL.replace("data:image/jpeg;base64,", "");
    return {base64: base64};
  } else {
    throw new Error('DCE not initialized');
  }
}

async takeSnapshot2(): Promise<{ frame: DCEFrame; }> {
  if (this.camera) {
    let frame = this.camera.getFrame();
    return {frame: frame};
  } else {
    throw new Error('DCE not initialized');
  }
}

-

Requesting camera permission.

Call getUserMedia so that the browser will ask for camera permission.

async requestCameraPermission(): Promise<void> {
  const constraints = {video: true, audio: false};
  const stream = await navigator.mediaDevices.getUserMedia(constraints);
  const tracks = stream.getTracks();
  for (let i = 0; i < tracks.length; i++) {
    const track = tracks[i];
    track.stop(); // stop the opened camera
  }
}
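The if/else chain in the web setResolution above maps each EnumResolution value to a pixel size. The same mapping can be expressed as a lookup table, which keeps the sizes in one place. This is only a sketch: the sizes and the 1280x720 default are copied from the code above, while RESOLUTION_SIZES, DEFAULT_SIZE and sizeForResolution are hypothetical names that do not exist in the plugin.

```typescript
// Mirrors the EnumResolution values from src/definitions.ts.
enum EnumResolution {
  RESOLUTION_AUTO = 0,
  RESOLUTION_480P = 1,
  RESOLUTION_720P = 2,
  RESOLUTION_1080P = 3,
  RESOLUTION_2K = 4,
  RESOLUTION_4K = 5,
}

type Size = { width: number; height: number };

// Pixel sizes taken from the if/else chain in setResolution.
const RESOLUTION_SIZES: Partial<Record<EnumResolution, Size>> = {
  [EnumResolution.RESOLUTION_480P]: { width: 640, height: 480 },
  [EnumResolution.RESOLUTION_720P]: { width: 1280, height: 720 },
  [EnumResolution.RESOLUTION_1080P]: { width: 1920, height: 1080 },
  [EnumResolution.RESOLUTION_2K]: { width: 2560, height: 1440 },
  [EnumResolution.RESOLUTION_4K]: { width: 3840, height: 2160 },
};

// Same fallback as the original code: anything else becomes 1280x720.
const DEFAULT_SIZE: Size = { width: 1280, height: 720 };

// Hypothetical helper: resolve an enum value (or any number) to a size.
function sizeForResolution(res: number): Size {
  return RESOLUTION_SIZES[res as EnumResolution] ?? DEFAULT_SIZE;
}
```

With such a helper, setResolution would only need to look up the size and pass its width and height to the camera.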

Update the Example to Work as a Barcode Scanner

In the example project, we are going to use the plugin to start the camera and use the JavaScript edition of Dynamsoft Barcode Reader to read barcodes.

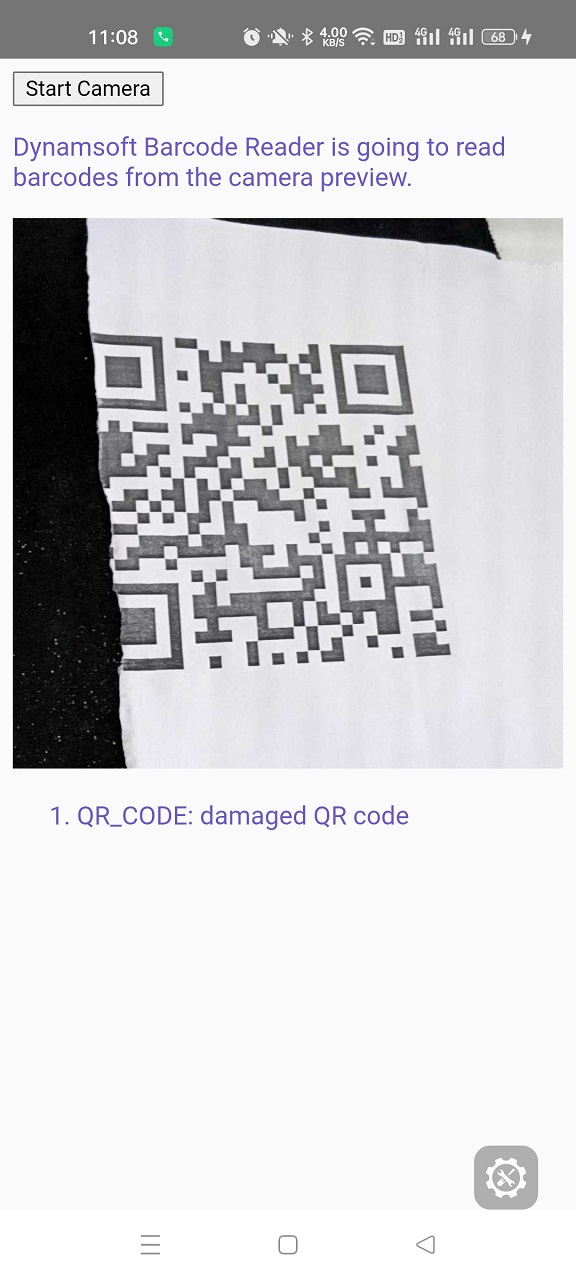

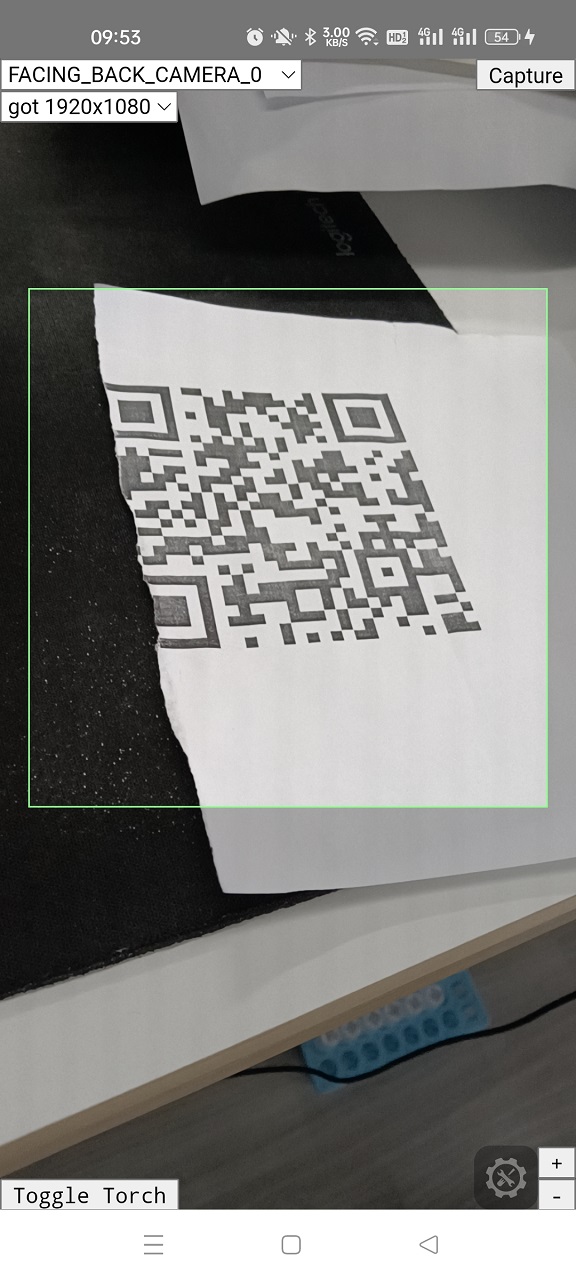

Here are the screenshots of the final result.

Key parts of the example’s code:

-

Initialization.

let onPlayedListener;
let reader;

async function initialize(){
  startBtn.innerText = "Initializing...";
  await CameraPreview.initialize();
  // Remove a previously registered listener before adding a new one.
  if (onPlayedListener) {
    await onPlayedListener.remove();
  }
  onPlayedListener = await CameraPreview.addListener('onPlayed', async (res) => {
    console.log(res);
    updateResolutionSelect(res.resolution);
    updateCameraSelect();
  });
  await CameraPreview.requestCameraPermission();
  await CameraPreview.setScanRegion({region:{left:10,top:20,right:90,bottom:65,measuredByPercentage:1}});
  await loadCameras();
  loadResolutions();
  await initDBR();
  startBtn.innerText = "Start Camera";
  startBtn.disabled = false;
}

async function initDBR() {
  BarcodeReader.engineResourcePath = "https://cdn.jsdelivr.net/npm/dynamsoft-javascript-barcode@9.3.0/dist/";
  BarcodeReader.license = "LICENSE-KEY";
  reader = await BarcodeReader.createInstance();
}

-

Starting the camera and setting up an interval to read barcodes from the camera frames.

let interval;
let decoding = false;

async function startCamera(){
  await CameraPreview.startCamera();
  startDecoding();
}

function startDecoding(){
  decoding = false;
  interval = setInterval(captureAndDecode, 100);
}

function stopDecoding(){
  clearInterval(interval);
  decoding = false;
}

async function captureAndDecode(){
  // reader stays undefined until initDBR has finished.
  if (!reader) {
    return;
  }
  // Skip this tick if the previous frame is still being decoded.
  if (decoding === true) {
    return;
  }
  let results = [];
  let frame;
  let base64;
  decoding = true;
  try {
    if (Capacitor.isNativePlatform()) {
      // Native platforms return the frame as base64.
      let result = await CameraPreview.takeSnapshot({quality:50});
      base64 = result.base64;
      results = await reader.decodeBase64String(base64);
    } else {
      // On the web, the DCEFrame can be decoded directly.
      let result = await CameraPreview.takeSnapshot2();
      frame = result.frame;
      results = await reader.decode(frame);
    }
  } catch (error) {
    console.log(error);
  }
  decoding = false;
  if (results.length > 0) {
    let dataURL;
    if (frame) {
      dataURL = frame.toCanvas().toDataURL('image/jpeg');
    }
    if (base64) {
      dataURL = "data:image/jpeg;base64," + base64;
    }
    stopDecoding();
    returnToHomeWithBarcodeResults(dataURL, results);
  }
}
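The decoding flag in captureAndDecode keeps the 100 ms interval from starting a new decode while the previous one is still running. The same idea can be factored into a small wrapper, sketched below. skipWhileBusy is a hypothetical name and not part of the example project; it also resets the flag in a finally block, so an exception in the task cannot leave the guard stuck.

```typescript
// Hypothetical helper: wrap an async task so that overlapping calls are dropped.
function skipWhileBusy<T>(task: () => Promise<T>): () => Promise<T | undefined> {
  let busy = false;
  return async () => {
    if (busy) {
      return undefined; // a previous call is still running; drop this tick
    }
    busy = true;
    try {
      return await task();
    } finally {
      busy = false; // always release the guard, even if the task throws
    }
  };
}
```

Under these assumptions, the interval could be started with setInterval(skipWhileBusy(captureAndDecode), 100), and captureAndDecode would no longer need to manage the flag itself.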

The example can now work as a barcode scanner in the browser. Next, we are going to implement the Android and iOS parts of the plugin and make the example work on Android and iOS as native apps as well.

Native Quirks

To display the web elements above the camera view, we have to put the camera view below the WebView. The camera view is set to fullscreen and the background color of the WebView is set to transparent.

To pass the data of camera preview to the WebView, we have to serialize it as base64.
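On the JavaScript side, the base64 payload usually has to be turned back into a data URL before it can be displayed, as the example does with the data:image/jpeg;base64, prefix. A minimal sketch of the two conversions follows; toJpegDataURL and stripJpegPrefix are hypothetical helper names. The whitespace removal is a precaution, since Android's Base64.DEFAULT flag inserts line breaks into its output.

```typescript
const JPEG_PREFIX = "data:image/jpeg;base64,";

// Hypothetical helper: build a data URL from a raw base64 JPEG payload.
function toJpegDataURL(base64: string): string {
  // Drop any line breaks or spaces the native encoder may have inserted.
  return JPEG_PREFIX + base64.replace(/\s/g, "");
}

// Hypothetical helper: recover the raw base64 payload from a JPEG data URL.
function stripJpegPrefix(dataURL: string): string {
  return dataURL.startsWith(JPEG_PREFIX)
    ? dataURL.slice(JPEG_PREFIX.length)
    : dataURL;
}
```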

Android Implementation

Add Dynamsoft Camera Enhancer as a Dependency

Open android/build.gradle to add the Dynamsoft Camera Enhancer dependency:

rootProject.allprojects {

repositories {

maven {

url "https://download2.dynamsoft.com/maven/aar"

}

}

}

dependencies {

def camerax_version = '1.1.0'

implementation "androidx.camera:camera-core:$camerax_version"

implementation "androidx.camera:camera-camera2:$camerax_version"

implementation "androidx.camera:camera-lifecycle:$camerax_version"

implementation "androidx.camera:camera-view:$camerax_version"

implementation 'com.dynamsoft:dynamsoftcameraenhancer:3.0.1'

}

Implementation

Open CameraPreviewPlugin.java to implement the plugin.

-

Initialization.

The initialize method creates an instance of Dynamsoft Camera Enhancer and adds the camera view below the WebView.

@PluginMethod
public void initialize(PluginCall call) {
    getActivity().runOnUiThread(new Runnable() {
        public void run() {
            mCameraEnhancer = new CameraEnhancer(getActivity());
            mCameraView = new DCECameraView(getActivity());
            mCameraEnhancer.setCameraView(mCameraView);
            FrameLayout.LayoutParams cameraPreviewParams = new FrameLayout.LayoutParams(
                    FrameLayout.LayoutParams.WRAP_CONTENT,
                    FrameLayout.LayoutParams.WRAP_CONTENT
            );
            // Add the camera view to the WebView's parent, then keep the WebView on top.
            ((ViewGroup) bridge.getWebView().getParent()).addView(mCameraView, cameraPreviewParams);
            bridge.getWebView().bringToFront();
            call.resolve();
        }
    });
}

-

Add a triggerOnPlayed method which will be called when the camera starts, the resolution changes or the camera is switched.

private void triggerOnPlayed() {
    Size res = mCameraEnhancer.getResolution();
    if (res != null) {
        JSObject onPlayedResult = new JSObject();
        onPlayedResult.put("resolution", res.getWidth() + "x" + res.getHeight());
        Log.d("DBR", "resolution:" + res.getWidth() + "x" + res.getHeight());
        notifyListeners("onPlayed", onPlayedResult);
    }
}

-

Getting and setting the resolution.

@PluginMethod
public void setResolution(PluginCall call) {
    if (call.hasOption("resolution")) {
        try {
            Runnable setResolutionRunnable = new Runnable() {
                public void run() {
                    try {
                        mCameraEnhancer.setResolution(EnumResolution.fromValue(call.getInt("resolution")));
                    } catch (CameraEnhancerException e) {
                        e.printStackTrace();
                    }
                    // Wake up the plugin thread waiting below.
                    synchronized (this) {
                        this.notify();
                    }
                }
            };
            // Run on the UI thread and wait until the resolution has been applied.
            synchronized (setResolutionRunnable) {
                getActivity().runOnUiThread(setResolutionRunnable);
                setResolutionRunnable.wait();
            }
            triggerOnPlayed();
        } catch (InterruptedException e) {
            e.printStackTrace();
            call.reject(e.getMessage());
            return; // do not resolve a call that has already been rejected
        }
    }
    JSObject result = new JSObject();
    result.put("success", true);
    call.resolve(result);
}

@PluginMethod
public void getResolution(PluginCall call) {
    if (mCameraEnhancer == null) {
        call.reject("DCE not initialized");
    } else {
        String res = mCameraEnhancer.getResolution().getWidth() + "x" + mCameraEnhancer.getResolution().getHeight();
        JSObject result = new JSObject();
        result.put("resolution", res);
        call.resolve(result);
    }
}

-

Getting all cameras, getting the selected camera and selecting a camera.

@PluginMethod
public void getAllCameras(PluginCall call) {
    if (mCameraEnhancer == null) {
        call.reject("not initialized");
    } else {
        JSObject result = new JSObject();
        JSArray cameras = new JSArray();
        for (String camera : mCameraEnhancer.getAllCameras()) {
            cameras.put(camera);
        }
        result.put("cameras", cameras);
        call.resolve(result);
    }
}

@PluginMethod
public void getSelectedCamera(PluginCall call) {
    if (mCameraEnhancer == null) {
        call.reject("not initialized");
    } else {
        JSObject result = new JSObject();
        result.put("selectedCamera", mCameraEnhancer.getSelectedCamera());
        call.resolve(result);
    }
}

@PluginMethod
public void selectCamera(PluginCall call) {
    if (call.hasOption("cameraID")) {
        try {
            Runnable selectCameraRunnable = new Runnable() {
                public void run() {
                    try {
                        mCameraEnhancer.selectCamera(call.getString("cameraID"));
                    } catch (CameraEnhancerException e) {
                        e.printStackTrace();
                    }
                    // Wake up the plugin thread waiting below.
                    synchronized (this) {
                        this.notify();
                    }
                }
            };
            // Run on the UI thread and wait until the camera has been switched.
            synchronized (selectCameraRunnable) {
                getActivity().runOnUiThread(selectCameraRunnable);
                selectCameraRunnable.wait();
            }
            triggerOnPlayed();
        } catch (InterruptedException e) {
            e.printStackTrace();
            call.reject(e.getMessage());
            return; // do not resolve a call that has already been rejected
        }
    }
    JSObject result = new JSObject();
    result.put("success", true);
    call.resolve(result);
}

-

Setting a scan region.

@PluginMethod
public void setScanRegion(PluginCall call) {
    if (mCameraEnhancer != null) {
        getActivity().runOnUiThread(new Runnable() {
            public void run() {
                try {
                    JSObject region = call.getObject("region");
                    com.dynamsoft.core.RegionDefinition scanRegion = new com.dynamsoft.core.RegionDefinition();
                    scanRegion.regionTop = region.getInt("top");
                    scanRegion.regionBottom = region.getInt("bottom");
                    scanRegion.regionLeft = region.getInt("left");
                    scanRegion.regionRight = region.getInt("right");
                    scanRegion.regionMeasuredByPercentage = region.getInt("measuredByPercentage");
                    mCameraEnhancer.setScanRegion(scanRegion);
                    call.resolve();
                } catch (Exception e) {
                    e.printStackTrace();
                    call.reject(e.getMessage());
                }
            }
        });
    } else {
        call.reject("DCE not initialized");
    }
}

-

Setting zoom and focus.

@PluginMethod
public void setZoom(PluginCall call) {
    if (call.hasOption("factor")) {
        Float factor = call.getFloat("factor");
        try {
            mCameraEnhancer.setZoom(factor);
        } catch (CameraEnhancerException e) {
            e.printStackTrace();
            call.reject(e.getMessage());
            return; // do not resolve a call that has already been rejected
        }
    }
    call.resolve();
}

@PluginMethod
public void setFocus(PluginCall call) {
    if (call.hasOption("x") && call.hasOption("y")) {
        Float x = call.getFloat("x");
        Float y = call.getFloat("y");
        try {
            mCameraEnhancer.setFocus(x, y);
        } catch (CameraEnhancerException e) {
            e.printStackTrace();
            call.reject(e.getMessage());
            return; // do not resolve a call that has already been rejected
        }
    }
    call.resolve();
}

-

Using the torch.

@PluginMethod
public void toggleTorch(PluginCall call) {
    try {
        if (call.getBoolean("on", true)) {
            mCameraEnhancer.turnOnTorch();
        } else {
            mCameraEnhancer.turnOffTorch();
        }
        call.resolve();
    } catch (Exception e) {
        call.reject(e.getMessage());
    }
}

-

Opening/Closing/Resuming/Pausing the camera. The method names must match those declared in src/definitions.ts (pauseCamera and resumeCamera).

@PluginMethod
public void startCamera(PluginCall call) {
    getActivity().runOnUiThread(new Runnable() {
        public void run() {
            try {
                mCameraView.setVisibility(View.VISIBLE);
                mCameraEnhancer.open();
                makeWebViewTransparent();
                triggerOnPlayed();
                call.resolve();
            } catch (CameraEnhancerException e) {
                e.printStackTrace();
                call.reject(e.getMessage());
            }
        }
    });
}

@PluginMethod
public void stopCamera(PluginCall call) {
    try {
        restoreWebViewBackground();
        mCameraView.setVisibility(View.INVISIBLE);
        mCameraEnhancer.close();
        call.resolve();
    } catch (Exception e) {
        call.reject(e.getMessage());
    }
}

@PluginMethod
public void pauseCamera(PluginCall call) {
    try {
        mCameraEnhancer.pause();
        call.resolve();
    } catch (Exception e) {
        call.reject(e.getMessage());
    }
}

@PluginMethod
public void resumeCamera(PluginCall call) {
    try {
        mCameraEnhancer.resume();
        call.resolve();
    } catch (Exception e) {
        call.reject(e.getMessage());
    }
}

private void makeWebViewTransparent() {
    // Remember the original background so that it can be restored later.
    bridge.getWebView().setTag(bridge.getWebView().getBackground());
    bridge.getWebView().setBackgroundColor(Color.TRANSPARENT);
}

private void restoreWebViewBackground() {
    bridge.getWebView().setBackground((Drawable) bridge.getWebView().getTag());
}

-

Taking a snapshot.

@PluginMethod
public void takeSnapshot(PluginCall call) {
    try {
        if (mCameraEnhancer.getCameraState() == EnumCameraState.OPENED) {
            int desiredQuality = 85;
            if (call.hasOption("quality")) {
                desiredQuality = call.getInt("quality");
            }
            Bitmap bitmap = mCameraEnhancer.getFrameFromBuffer(true).toBitmap();
            String base64 = bitmap2Base64(bitmap, desiredQuality);
            JSObject result = new JSObject();
            result.put("base64", base64);
            call.resolve(result);
        } else {
            call.reject("camera is not open");
        }
    } catch (Exception e) {
        e.printStackTrace();
        call.reject(e.getMessage());
    }
}

public static String bitmap2Base64(Bitmap bitmap, int quality) {
    // Compress the bitmap as JPEG, then encode the bytes as base64.
    ByteArrayOutputStream outputStream = new ByteArrayOutputStream();
    bitmap.compress(Bitmap.CompressFormat.JPEG, quality, outputStream);
    return Base64.encodeToString(outputStream.toByteArray(), Base64.DEFAULT);
}

-

Requesting camera permission.

Update the Plugin annotation to add the camera permission alias. The alias refers to a String constant named CAMERA declared in the plugin class.

 @CapacitorPlugin(
     name = "CameraPreview",
+    permissions = {
+        @Permission(strings = { Manifest.permission.CAMERA }, alias = CameraPreviewPlugin.CAMERA),
+    }
 )

Request camera permission if it has not been granted.

@PluginMethod
public void requestCameraPermission(PluginCall call) {
    boolean hasCameraPerms = getPermissionState(CAMERA) == PermissionState.GRANTED;
    if (hasCameraPerms == false) {
        String[] aliases = new String[] { CAMERA };
        requestPermissionForAliases(aliases, call, "cameraPermissionsCallback");
    } else {
        call.resolve();
    }
}

@PermissionCallback
private void cameraPermissionsCallback(PluginCall call) {
    boolean hasCameraPerms = getPermissionState(CAMERA) == PermissionState.GRANTED;
    if (hasCameraPerms) {
        call.resolve();
    } else {
        call.reject("Permission not granted.");
    }
}

-

Handling lifecycle events.

@Override
protected void handleOnPause() {
    if (mCameraEnhancer != null) {
        try {
            // Remember the state so that the camera can be reopened on resume.
            previousCameraStatus = mCameraEnhancer.getCameraState();
            mCameraEnhancer.close();
        } catch (CameraEnhancerException e) {
            e.printStackTrace();
        }
    }
    super.handleOnPause();
}

@Override
protected void handleOnResume() {
    if (mCameraEnhancer != null) {
        try {
            if (previousCameraStatus == EnumCameraState.OPENED) {
                mCameraEnhancer.open();
            }
        } catch (CameraEnhancerException e) {
            e.printStackTrace();
        }
    }
    super.handleOnResume();
}
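One detail of the Android code above is worth keeping in mind on the JavaScript side: setScanRegion reads each field of the region with getInt, so the native layer works with whole numbers. A caller can round and sanity-check the region before passing it to the plugin. The sketch below is only an illustration; toIntegerRegion is a hypothetical helper, and the 0 to 100 range check assumes that percentage regions are expressed as in the example (for instance left 10, right 90).

```typescript
// Same shape as the ScanRegion interface in src/definitions.ts.
interface ScanRegion {
  left: number;
  top: number;
  right: number;
  bottom: number;
  measuredByPercentage: number;
}

// Hypothetical helper: round a region to integers and reject obviously invalid input.
function toIntegerRegion(region: ScanRegion): ScanRegion {
  const rounded: ScanRegion = {
    left: Math.round(region.left),
    top: Math.round(region.top),
    right: Math.round(region.right),
    bottom: Math.round(region.bottom),
    measuredByPercentage: region.measuredByPercentage === 1 ? 1 : 0,
  };
  if (rounded.left >= rounded.right || rounded.top >= rounded.bottom) {
    throw new RangeError("Scan region must have left < right and top < bottom");
  }
  if (rounded.measuredByPercentage === 1) {
    const edges = [rounded.left, rounded.top, rounded.right, rounded.bottom];
    if (edges.some((value) => value < 0 || value > 100)) {
      throw new RangeError("Percentage values must be between 0 and 100");
    }
  }
  return rounded;
}
```

The result could then be passed as the region option of CameraPreview.setScanRegion.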

After the implementation, we can update the example to run as an Android app with the following steps:

-

Add Capacitor to the example:

npm install @capacitor/cli @capacitor/core
npx cap init

-

Add the Android project.

npm install @capacitor/android
npx cap add android

-

Build the web app and sync it to the Android project.

npm run build
npx cap sync

-

Run the app.

npx cap run android

iOS Implementation

Add Dynamsoft Camera Enhancer as a Dependency

Open CapacitorPluginDynamsoftCameraPreview.podspec to add the Dynamsoft Camera Enhancer dependency:

s.dependency 'DynamsoftCameraEnhancer', '= 3.0.1'

Write Definitions

Open CameraPreviewPlugin.m to define the methods.

CAP_PLUGIN(CameraPreviewPlugin, "CameraPreview",

CAP_PLUGIN_METHOD(initialize, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(getResolution, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(setResolution, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(getAllCameras, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(getSelectedCamera, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(selectCamera, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(setScanRegion, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(setZoom, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(setFocus, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(startCamera, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(stopCamera, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(pauseCamera, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(resumeCamera, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(takeSnapshot, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(takePhoto, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(toggleTorch, CAPPluginReturnPromise);

CAP_PLUGIN_METHOD(requestCameraPermission, CAPPluginReturnPromise);

)

Implementation

Open CameraPreviewPlugin.swift to implement the plugin.

-

Initialization.

The initialize method creates an instance of Dynamsoft Camera Enhancer and adds the camera view below the WebView.

@objc(CameraPreviewPlugin)
public class CameraPreviewPlugin: CAPPlugin {
    var dce:DynamsoftCameraEnhancer! = nil
    var dceView:DCECameraView! = nil

    @objc func initialize(_ call: CAPPluginCall) {
        // Initialize a camera view for previewing video.
        DispatchQueue.main.sync {
            dceView = DCECameraView.init(frame: (bridge?.viewController?.view.bounds)!)
            self.webView!.superview!.insertSubview(dceView, belowSubview: self.webView!)
            dce = DynamsoftCameraEnhancer.init(view: dceView)
            dce.setResolution(EnumResolution.EnumRESOLUTION_720P)
        }
        call.resolve()
    }
}

-

Add a triggerOnPlayed method which will be called when the camera starts, the resolution changes or the camera is switched.

@objc func triggerOnPlayed() {
    if (dce != nil) {
        var ret = PluginCallResultData()
        let res = dce.getResolution()
        ret["resolution"] = res
        print("trigger on played")
        notifyListeners("onPlayed", data: ret)
    }
}

-

Getting and setting the resolution.

@objc func setResolution(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        let res = call.getInt("resolution") ?? -1
        if res != -1 {
            let resolution = EnumResolution.init(rawValue: res)
            dce.setResolution(resolution!)
            triggerOnPlayed()
        }
        call.resolve()
    }
}

@objc func getResolution(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        var ret = PluginCallResultData()
        let res = dce.getResolution()
        ret["resolution"] = res
        call.resolve(ret)
    }
}

-

Getting all cameras, getting the selected camera and selecting a camera.

@objc func getAllCameras(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        var ret = PluginCallResultData()
        let array = NSMutableArray()
        array.addObjects(from: dce.getAllCameras())
        ret["cameras"] = array
        call.resolve(ret)
    }
}

@objc func getSelectedCamera(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        var ret = PluginCallResultData()
        ret["selectedCamera"] = dce.getSelectedCamera()
        call.resolve(ret)
    }
}

@objc func selectCamera(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        let cameraID = call.getString("cameraID") ?? ""
        if cameraID != "" {
            try? dce.selectCamera(cameraID)
            triggerOnPlayed()
        }
        call.resolve()
    }
}

-

Setting a scan region.

@objc func setScanRegion(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        let region = call.getObject("region")
        let scanRegion = iRegionDefinition()
        scanRegion.regionTop = region?["top"] as! Int
        scanRegion.regionBottom = region?["bottom"] as! Int
        scanRegion.regionLeft = region?["left"] as! Int
        scanRegion.regionRight = region?["right"] as! Int
        scanRegion.regionMeasuredByPercentage = region?["measuredByPercentage"] as! Int
        try? dce.setScanRegion(scanRegion)
        call.resolve()
    }
}

-

Setting zoom and focus.

@objc func setZoom(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        let factor:CGFloat = CGFloat(call.getFloat("factor") ?? 1.0)
        dce.setZoom(factor)
        call.resolve()
    }
}

@objc func setFocus(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        let x = call.getFloat("x", -1.0)
        let y = call.getFloat("y", -1.0)
        if x != -1.0 && y != -1.0 {
            dce.setFocus(CGPoint(x: CGFloat(x), y: CGFloat(y)))
        }
        call.resolve()
    }
}

-

Using the torch.

@objc func toggleTorch(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        if call.getBool("on", true){
            dce.turnOnTorch()
        } else{
            dce.turnOffTorch()
        }
        call.resolve()
    }
}

-

Opening/Closing/Resuming/Pausing the camera.

@objc func startCamera(_ call: CAPPluginCall) {
    makeWebViewTransparent()
    if dce != nil {
        DispatchQueue.main.sync {
            dce.open()
            triggerOnPlayed()
        }
    }else{
        call.reject("DCE not initialized")
        return
    }
    call.resolve()
}

@objc func stopCamera(_ call: CAPPluginCall) {
    restoreWebViewBackground()
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        dce.close()
        call.resolve()
    }
}

@objc func resumeCamera(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        dce.resume()
        call.resolve()
    }
}

@objc func pauseCamera(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        dce.pause()
        call.resolve()
    }
}

func makeWebViewTransparent(){
    DispatchQueue.main.async {
        self.bridge?.webView!.isOpaque = false
        self.bridge?.webView!.backgroundColor = UIColor.clear
        self.bridge?.webView!.scrollView.backgroundColor = UIColor.clear
    }
}

func restoreWebViewBackground(){
    DispatchQueue.main.async {
        self.bridge?.webView!.isOpaque = true
        self.bridge?.webView!.backgroundColor = UIColor.white
        self.bridge?.webView!.scrollView.backgroundColor = UIColor.white
    }
}

-

Taking a snapshot.

The frame is not rotated or cropped by default on iOS, so we have to do both manually. We also need to normalize the image to fix its orientation.

@objc func takeSnapshot(_ call: CAPPluginCall) {
    if (dce == nil){
        call.reject("DCE not initialized")
    }else{
        let quality = call.getInt("quality",85)
        let frame = dce.getFrameFromBuffer(true)
        var ret = PluginCallResultData()
        if let img = frame.toUIImage() {
            let cropped = croppedUIImage(image: img, region: dce.getScanRegion(),degree: frame.orientation)
            let rotated = rotatedUIImage(image: cropped, degree: frame.orientation)
            let normalized = normalizedImage(rotated)
            // jpegData expects a compression quality between 0.0 and 1.0, so scale the 0-100 value.
            let base64 = getBase64FromImage(image: normalized, quality: CGFloat(quality) / 100.0)
            ret["base64"] = base64
            call.resolve(ret)
        }else{
            call.reject("Failed to take a snapshot")
        }
    }
}

func rotatedUIImage(image:UIImage, degree: Int) -> UIImage {
    var rotatedImage = UIImage()
    switch degree {
    case 90:
        rotatedImage = UIImage(cgImage: image.cgImage!, scale: 1.0, orientation: .right)
    case 180:
        rotatedImage = UIImage(cgImage: image.cgImage!, scale: 1.0, orientation: .down)
    default:
        return image
    }
    return rotatedImage
}

func croppedUIImage(image:UIImage, region:iRegionDefinition, degree: Int) -> UIImage {
    let cgImage = image.cgImage
    let imgWidth = Double(cgImage!.width)
    let imgHeight = Double(cgImage!.height)
    // Convert the percentage-based region to fractions of the image size.
    var regionLeft = Double(region.regionLeft) / 100.0
    var regionTop = Double(region.regionTop) / 100.0
    var regionWidth = Double(region.regionRight - region.regionLeft) / 100.0
    var regionHeight = Double(region.regionBottom - region.regionTop) / 100.0
    if degree == 90 {
        // The frame has not been rotated yet, so swap the axes.
        let temp1 = regionLeft
        regionLeft = regionTop
        regionTop = temp1
        let temp2 = regionWidth
        regionWidth = regionHeight
        regionHeight = temp2
    }else if degree == 180 {
        regionTop = 1.0 - regionTop
    }
    let left:Double = regionLeft * imgWidth
    let top:Double = regionTop * imgHeight
    let width:Double = regionWidth * imgWidth
    let height:Double = regionHeight * imgHeight

    // The cropRect is the rect of the image to keep,
    // in this case centered
    let cropRect = CGRect(
        x: left,
        y: top,
        width: width,
        height: height
    ).integral

    let cropped = cgImage?.cropping(
        to: cropRect
    )!
    let croppedImage = UIImage(cgImage: cropped!)
    return croppedImage
}

func normalizedImage(_ image:UIImage) -> UIImage {
    if image.imageOrientation == UIImage.Orientation.up {
        return image
    }
    // Redraw the image so that its pixel data matches the "up" orientation.
    UIGraphicsBeginImageContextWithOptions(image.size, false, image.scale)
    image.draw(in: CGRect(x:0,y:0,width:image.size.width,height:image.size.height))
    let normalized = UIGraphicsGetImageFromCurrentImageContext()!
    UIGraphicsEndImageContext()
    return normalized
}

func getBase64FromImage(image:UIImage, quality: CGFloat) -> String{
    let dataTmp = image.jpegData(compressionQuality: quality)
    if let data = dataTmp {
        return data.base64EncodedString()
    }
    return ""
}
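The cropping arithmetic in croppedUIImage converts a percentage-based region into a pixel rectangle. For readers following along on the JavaScript side, here is a minimal TypeScript sketch of the unrotated case only (degree 0); it does not cover the 90 and 180 degree branches. The names PercentRegion, PixelRect and cropRectFor are hypothetical, and the rounding imitates what CGRect's integral property does: the origin is rounded down and the far edge is rounded up.

```typescript
// Region edges expressed as percentages (0-100) of the image size.
interface PercentRegion {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

interface PixelRect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Hypothetical helper: percentage region -> integral pixel rectangle (no rotation).
function cropRectFor(region: PercentRegion, imgWidth: number, imgHeight: number): PixelRect {
  // Multiply before dividing to keep whole-number results exact.
  const left = (region.left * imgWidth) / 100;
  const top = (region.top * imgHeight) / 100;
  const right = (region.right * imgWidth) / 100;
  const bottom = (region.bottom * imgHeight) / 100;
  // Round the origin down and the far edge up so the rect covers the whole region.
  const x = Math.floor(left);
  const y = Math.floor(top);
  return {
    x,
    y,
    width: Math.ceil(right) - x,
    height: Math.ceil(bottom) - y,
  };
}
```

For the region used in the example (left 10, top 20, right 90, bottom 65) and a 1280x720 frame, this yields a rectangle at (128, 144) that is 1024 pixels wide and 324 pixels high.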

After the implementation, we can update the example to run as an iOS app with the following steps:

-

Add the iOS project.

npm install @capacitor/ios
npx cap add ios

-

Build the web app and sync it to the iOS project.

npm run build
npx cap sync

-

Add camera permission by adding the following to Info.plist.

<key>NSCameraUsageDescription</key>
<string>For text scanning</string>

-

Run the app.

npx cap run ios