How to Take a Photo with Voice in JavaScript

When we hold a phone to take a photo, pressing the shutter button can shake the device and blur the image. There are other ways to trigger a capture while keeping the phone steady, such as pressing an earphone’s volume button, detecting a smile, or detecting voice activity.

In this article, we are going to talk about how to take a photo with voice. We will build a web app for demonstration.

The following APIs are used:

- getUserMedia to access the camera and the microphone

- Web Audio API to analyse the audio from the microphone

Online demos:

- Demo 1. A basic demo which displays the audio graph and the pitch.

- Demo 2. A document scanning demo based on Dynamsoft’s Mobile Web Capture solution.


Build a Web App to Take a Photo with Voice

Let’s do this in steps.

New HTML File

Create a new HTML file with the following content:

<!DOCTYPE html>

<html>

<head>

<title>Take a Photo with Your Voice</title>

<meta name="viewport" content="width=device-width,initial-scale=1.0,maximum-scale=1.0,user-scalable=0" />

<style>

#video {

height: 150px;

width: 300px;

}

#oscilloscope {

width: 150px;

height: 150px;

}

.output {

display: flex;

flex-wrap: wrap;

}

.visuals {

margin-left: 10px;

}

.photos img {

max-width: 150px;

padding: 5px;

}

</style>

</head>

<body>

<h2>Take a Photo with Your Voice</h2>

<label>

Camera:

<select id="select-camera"></select>

</label>

<label>

Microphone:

<select id="select-microphone"></select>

</label>

<label>

Resolution:

<select id="select-resolution">

<option value="640x480">640x480</option>

<option value="1280x720">1280x720</option>

<option value="1920x1080" selected>1920x1080</option>

<option value="3840x2160">3840x2160</option>

</select>

</label>

<button onclick="startCameraAndMicrophone();">Start</button>

<button onclick="stopCameraAndMicrophone();">Stop</button>

<button onclick="analyse();">Analyse</button>

<div class="output">

<div class="devices">

<div>

<video controls autoplay playsinline id="video"></video>

</div>

<div>

<audio autoplay controls id="audio"></audio>

</div>

</div>

<div class="visuals">

<canvas id="oscilloscope"></canvas>

<div id="note"></div>

</div>

</div>

<div class="photos"></div>

</body>

</html>

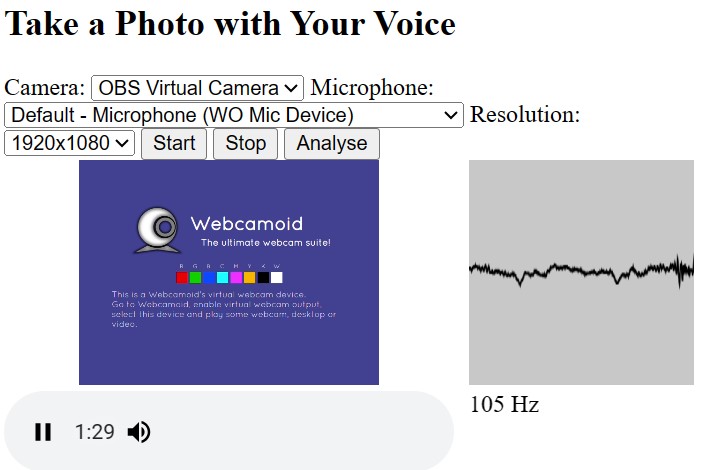

It can select which camera, which resolution and which microphone to use. It can draw the audio graph on a canvas and detect its pitch. If the pitch is over a threshold, take a photo.

Screenshot:

Request Permissions

Request camera permission:

async function requestCameraPermission() {

try {

const constraints = {video: true, audio: false};

const stream = await navigator.mediaDevices.getUserMedia(constraints);

closeStream(stream);

} catch (error) {

console.log(error);

throw error;

}

}

function closeStream(stream){

if (stream) {

const tracks = stream.getTracks();

for (let i=0;i<tracks.length;i++) {

const track = tracks[i];

track.stop(); // stop the opened tracks

}

}

}

Request microphone permission:

async function requestMicroPhonePermission() {

try {

const constraints = {video: false, audio: true};

const stream = await navigator.mediaDevices.getUserMedia(constraints);

closeStream(stream);

} catch (error) {

console.log(error);

throw error;

}

}
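Both functions rethrow the error, so the caller decides how to report a failure. As a minimal sketch, the helper below maps the `name` of the error rejected by `getUserMedia` to a message. `describeMediaError` is a hypothetical name that is not part of the demo, and the message texts are assumptions.

```javascript
// Hypothetical helper: turn a getUserMedia rejection into a readable message.
// The error names are those defined for getUserMedia; the texts are illustrative.
function describeMediaError(error) {
  switch (error && error.name) {
    case "NotAllowedError":
      return "Permission was denied. Please allow access and try again.";
    case "NotFoundError":
      return "No matching camera or microphone was found.";
    case "NotReadableError":
      return "The device is already in use or cannot be read.";
    case "OverconstrainedError":
      return "The device cannot satisfy the requested constraints.";
    default:
      return "Unexpected error: " + (error && error.message ? error.message : String(error));
  }
}

// Possible usage in the page (assumes the two functions above):
// try {
//   await requestCameraPermission();
//   await requestMicroPhonePermission();
// } catch (error) {
//   alert(describeMediaError(error));
// }
```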

List Devices

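List cameras (device labels are only available after the permission has been granted):

let cameras = []; // filled by listCameras()

async function listCameras(){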

let cameraSelect = document.getElementById("select-camera");

let allDevices = await navigator.mediaDevices.enumerateDevices();

for (let i = 0; i < allDevices.length; i++){

let device = allDevices[i];

if (device.kind == 'videoinput'){

cameras.push(device);

cameraSelect.appendChild(new Option(device.label,device.deviceId));

}

}

}

List microphones:

let microphones = []; // filled by listMicrophones()

async function listMicrophones(){

let microphoneSelect = document.getElementById("select-microphone");

let allDevices = await navigator.mediaDevices.enumerateDevices();

for (let i=0;i<allDevices.length;i++){

let device = allDevices[i];

if (device.kind == 'audioinput'){

microphones.push(device);

microphoneSelect.appendChild(new Option(device.label,device.deviceId));

}

}

}
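The two listing functions differ only in the device kind they filter on. A minimal sketch of the same filtering as a pure function is shown below. `groupDevices` and `displayName` are hypothetical helpers, and the fallback names such as "Camera 1" are an assumption for the case where a label is empty.

```javascript
// Hypothetical helper: split an enumerateDevices() result into cameras and microphones.
function groupDevices(allDevices) {
  const grouped = { cameras: [], microphones: [] };
  for (const device of allDevices) {
    if (device.kind === "videoinput") {
      grouped.cameras.push(device);
    } else if (device.kind === "audioinput") {
      grouped.microphones.push(device);
    }
  }
  return grouped;
}

// Hypothetical helper: give an unlabeled device a readable fallback name.
function displayName(device, index) {
  const prefix = device.kind === "videoinput" ? "Camera" : "Microphone";
  return device.label || prefix + " " + (index + 1);
}

// Possible usage in the page:
// const { cameras, microphones } = groupDevices(await navigator.mediaDevices.enumerateDevices());
// cameras.forEach((device, i) => cameraSelect.appendChild(new Option(displayName(device, i), device.deviceId)));
```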

Open the Camera and the Microphone

Open the camera and the microphone with the following code:

async function startCameraAndMicrophone(){

let selectedCamera = cameras[document.getElementById("select-camera").selectedIndex];

closeStream(document.getElementById("video").srcObject);

let selectedResolution = document.getElementById("select-resolution").selectedOptions[0].value;

let width = parseInt(selectedResolution.split("x")[0]);

let height = parseInt(selectedResolution.split("x")[1]);

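// Pass the device ID string (not the device object) and apply the selected resolution

const videoConstraints = {video: {deviceId: selectedCamera.deviceId, width: {ideal: width}, height: {ideal: height}}, audio: false};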

const cameraStream = await navigator.mediaDevices.getUserMedia(videoConstraints);

document.getElementById("video").srcObject = cameraStream;

let selectedMicrophone = microphones[document.getElementById("select-microphone").selectedIndex];

closeStream(document.getElementById("audio").srcObject);

const audioConstraints = {video: false, audio: {deviceId: selectedMicrophone.deviceId}};

const audioStream = await navigator.mediaDevices.getUserMedia(audioConstraints);

document.getElementById("audio").srcObject = audioStream;

}

We can stop them with the following code:

function stopCameraAndMicrophone(){

closeStream(document.getElementById("video").srcObject);

closeStream(document.getElementById("audio").srcObject);

document.getElementById("video").srcObject = null;

document.getElementById("audio").srcObject = null;

}
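The constraints object passed to `getUserMedia` decides which camera is opened and at which resolution. The sketch below builds it from the values of the two select elements. `parseResolution` and `buildVideoConstraints` are hypothetical helpers, and using `exact` for the device and `ideal` for the size is an assumption, not necessarily what the demo does.

```javascript
// Hypothetical helper: turn "1920x1080" into { width: 1920, height: 1080 }.
function parseResolution(value) {
  const [width, height] = value.split("x").map((part) => parseInt(part, 10));
  return { width, height };
}

// Hypothetical helper: build getUserMedia constraints for one camera.
// "exact" makes the request fail instead of silently falling back to another camera;
// "ideal" lets the browser pick the closest resolution the camera supports.
function buildVideoConstraints(deviceId, resolutionValue) {
  const { width, height } = parseResolution(resolutionValue);
  return {
    video: {
      deviceId: { exact: deviceId },
      width: { ideal: width },
      height: { ideal: height },
    },
    audio: false,
  };
}

// Possible usage in the page:
// const constraints = buildVideoConstraints(selectedCamera.deviceId, "1920x1080");
// const cameraStream = await navigator.mediaDevices.getUserMedia(constraints);
```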

Detect Pitch

Next, let’s use the Web Audio API to detect the pitch, which tells us whether there is voice activity.

- Create an analyser.

      let audioCtx = new (window.AudioContext || window.webkitAudioContext)();
      let analyser = audioCtx.createAnalyser();
      analyser.fftSize = 2048;

- Connect the audio to the analyser.

      let stream = document.getElementById("audio").srcObject;
      let source = audioCtx.createMediaStreamSource(stream);
      source.connect(analyser);

- We can visualize the audio by drawing its graph on a canvas.

      let bufferLength = analyser.frequencyBinCount;
      let dataArray = new Uint8Array(bufferLength);
      analyser.getByteTimeDomainData(dataArray);
      let canvas = document.getElementById("oscilloscope");
      let canvasCtx = canvas.getContext("2d");

      function draw() {
        if (!document.getElementById("audio").srcObject){
          return;
        }
        drawVisual = requestAnimationFrame(draw);
        analyser.getByteTimeDomainData(dataArray);
        canvasCtx.fillStyle = "rgb(200, 200, 200)";
        canvasCtx.fillRect(0, 0, canvas.width, canvas.height);
        canvasCtx.lineWidth = 2;
        canvasCtx.strokeStyle = "rgb(0, 0, 0)";
        canvasCtx.beginPath();
        let sliceWidth = (canvas.width * 1.0) / bufferLength;
        let x = 0;
        for (let i = 0; i < bufferLength; i++) {
          let v = dataArray[i] / 128.0;
          let y = (v * canvas.height) / 2;
          if (i === 0) {
            canvasCtx.moveTo(x, y);
          } else {
            canvasCtx.lineTo(x, y);
          }
          x += sliceWidth;
        }
        canvasCtx.lineTo(canvas.width, canvas.height / 2);
        canvasCtx.stroke();
      }

      draw();

- We can detect the pitch while drawing the graph. The result is smoothed: a value is only accepted after several consecutive readings stay close to each other.

      let previousValueToDisplay = 0;
      let smoothingCount = 0;
      let smoothingThreshold = 10;
      let smoothingCountThreshold = 5;

      let calculatePitch = function() {
        console.log("calculatePitch");
        if (!document.getElementById("audio").srcObject){
          return;
        }
        let bufferLength = analyser.fftSize;
        let buffer = new Float32Array(bufferLength);
        analyser.getFloatTimeDomainData(buffer);
        let autoCorrelateValue = autoCorrelate(buffer, audioCtx.sampleRate);
        if (autoCorrelateValue === -1) {
          document.getElementById('note').innerText = 'Too quiet...';
          return;
        }

        // Handle rounding
        let valueToDisplay = autoCorrelateValue;
        valueToDisplay = Math.round(valueToDisplay);

        function voiceIsSimilarEnough() {
          // Check threshold for number
          let diff = Math.abs(valueToDisplay - previousValueToDisplay);
          return diff < smoothingThreshold;
        }

        // Check if this value has been within the given range for n iterations
        if (voiceIsSimilarEnough()) {
          if (smoothingCount < smoothingCountThreshold) {
            smoothingCount++;
            return;
          } else {
            previousValueToDisplay = valueToDisplay;
            smoothingCount = 0;
          }
        } else {
          previousValueToDisplay = valueToDisplay;
          smoothingCount = 0;
          return;
        }
        valueToDisplay += ' Hz';
        document.getElementById('note').innerText = valueToDisplay;
      }

  Here, we use the following function to get the pitch from the audio data with autocorrelation.

      // Must be called on analyser.getFloatTimeDomainData and audioContext.sampleRate
      // From https://github.com/cwilso/PitchDetect/pull/23
      function autoCorrelate(buffer, sampleRate) {
        // Perform a quick root-mean-square to see if we have enough signal
        let SIZE = buffer.length;
        let sumOfSquares = 0;
        for (let i = 0; i < SIZE; i++) {
          let val = buffer[i];
          sumOfSquares += val * val;
        }
        let rootMeanSquare = Math.sqrt(sumOfSquares / SIZE);
        if (rootMeanSquare < 0.01) {
          return -1;
        }

        // Find a range in the buffer where the values are below a given threshold.
        let r1 = 0;
        let r2 = SIZE - 1;
        let threshold = 0.2;

        // Walk up for r1
        for (let i = 0; i < SIZE / 2; i++) {
          if (Math.abs(buffer[i]) < threshold) {
            r1 = i;
            break;
          }
        }

        // Walk down for r2
        for (let i = 1; i < SIZE / 2; i++) {
          if (Math.abs(buffer[SIZE - i]) < threshold) {
            r2 = SIZE - i;
            break;
          }
        }

        // Trim the buffer to these ranges and update SIZE.
        buffer = buffer.slice(r1, r2);
        SIZE = buffer.length;

        // Create a new array of the sums of offsets to do the autocorrelation
        let c = new Array(SIZE).fill(0);
        // For each potential offset, calculate the sum of each buffer value times its offset value
        for (let i = 0; i < SIZE; i++) {
          for (let j = 0; j < SIZE - i; j++) {
            c[i] = c[i] + buffer[j] * buffer[j+i];
          }
        }

        // Find the last index where that value is greater than the next one (the dip)
        let d = 0;
        while (c[d] > c[d+1]) {
          d++;
        }

        // Iterate from that index through the end and find the maximum sum
        let maxValue = -1;
        let maxIndex = -1;
        for (let i = d; i < SIZE; i++) {
          if (c[i] > maxValue) {
            maxValue = c[i];
            maxIndex = i;
          }
        }

        let T0 = maxIndex;

        // Not as sure about this part, don't @ me
        // From the original author:
        // interpolation is parabolic interpolation. It helps with precision. We suppose that a parabola pass through the
        // three points that comprise the peak. 'a' and 'b' are the unknowns from the linear equation system and b/(2a) is
        // the "error" in the abscissa.
        // Well x1,x2,x3 should be y1,y2,y3 because they are the ordinates.
        let x1 = c[T0 - 1];
        let x2 = c[T0];
        let x3 = c[T0 + 1];

        let a = (x1 + x3 - 2 * x2) / 2;
        let b = (x3 - x1) / 2;
        if (a) {
          T0 = T0 - b / (2 * a);
        }

        return sampleRate/T0;
      }
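The smoothing step is easier to follow when it is separated from the DOM. The sketch below restates the same counter logic as a pure function that returns `true` only when a reading is accepted. `createPitchSmoother` is a hypothetical name, and the default values mirror `smoothingThreshold` and `smoothingCountThreshold` from the article.

```javascript
// Hypothetical restatement of the smoothing logic as a pure, stateful function.
function createPitchSmoother(smoothingThreshold = 10, smoothingCountThreshold = 5) {
  let previousValue = 0;
  let smoothingCount = 0;
  return function accept(value) {
    const similar = Math.abs(value - previousValue) < smoothingThreshold;
    if (similar && smoothingCount < smoothingCountThreshold) {
      // Close to the reference value, but not yet stable for long enough.
      smoothingCount++;
      return false;
    }
    // Either the pitch jumped (start over) or it has been stable long enough (accept).
    previousValue = value;
    smoothingCount = 0;
    return similar;
  };
}

// Seven readings within 10 Hz of each other: one sets the reference,
// five are counted, and the seventh is accepted.
const accept = createPitchSmoother();
const accepted = [200, 201, 199, 200, 202, 200, 201].map((hz) => accept(hz));
console.log(accepted); // [false, false, false, false, false, false, true]
```

With these defaults, a short noise burst whose pitch keeps jumping never reaches the count threshold, while a sustained voiced sound does.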

Take a Photo after the Voice is Detected

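If the pitch of our voice is successfully detected, take a photo. Captures are limited to one every two seconds.

let photoTakenTime = 0; // timestamp of the last capture

function takePhoto(){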

let currentTime = Date.now();

if (currentTime - photoTakenTime < 1000*2) {

console.log("within 2 seconds since last capture");

return;

}

photoTakenTime = currentTime;

let video = document.getElementById("video");

let canvas = document.createElement("canvas");

let w = video.videoWidth;

let h = video.videoHeight;

canvas.width = w;

canvas.height = h;

let ctx = canvas.getContext('2d');

ctx.drawImage(video, 0, 0, w, h);

let img = document.createElement("img");

img.src = canvas.toDataURL();

document.getElementsByClassName("photos")[0].appendChild(img);

}
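The two-second check at the top of `takePhoto()` can be tried out without a camera by passing timestamps explicitly. This is a minimal sketch with a hypothetical `createCaptureGate` helper; only the 2000 ms interval is taken from the code above.

```javascript
// Hypothetical helper: allow an action at most once per minIntervalMs.
function createCaptureGate(minIntervalMs) {
  let lastCaptureTime = -Infinity; // no capture has happened yet
  return function shouldCapture(now) {
    if (now - lastCaptureTime < minIntervalMs) {
      return false; // still within the cooldown window
    }
    lastCaptureTime = now;
    return true;
  };
}

const shouldCapture = createCaptureGate(2000);
console.log(shouldCapture(1000)); // true: first capture
console.log(shouldCapture(2500)); // false: only 1.5 s since the last capture
console.log(shouldCapture(3000)); // true: 2 s have passed
```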

Integrate the Voice Detection Function to a Document Scanning App

Next, let’s integrate the voice detection function into a document scanning app using Mobile Web Capture.

Build a Web Document Scanning App

- Create a new HTML file.

  Similar to the previous file, it can select which camera, which microphone and which resolution to use.

      <!DOCTYPE html>
      <html>
      <head>
        <title>Scan Documents with Your Voice</title>
        <meta name="viewport" content="width=device-width,initial-scale=1.0,maximum-scale=1.0,user-scalable=0" />
        <style>
          #audio {
            display: none;
          }
          #container {
            max-width: 100%;
            height: 480px;
          }
        </style>
      </head>
      <body>
        <h2>Scan Documents with Your Voice</h2>
        <label>
          Camera:
          <select id="select-camera"></select>
        </label>
        <label>
          Microphone:
          <select id="select-microphone"></select>
        </label>
        <label>
          Resolution:
          <select id="select-resolution">
            <option value="640x480">640x480</option>
            <option value="1280x720">1280x720</option>
            <option value="1920x1080" selected>1920x1080</option>
            <option value="3840x2160">3840x2160</option>
          </select>
        </label>
        <button onclick="startScanning();">Start</button>
        <div id="container"></div>
        <audio autoplay controls muted id="audio"></audio>
      </body>
      </html>

- Include the libraries of Mobile Web Capture in the head.

      <script src="https://cdn.jsdelivr.net/npm/dynamsoft-core@3.0.30/dist/core.js"></script>
      <script src="https://cdn.jsdelivr.net/npm/dynamsoft-license@3.0.20/dist/license.js"></script>
      <script src="https://cdn.jsdelivr.net/npm/dynamsoft-document-normalizer@2.0.20/dist/ddn.js"></script>
      <script src="https://cdn.jsdelivr.net/npm/dynamsoft-capture-vision-router@2.0.30/dist/cvr.js"></script>
      <script src="https://cdn.jsdelivr.net/npm/dynamsoft-document-viewer@1.1.0/dist/ddv.js"></script>
      <link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/dynamsoft-document-viewer@1.1.0/dist/ddv.css">

- Initialize the core libraries with a license. You can apply for your license here.

      async function initMobileWebCapture(){
        Dynamsoft.Core.CoreModule.loadWasm(["DDN"]);
        Dynamsoft.DDV.Core.loadWasm();

        // Initialize DDN
        await Dynamsoft.License.LicenseManager.initLicense(
          "LICENSE-KEY",
          true
        );

        // Initialize DDV
        await Dynamsoft.DDV.Core.init();
      }

- Use Dynamsoft Document Normalizer as the document detection handler for Dynamsoft Document Viewer (Mobile Web Capture is based on the two products).

      async function initDocDetectModule(DDV, CVR) {
        const router = await CVR.CaptureVisionRouter.createInstance();
        await router.initSettings("{\"CaptureVisionTemplates\": [{\"Name\": \"Default\"},{\"Name\": \"DetectDocumentBoundaries_Default\",\"ImageROIProcessingNameArray\": [\"roi-detect-document-boundaries\"]},{\"Name\": \"DetectAndNormalizeDocument_Default\",\"ImageROIProcessingNameArray\": [\"roi-detect-and-normalize-document\"]},{\"Name\": \"NormalizeDocument_Binary\",\"ImageROIProcessingNameArray\": [\"roi-normalize-document-binary\"]}, {\"Name\": \"NormalizeDocument_Gray\",\"ImageROIProcessingNameArray\": [\"roi-normalize-document-gray\"]}, {\"Name\": \"NormalizeDocument_Color\",\"ImageROIProcessingNameArray\": [\"roi-normalize-document-color\"]}],\"TargetROIDefOptions\": [{\"Name\": \"roi-detect-document-boundaries\",\"TaskSettingNameArray\": [\"task-detect-document-boundaries\"]},{\"Name\": \"roi-detect-and-normalize-document\",\"TaskSettingNameArray\": [\"task-detect-and-normalize-document\"]},{\"Name\": \"roi-normalize-document-binary\",\"TaskSettingNameArray\": [\"task-normalize-document-binary\"]}, {\"Name\": \"roi-normalize-document-gray\",\"TaskSettingNameArray\": [\"task-normalize-document-gray\"]}, {\"Name\": \"roi-normalize-document-color\",\"TaskSettingNameArray\": [\"task-normalize-document-color\"]}],\"DocumentNormalizerTaskSettingOptions\": [{\"Name\": \"task-detect-and-normalize-document\",\"SectionImageParameterArray\": [{\"Section\": \"ST_REGION_PREDETECTION\",\"ImageParameterName\": \"ip-detect-and-normalize\"},{\"Section\": \"ST_DOCUMENT_DETECTION\",\"ImageParameterName\": \"ip-detect-and-normalize\"},{\"Section\": \"ST_DOCUMENT_NORMALIZATION\",\"ImageParameterName\": \"ip-detect-and-normalize\"}]},{\"Name\": \"task-detect-document-boundaries\",\"TerminateSetting\": {\"Section\": \"ST_DOCUMENT_DETECTION\"},\"SectionImageParameterArray\": [{\"Section\": \"ST_REGION_PREDETECTION\",\"ImageParameterName\": \"ip-detect\"},{\"Section\": \"ST_DOCUMENT_DETECTION\",\"ImageParameterName\": \"ip-detect\"},{\"Section\": \"ST_DOCUMENT_NORMALIZATION\",\"ImageParameterName\": \"ip-detect\"}]},{\"Name\": \"task-normalize-document-binary\",\"StartSection\": \"ST_DOCUMENT_NORMALIZATION\", \"ColourMode\": \"ICM_BINARY\",\"SectionImageParameterArray\": [{\"Section\": \"ST_REGION_PREDETECTION\",\"ImageParameterName\": \"ip-normalize\"},{\"Section\": \"ST_DOCUMENT_DETECTION\",\"ImageParameterName\": \"ip-normalize\"},{\"Section\": \"ST_DOCUMENT_NORMALIZATION\",\"ImageParameterName\": \"ip-normalize\"}]}, {\"Name\": \"task-normalize-document-gray\", \"ColourMode\": \"ICM_GRAYSCALE\",\"StartSection\": \"ST_DOCUMENT_NORMALIZATION\",\"SectionImageParameterArray\": [{\"Section\": \"ST_REGION_PREDETECTION\",\"ImageParameterName\": \"ip-normalize\"},{\"Section\": \"ST_DOCUMENT_DETECTION\",\"ImageParameterName\": \"ip-normalize\"},{\"Section\": \"ST_DOCUMENT_NORMALIZATION\",\"ImageParameterName\": \"ip-normalize\"}]}, {\"Name\": \"task-normalize-document-color\", \"ColourMode\": \"ICM_COLOUR\",\"StartSection\": \"ST_DOCUMENT_NORMALIZATION\",\"SectionImageParameterArray\": [{\"Section\": \"ST_REGION_PREDETECTION\",\"ImageParameterName\": \"ip-normalize\"},{\"Section\": \"ST_DOCUMENT_DETECTION\",\"ImageParameterName\": \"ip-normalize\"},{\"Section\": \"ST_DOCUMENT_NORMALIZATION\",\"ImageParameterName\": \"ip-normalize\"}]}],\"ImageParameterOptions\": [{\"Name\": \"ip-detect-and-normalize\",\"BinarizationModes\": [{\"Mode\": \"BM_LOCAL_BLOCK\",\"BlockSizeX\": 0,\"BlockSizeY\": 0,\"EnableFillBinaryVacancy\": 0}],\"TextDetectionMode\": {\"Mode\": \"TTDM_WORD\",\"Direction\": \"HORIZONTAL\",\"Sensitivity\": 7}},{\"Name\": \"ip-detect\",\"BinarizationModes\": [{\"Mode\": \"BM_LOCAL_BLOCK\",\"BlockSizeX\": 0,\"BlockSizeY\": 0,\"EnableFillBinaryVacancy\": 0,\"ThresholdCompensation\" : 7}],\"TextDetectionMode\": {\"Mode\": \"TTDM_WORD\",\"Direction\": \"HORIZONTAL\",\"Sensitivity\": 7},\"ScaleDownThreshold\" : 512},{\"Name\": \"ip-normalize\",\"BinarizationModes\": [{\"Mode\": \"BM_LOCAL_BLOCK\",\"BlockSizeX\": 0,\"BlockSizeY\": 0,\"EnableFillBinaryVacancy\": 0}],\"TextDetectionMode\": {\"Mode\": \"TTDM_WORD\",\"Direction\": \"HORIZONTAL\",\"Sensitivity\": 7}}]}");

        class DDNNormalizeHandler extends DDV.DocumentDetect {
          async detect(image, config) {
            if (!router) {
              return Promise.resolve({
                success: false
              });
            };

            let width = image.width;
            let height = image.height;
            let ratio = 1;
            let data;

            if (height > 720) {
              ratio = height / 720;
              height = 720;
              width = Math.floor(width / ratio);
              data = compress(image.data, image.width, image.height, width, height);
            } else {
              data = image.data.slice(0);
            }

            // Define DSImage according to the usage of DDN
            const DSImage = {
              bytes: new Uint8Array(data),
              width,
              height,
              stride: width * 4, //RGBA
              format: 10 // IPF_ABGR_8888
            };

            // Use DDN normalized module
            const results = await router.capture(DSImage, 'DetectDocumentBoundaries_Default');

            // Filter the results and generate corresponding return values
            if (results.items.length <= 0) {
              return Promise.resolve({
                success: false
              });
            };

            const quad = [];
            results.items[0].location.points.forEach((p) => {
              quad.push([p.x * ratio, p.y * ratio]);
            });

            const detectResult = this.processDetectResult({
              location: quad,
              width: image.width,
              height: image.height,
              config
            });

            return Promise.resolve(detectResult);
          }
        }

        DDV.setProcessingHandler('documentBoundariesDetect', new DDNNormalizeHandler())
      }

      function compress(
        imageData,
        imageWidth,
        imageHeight,
        newWidth,
        newHeight,
      ) {
        let source = null;
        try {
          source = new Uint8ClampedArray(imageData);
        } catch (error) {
          source = new Uint8Array(imageData);
        }

        const scaleW = newWidth / imageWidth;
        const scaleH = newHeight / imageHeight;
        const targetSize = newWidth * newHeight * 4;
        const targetMemory = new ArrayBuffer(targetSize);
        let distData = null;

        try {
          distData = new Uint8ClampedArray(targetMemory, 0, targetSize);
        } catch (error) {
          distData = new Uint8Array(targetMemory, 0, targetSize);
        }

        const filter = (distCol, distRow) => {
          const srcCol = Math.min(imageWidth - 1, distCol / scaleW);
          const srcRow = Math.min(imageHeight - 1, distRow / scaleH);
          const intCol = Math.floor(srcCol);
          const intRow = Math.floor(srcRow);

          let distI = (distRow * newWidth) + distCol;
          let srcI = (intRow * imageWidth) + intCol;

          distI *= 4;
          srcI *= 4;

          for (let j = 0; j <= 3; j += 1) {
            distData[distI + j] = source[srcI + j];
          }
        };

        for (let col = 0; col < newWidth; col += 1) {
          for (let row = 0; row < newHeight; row += 1) {
            filter(col, row);
          }
        }

        return distData;
      }

      // Configure document boundaries function
      await initDocDetectModule(Dynamsoft.DDV, Dynamsoft.CVR);

- Use the default image filter used in the image editor.

      // Configure image filter feature which is in edit viewer
      Dynamsoft.DDV.setProcessingHandler("imageFilter", new Dynamsoft.DDV.ImageFilter());

- Create instances of the three viewers: Capture Viewer, which opens the camera and scans a document; Perspective Viewer, which detects and modifies the document boundaries; and Edit Viewer, which allows editing and viewing the scanned documents. We need to configure their UIs and then create instances.

  - Initialize Capture Viewer.

        const captureViewerUiConfig = {
          type: Dynamsoft.DDV.Elements.Layout,
          flexDirection: "column",
          children: [
            {
              type: Dynamsoft.DDV.Elements.Layout,
              className: "ddv-capture-viewer-header-mobile",
              children: [
                {
                  type: "CameraResolution",
                  className: "ddv-capture-viewer-resolution",
                },
                Dynamsoft.DDV.Elements.Flashlight,
              ],
            },
            Dynamsoft.DDV.Elements.MainView,
            {
              type: Dynamsoft.DDV.Elements.Layout,
              className: "ddv-capture-viewer-footer-mobile",
              children: [
                Dynamsoft.DDV.Elements.AutoDetect,
                Dynamsoft.DDV.Elements.AutoCapture,
                {
                  type: "Capture",
                  className: "ddv-capture-viewer-captureButton",
                },
                {
                  // Bind click event to "ImagePreview" element
                  // The event will be registered later.
                  type: Dynamsoft.DDV.Elements.ImagePreview,
                  events:{
                    click: "showPerspectiveViewer"
                  }
                },
                Dynamsoft.DDV.Elements.CameraConvert,
              ],
            },
          ],
        };

        // Create a capture viewer
        captureViewer = new Dynamsoft.DDV.CaptureViewer({
          container: "container",
          uiConfig: captureViewerUiConfig,
          viewerConfig: {
            acceptedPolygonConfidence: 60,
            enableAutoDetect: false,
          }
        });

  - Initialize Perspective Viewer.

        const perspectiveUiConfig = {
          type: Dynamsoft.DDV.Elements.Layout,
          flexDirection: "column",
          children: [
            {
              type: Dynamsoft.DDV.Elements.Layout,
              className: "ddv-perspective-viewer-header-mobile",
              children: [
                {
                  // Add a "Back" button in perspective viewer's header and bind the event to go back to capture viewer.
                  // The event will be registered later.
                  type: Dynamsoft.DDV.Elements.Button,
                  className: "ddv-button-back",
                  events:{
                    click: "backToCaptureViewer"
                  }
                },
                Dynamsoft.DDV.Elements.Pagination,
                {
                  // Bind event for "PerspectiveAll" button to show the edit viewer
                  // The event will be registered later.
                  type: Dynamsoft.DDV.Elements.PerspectiveAll,
                  events:{
                    click: "showEditViewer"
                  }
                },
              ],
            },
            Dynamsoft.DDV.Elements.MainView,
            {
              type: Dynamsoft.DDV.Elements.Layout,
              className: "ddv-perspective-viewer-footer-mobile",
              children: [
                Dynamsoft.DDV.Elements.FullQuad,
                Dynamsoft.DDV.Elements.RotateLeft,
                Dynamsoft.DDV.Elements.RotateRight,
                Dynamsoft.DDV.Elements.DeleteCurrent,
                Dynamsoft.DDV.Elements.DeleteAll,
              ],
            },
          ],
        };

        // Create a perspective viewer
        perspectiveViewer = new Dynamsoft.DDV.PerspectiveViewer({
          container: "container",
          groupUid: captureViewer.groupUid,
          uiConfig: perspectiveUiConfig,
          viewerConfig: {
            scrollToLatest: true,
          }
        });

        perspectiveViewer.hide();

  - Initialize Edit Viewer.

        const editViewerUiConfig = {
          type: Dynamsoft.DDV.Elements.Layout,
          flexDirection: "column",
          className: "ddv-edit-viewer-mobile",
          children: [
            {
              type: Dynamsoft.DDV.Elements.Layout,
              className: "ddv-edit-viewer-header-mobile",
              children: [
                {
                  // Add a "Back" button to header and bind click event to go back to the perspective viewer
                  // The event will be registered later.
                  type: Dynamsoft.DDV.Elements.Button,
                  className: "ddv-button-back",
                  events:{
                    click: "backToPerspectiveViewer"
                  }
                },
                Dynamsoft.DDV.Elements.Pagination,
                Dynamsoft.DDV.Elements.Download,
              ],
            },
            Dynamsoft.DDV.Elements.MainView,
            {
              type: Dynamsoft.DDV.Elements.Layout,
              className: "ddv-edit-viewer-footer-mobile",
              children: [
                Dynamsoft.DDV.Elements.DisplayMode,
                Dynamsoft.DDV.Elements.RotateLeft,
                Dynamsoft.DDV.Elements.Crop,
                Dynamsoft.DDV.Elements.Filter,
                Dynamsoft.DDV.Elements.Undo,
                Dynamsoft.DDV.Elements.Delete,
                Dynamsoft.DDV.Elements.Load,
              ],
            },
          ],
        };

        // Create an edit viewer
        editViewer = new Dynamsoft.DDV.EditViewer({
          container: "container",
          groupUid: captureViewer.groupUid,
          uiConfig: editViewerUiConfig
        });

        editViewer.hide();

  - Define functions to make the three viewers work together.

        // Register an event in `captureViewer` to show the perspective viewer
        captureViewer.on("showPerspectiveViewer",() => {
          switchViewer(0,1,0);
        });

        // Register an event in `perspectiveViewer` to go back the capture viewer
        perspectiveViewer.on("backToCaptureViewer",() => {
          switchViewer(1,0,0);
          captureViewer.play().catch(err => {alert(err.message)});
        });

        // Register an event in `perspectiveViewer` to show the edit viewer
        perspectiveViewer.on("showEditViewer",() => {
          switchViewer(0,0,1)
        });

        // Register an event in `editViewer` to go back the perspective viewer
        editViewer.on("backToPerspectiveViewer",() => {
          switchViewer(0,1,0);
        });

        // Define a function to control the viewers' visibility
        const switchViewer = (c,p,e) => {
          captureViewer.hide();
          perspectiveViewer.hide();
          editViewer.hide();

          if(c) {
            captureViewer.show();
          } else {
            captureViewer.stop();
          }

          if(p) perspectiveViewer.show();
          if(e) editViewer.show();
        };

- Start scanning by starting Mobile Web Capture’s Capture Viewer.

      async function startScanning(){
        let selectedCamera = cameras[document.getElementById("select-camera").selectedIndex];
        await captureViewer.selectCamera(selectedCamera.deviceId);
        let selectedResolution = document.getElementById("select-resolution").selectedOptions[0].value;
        let width = parseInt(selectedResolution.split("x")[0]);
        let height = parseInt(selectedResolution.split("x")[1]);
        captureViewer.play({
          resolution: [width,height],
        }).catch(err => {
          alert(err.message)
        });
      }
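The detection handler above runs on a downscaled copy of each frame when the frame is taller than 720 pixels, then multiplies the detected corner points by the same ratio to map them back to the original image. The sketch below isolates that arithmetic. `planDownscale` and `toOriginalCoordinates` are hypothetical names, and only the 720-pixel limit and the formulas come from the handler.

```javascript
// Hypothetical helper: compute the size used for detection and the scale ratio.
function planDownscale(width, height, maxHeight = 720) {
  if (height <= maxHeight) {
    return { width, height, ratio: 1 }; // small frames are used as they are
  }
  const ratio = height / maxHeight;
  return { width: Math.floor(width / ratio), height: maxHeight, ratio };
}

// Hypothetical helper: map points found on the downscaled frame back to the original frame.
function toOriginalCoordinates(points, ratio) {
  return points.map((p) => [p.x * ratio, p.y * ratio]);
}

const plan = planDownscale(1920, 1080);
console.log(plan); // { width: 1280, height: 720, ratio: 1.5 }
console.log(toOriginalCoordinates([{ x: 100, y: 200 }], plan.ratio)); // [ [ 150, 300 ] ]
```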

Use Voice Detection to Take a Photo

- In Capture Viewer’s UI config, add a voice icon to the header.

      {
        type: Dynamsoft.DDV.Elements.Layout,
        className: "ddv-capture-viewer-header-mobile",
        children: [
          {
            type: "CameraResolution",
            className: "ddv-capture-viewer-resolution",
          },
      +   {
      +     type: Dynamsoft.DDV.Elements.Button,
      +     className: "voice-icon",
      +     style: {
      +       display: "flex",
      +     },
      +     events: {
      +       click: "enableVoiceDetection",
      +     },
      +   },
          Dynamsoft.DDV.Elements.Flashlight,
        ],
      },

  The style of the icon:

      .voice-icon {
        background-image: url("./voice.svg");
        background-position: top;
        background-size: contain;
        background-repeat: no-repeat;
        filter: invert(1);
      }

      .voice-icon.enabled {
        filter: invert(1) sepia(100%) saturate(100) hue-rotate(1400deg);
      }

- Register the event for the voice icon button.

      captureViewer.on("enableVoiceDetection",e => {
        console.log("click");
        let icon = document.getElementsByClassName("voice-icon")[0];
        if (icon.classList.contains("enabled")) {
          icon.classList.remove("enabled");
          toggleVoiceDetection(false);
        }else{
          icon.classList.add("enabled");
          toggleVoiceDetection(true);
        }
      });

      async function toggleVoiceDetection(enabled){
        let audio = document.getElementById("audio");
        if (enabled) {
          if (!audio.srcObject) {
            //open the microphone for detection
            let selectedMicrophone = microphones[document.getElementById("select-microphone").selectedIndex];
            // Pass the device ID string, not the device object
            const audioConstraints = {video: false, audio: {deviceId: selectedMicrophone.deviceId}};
            const audioStream = await navigator.mediaDevices.getUserMedia(audioConstraints);
            audio.srcObject = audioStream;
            startVoiceDetection();
          }
        }else{
          stopVoiceDetection();
          closeStream(audio.srcObject);
          audio.srcObject = null;
        }
      }

- Start an interval to detect the pitch if voice detection is enabled. The detection code is the same as the code in the previous part.

      let interval;
      let processing = false;

      function startVoiceDetection(){
        if (!document.getElementById("audio").srcObject){
          return;
        }
        stopVoiceDetection();
        audioCtx = new (window.AudioContext || window.webkitAudioContext)();
        analyser = audioCtx.createAnalyser();
        let stream = document.getElementById("audio").srcObject;
        let source = audioCtx.createMediaStreamSource(stream);
        source.connect(analyser);
        analyser.fftSize = 2048;

        const analyse = () => {
          processing = true;
          let bufferLength = analyser.fftSize;
          let buffer = new Float32Array(bufferLength);
          analyser.getFloatTimeDomainData(buffer);
          let autoCorrelateValue = autoCorrelate(buffer, audioCtx.sampleRate);
          processing = false;
          if (autoCorrelateValue === -1) {
            //'Too quiet...';
            return;
          }

          // Handle rounding
          let valueToDisplay = autoCorrelateValue;
          valueToDisplay = Math.round(valueToDisplay);

          function noteIsSimilarEnough() {
            // Check threshold for number, or just difference for notes.
            let diff = Math.abs(valueToDisplay - previousValueToDisplay);
            return diff < smoothingThreshold;
          }

          // Check if this value has been within the given range for n iterations
          if (noteIsSimilarEnough()) {
            if (smoothingCount < smoothingCountThreshold) {
              smoothingCount++;
              console.log("threshold not meet");
              return;
            } else {
              previousValueToDisplay = valueToDisplay;
              smoothingCount = 0;
            }
          } else {
            previousValueToDisplay = valueToDisplay;
            smoothingCount = 0;
            return;
          }
          takePhoto();
        }
        interval = setInterval(analyse,0)
      }

      function stopVoiceDetection(){
        processing = false;
        previousValueToDisplay = 0;
        clearInterval(interval);
      }

- Use Capture Viewer to take a photo.

      async function takePhoto(){
        let currentTime = Date.now();
        if (currentTime - photoTakenTime < 1000*2) {
          console.log("within 2 seconds since last capture");
          return;
        }
        await captureViewer.capture();
        photoTakenTime = currentTime;
      }
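In the code above, `photoTakenTime` is only updated after `captureViewer.capture()` resolves, and the detection loop keeps running on a zero-delay interval in the meantime, so `takePhoto()` may be entered again while a capture is still in flight. One possible safeguard is sketched below with a hypothetical `createGuardedTask` helper that ignores calls until the previous one has finished. It is an assumption about how this could be handled, not part of the demo.

```javascript
// Hypothetical helper: wrap an async task so overlapping calls are skipped.
function createGuardedTask(task) {
  let running = false;
  return async function guarded(...args) {
    if (running) {
      return false; // a previous call has not finished yet
    }
    running = true;
    try {
      await task(...args);
      return true;
    } finally {
      running = false; // always release the guard, even if the task throws
    }
  };
}

// Stand-in for an asynchronous capture call, used here only to count invocations.
let captureCalls = 0;
const guardedCapture = createGuardedTask(() => {
  captureCalls++;
  return Promise.resolve();
});

guardedCapture();
guardedCapture(); // skipped: the first call is still pending
console.log(captureCalls); // 1
```

In the page, the interval callback could call a guarded wrapper around `takePhoto()` instead of calling it directly.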

Limitations

Only the pitch is used for voice activity detection, so it may not work well in a noisy environment. Detection could be improved by using the Web Speech API to recognize the words we speak, so that voice commands like “take photo” trigger the capture. However, the current approach is language-agnostic, which may be an advantage over speech recognition.
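As a rough sketch of the voice-command idea, the snippet below checks recognized text for a capture phrase. `isCaptureCommand` and `startCommandRecognition` are hypothetical names, and the phrase list and the `en-US` language setting are assumptions. Speech recognition is not available in every browser, so the wiring function returns `null` when no implementation is found.

```javascript
// Hypothetical matcher: does a recognized transcript contain a capture command?
function isCaptureCommand(transcript, commands = ["take photo", "take a photo"]) {
  const normalized = transcript.toLowerCase().trim();
  return commands.some((command) => normalized.includes(command));
}

// Hypothetical wiring: start speech recognition and call onCommand for each match.
function startCommandRecognition(onCommand) {
  const Recognition = globalThis.SpeechRecognition || globalThis.webkitSpeechRecognition;
  if (!Recognition) {
    return null; // no speech recognition implementation in this environment
  }
  const recognition = new Recognition();
  recognition.continuous = true; // keep listening after the first result
  recognition.lang = "en-US"; // assumption: English commands
  recognition.onresult = (event) => {
    for (let i = event.resultIndex; i < event.results.length; i++) {
      if (isCaptureCommand(event.results[i][0].transcript)) {
        onCommand();
      }
    }
  };
  recognition.start();
  return recognition;
}

// Possible usage in the page:
// startCommandRecognition(() => takePhoto());
```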

Source Code

Get the source code of the demos to have a try: